Microsoft Chat Copilot vs. Azure ChatGPT - Which generative AI capability to choose for the enterprise?

I've written previously about Azure OpenAI (A practical look at using and building on Azure OpenAI, specifically the "ChatGPT-style" feature), Azure ChatGPT (Deploying an Enterprise ChatGPT with Azure OpenAI), and how to build your own service with custom data (Building a private ChatGPT service without custom code using Azure OpenAI).

Today, I'll cover a topic that comes up frequently when I talk with companies: creating an internal and private ChatGPT-style service that utilizes Azure OpenAI. You could build your own service from scratch, but consider using one of the existing prototypes to save time and effort.

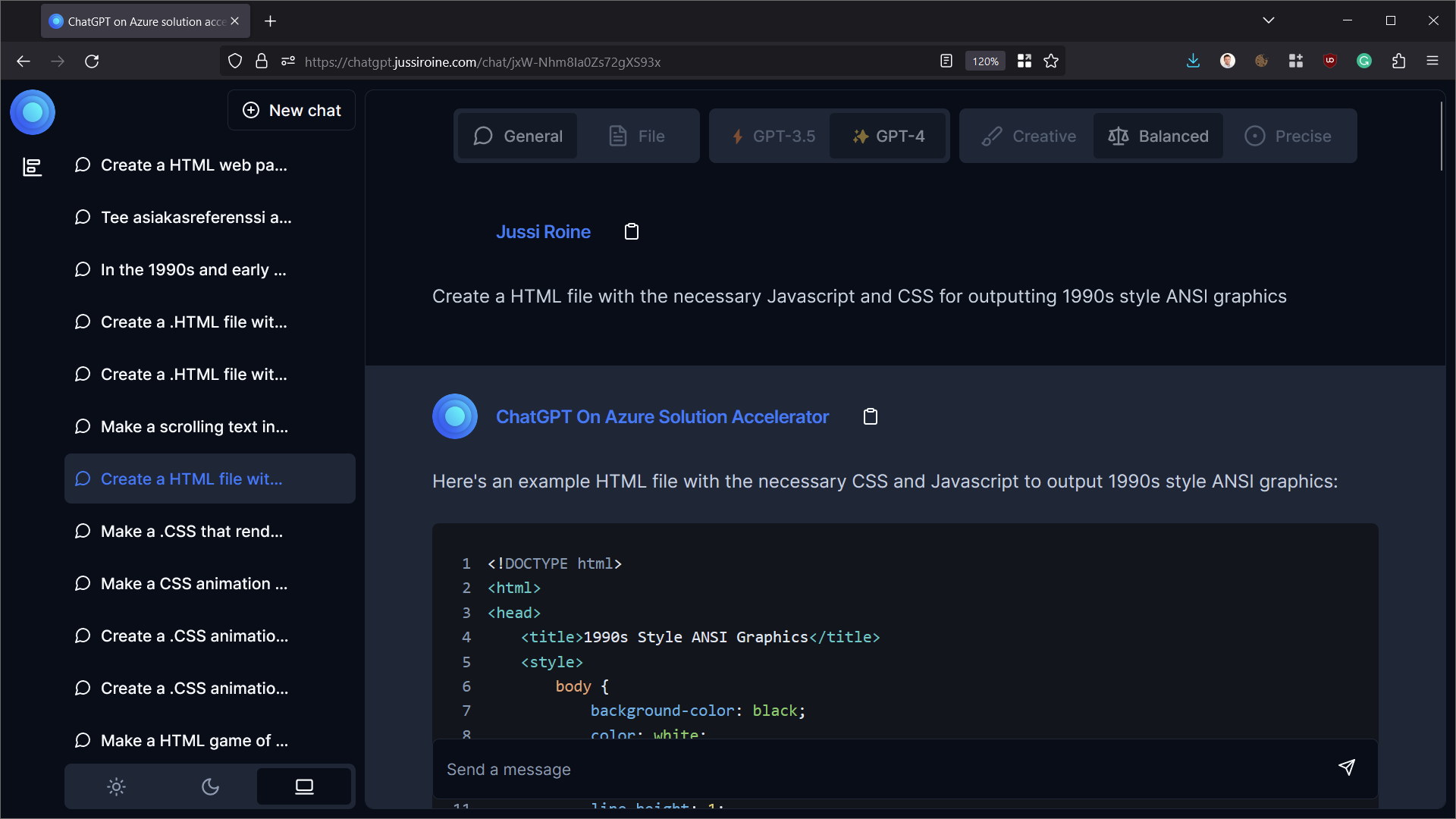

Azure ChatGPT

First, Azure ChatGPT - I wrote about it previously - is the open-source implementation of a ChatGPT web interface. Sadly, Microsoft pulled the repo for an unknown reason, and it hasn't been made public again. You can check the source code from my fork here. This service utilizes Azure Web App to host the interface, Azure Cognitive Search as a document vector database, and Cosmos DB for chat history. It works, and it's pretty good.

It can utilize GPT-3.5 Turbo and GPT-4 and is easy to use.

I use it daily in my private instance - mainly because it's handy, and I can secure it in Azure.

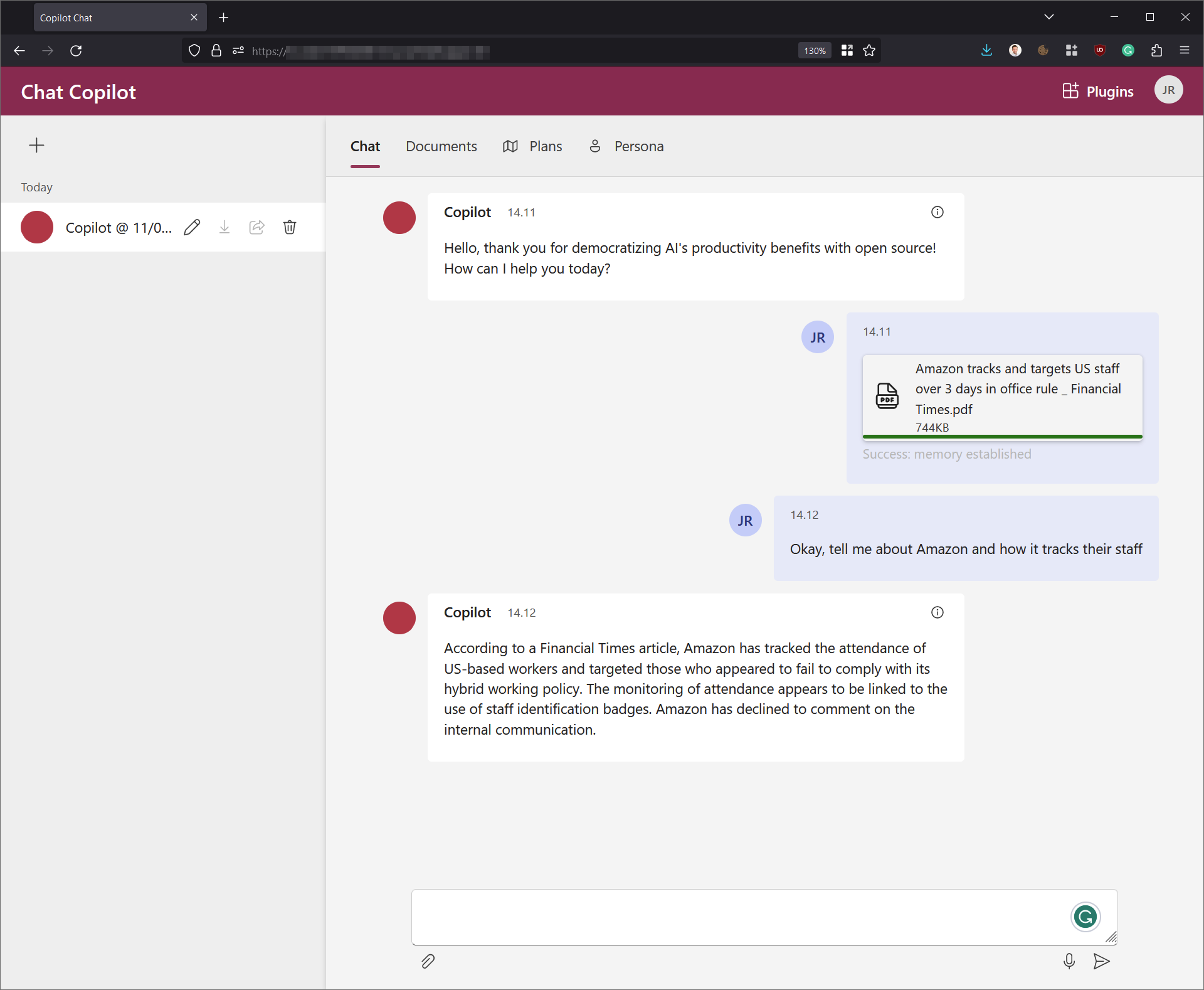

Chat Copilot

The other viable option is Microsoft's Chat Copilot. This is not Microsoft 365 Copilot or any of the other commercial Copilot offerings. This prototype, or proof-of-concept project, hosts the web interface on an Azure Static Web App, and the API is made with .NET Core. It can utilize Cosmos DB for memory (storing chats, etc.) and Azure Cognitive Search to store embeddings.

The repo for Chat Copilot is here.

I'm more comfortable with Chat Copilot, as the API is made with .NET Core, and I can troubleshoot it quickly with Visual Studio 2022 running a local copy.

Chat Copilot is also a bit more comprehensive: it has built-in support for plugins, and you can modify the personas (system messages).

Differences between Azure ChatGPT and Chat Copilot

The main difference between Azure ChatGPT and Chat Copilot is that they use different approaches to accessing your generative AI models. Azure ChatGPT utilizes LangChain - a Python-based framework for connecting to models. Chat Copilot utilizes Semantic Kernel - Microsoft's SDK for achieving much of the same.

The same Azure OpenAI models are usually utilized for both - GPT-3.5-Turbo and text-embedding-ada-002.

Azure ChatGPT is rock solid. Once it's up and running, it just works. Users authenticate via Microsoft Entra ID and can upload documents or utilize the ChatGPT-style chat.

Chat Copilot aims to be more modern. It's also much more fragile. Setting it up took hours: I could get it running on localhost, but pushing to Azure broke something with the API. There are numerous dependencies, and updating these when things change isn't trivial. On the other hand, the interface for Chat Copilot is more advanced.

Should you wait for Microsoft 365 Copilot?

That is a good question. At the time of writing, Microsoft 365 Copilot is still in private preview. The service will eventually be vastly more helpful than just a chatbox. The two services I'm running - Azure ChatGPT and Chat Copilot - are more like sample projects you can use to build your own Copilot-style service.

The obvious downside of Microsoft 365 Copilot is that it will cost you $30/user/month. The two other services do not have per-user licensing attached - just Azure consumption.
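To make the licensing comparison concrete, here is a rough sketch in Python. Only the $30/user/month figure comes from the text above. The fixed infrastructure cost, the token price, and the per-user token volume are hypothetical placeholders, not real Azure prices, so substitute numbers from your own Azure bill before drawing conclusions.

```python
import math
from dataclasses import dataclass

COPILOT_PRICE_PER_USER = 30.0  # USD per user per month, the figure quoted above


@dataclass
class ConsumptionAssumptions:
    """Hypothetical inputs; replace them with figures from your own Azure bill."""
    fixed_monthly_infra: float      # web app, search, and database, USD per month
    price_per_1k_tokens: float      # blended model price, USD per 1,000 tokens
    tokens_per_user_per_month: int  # average prompt plus completion tokens


def per_user_licensing_cost(users: int) -> float:
    """Monthly cost when every user needs a license."""
    return users * COPILOT_PRICE_PER_USER


def consumption_cost(users: int, a: ConsumptionAssumptions) -> float:
    """Monthly cost of a self-hosted service billed purely on consumption."""
    token_cost = users * a.tokens_per_user_per_month / 1000 * a.price_per_1k_tokens
    return a.fixed_monthly_infra + token_cost


def break_even_users(a: ConsumptionAssumptions):
    """Smallest user count at which self-hosting is strictly cheaper, or None."""
    tokens_per_user_cost = a.tokens_per_user_per_month / 1000 * a.price_per_1k_tokens
    margin = COPILOT_PRICE_PER_USER - tokens_per_user_cost
    if margin <= 0:
        return None  # token usage alone already costs as much as a license
    # consumption < licensing  <=>  fixed_monthly_infra < users * margin
    return math.floor(a.fixed_monthly_infra / margin) + 1


# Example with made-up numbers: 500 USD of fixed infrastructure per month,
# 0.002 USD per 1,000 tokens, and 200,000 tokens per user per month.
example = ConsumptionAssumptions(500.0, 0.002, 200_000)
print(break_even_users(example))
```

The sketch ignores the engineering time needed to run and maintain your own service, which can easily outweigh the Azure consumption itself.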

I see huge potential for capable companies to build their internal services using these two projects as a starting point. Microsoft 365 Copilot is more of a fixed service that runs at the will and vision of Microsoft.

In closing

We live in exciting times, as a new capability or opportunity pops up each week with generative AI. At the end of the day, it's pretty simple: utilize Azure OpenAI for your generative AI needs. Choose a suitable UI layer, and utilize Azure Cognitive Search for vector indexes and Cosmos DB for history. That's mostly it. Everything else - such as Microsoft 365 Copilot - is an add-on and perhaps more suitable for end-users than businesses building their tooling.
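The moving parts described above can be sketched in a few lines of Python. This is not how Azure ChatGPT or Chat Copilot is implemented. It is a minimal illustration in which an in-memory class stands in for the vector index (Azure Cognitive Search in the real projects) and another stands in for the history store (Cosmos DB). All class and function names are hypothetical, and the two-dimensional embeddings are toy values rather than real model output.

```python
import math
from dataclasses import dataclass, field


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class VectorIndex:
    """In-memory stand-in for the vector index (hypothetical)."""
    entries: list = field(default_factory=list)  # (embedding, text) pairs

    def add(self, embedding, text):
        self.entries.append((embedding, text))

    def search(self, query_embedding, top_k=2):
        """Return the top_k texts most similar to the query embedding."""
        ranked = sorted(
            self.entries,
            key=lambda entry: cosine_similarity(query_embedding, entry[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:top_k]]


@dataclass
class ChatHistory:
    """In-memory stand-in for the chat history store (hypothetical)."""
    messages: list = field(default_factory=list)

    def append(self, role, content):
        self.messages.append({"role": role, "content": content})

    def recent(self, limit=6):
        """Return the last `limit` messages, oldest first."""
        return self.messages[-limit:]


def build_messages(system_message, context_chunks, history, user_input):
    """Assemble a chat payload: system message with context, history, new input."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    system = f"{system_message}\n\nUse this context when relevant:\n{context}"
    return [
        {"role": "system", "content": system},
        *history.recent(),
        {"role": "user", "content": user_input},
    ]


# Toy usage: the embeddings would normally come from an embedding model.
index = VectorIndex()
index.add([1.0, 0.0], "Vacation policy document")
index.add([0.0, 1.0], "Expense policy document")
history = ChatHistory()
history.append("user", "Hi")
history.append("assistant", "Hello, how can I help?")
chunks = index.search([0.9, 0.1], top_k=1)
payload = build_messages("You are an internal assistant.", chunks, history,
                         "How many vacation days do I get?")
```

In a real deployment, the resulting message list would be sent to a chat model deployed in Azure OpenAI, and both the question and the answer would be written back to the history store.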