Getting started with DALL-E 3 on Azure OpenAI

Generative AI is usually associated with ChatGPT - a splendid service that feels very intelligent. DALL-E, another generative AI model that generates images from text prompts, is perhaps lesser known but still widely used. Bing, for instance, now uses DALL-E 3 to generate clever images in a breeze.

The DALL-E 3 model is one of the most advanced text-to-image models and is now available under Azure OpenAI. Let's take a look!

Brief primer - what was Azure OpenAI again?

Azure OpenAI is an Azure-hosted PaaS that offers the same large language models that power OpenAI's ChatGPT - GPT-3.5 Turbo and GPT-4. The significant difference is that you control where the service resides (West Europe, US East, etc.) and manage authentication, authorization, usage, telemetry, monitoring, and all other aspects as you would with any other Azure-provided PaaS service.

For anyone building business apps that rely on generative AI, and specifically the models initially made by OpenAI, I feel Azure OpenAI is the only viable option for hosting and inferencing.

Provisioning the DALL-E 3 model

Right now, it's a bit of a mess. You have the old Azure OpenAI Studio that allows you to provision the DALL-E 3 model. And then there's the new and shiny Azure AI Studio, which - for some odd reason - doesn't allow you to provision the DALL-E 3 model.

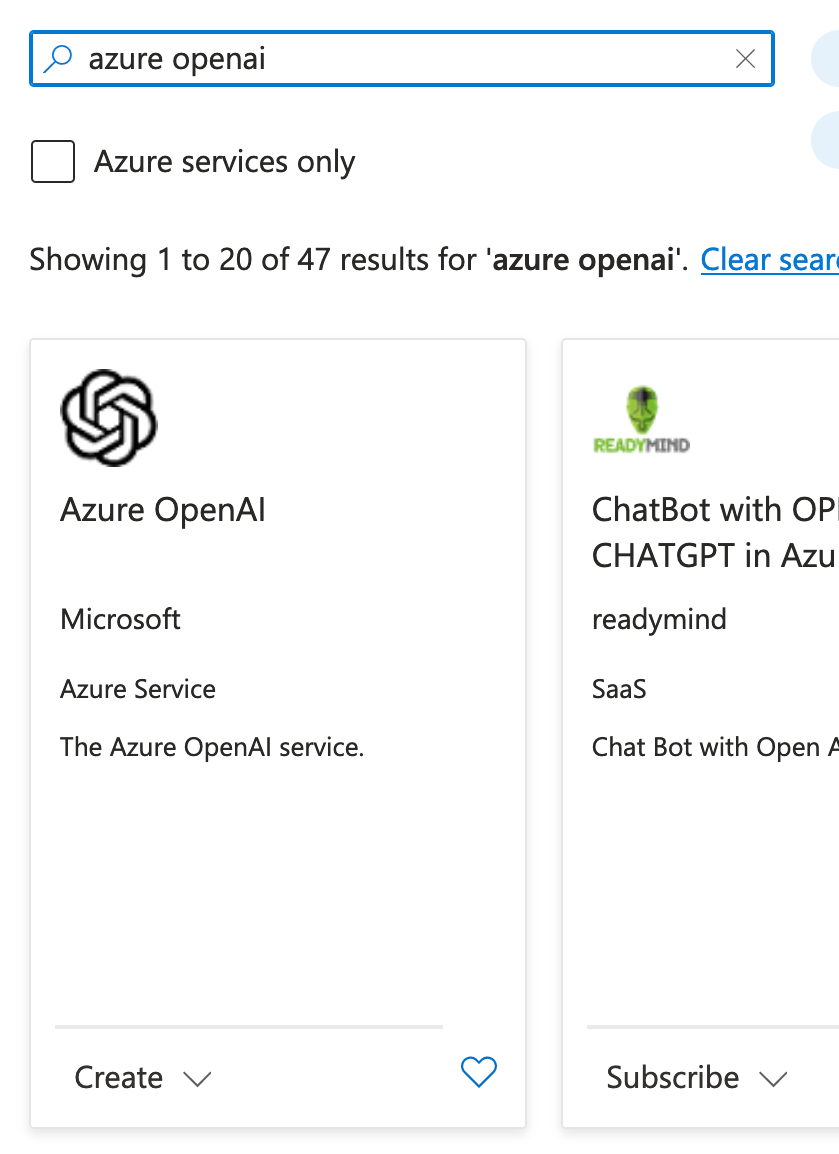

For this to work, you'll first need a new instance of the Azure OpenAI service. Head on over to the Azure Portal and create one.

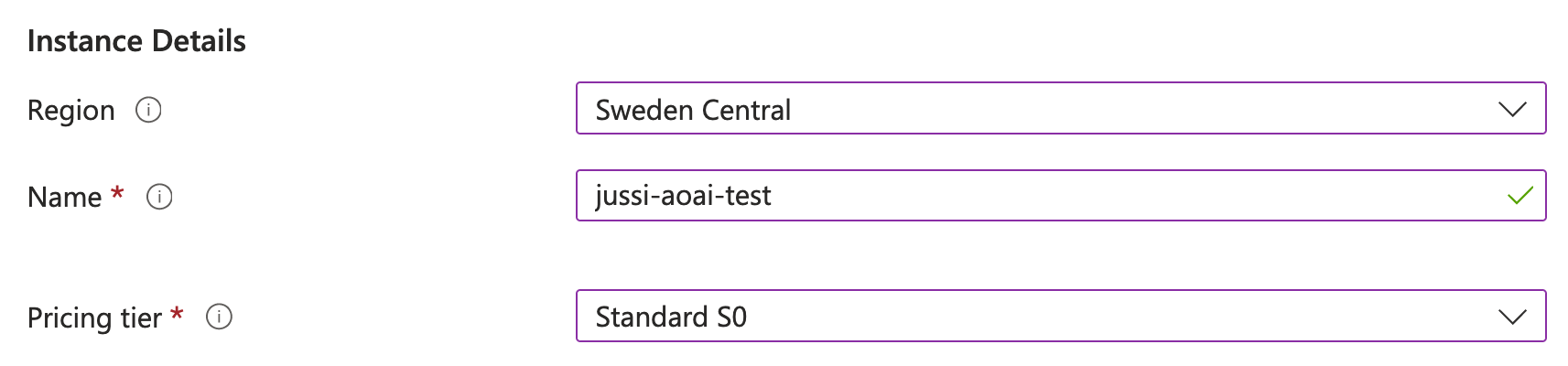

Your best bet now is to choose Sweden Central for the region if you're in Europe. I find that the Swedes usually have ample GPU capacity available. Right now, DALL-E 3 is not available in other Azure regions.

Once created - and this usually takes just a few seconds - click Model deployments and then click Manage deployments. This takes you to the older Azure OpenAI Studio at https://oai.azure.com/.

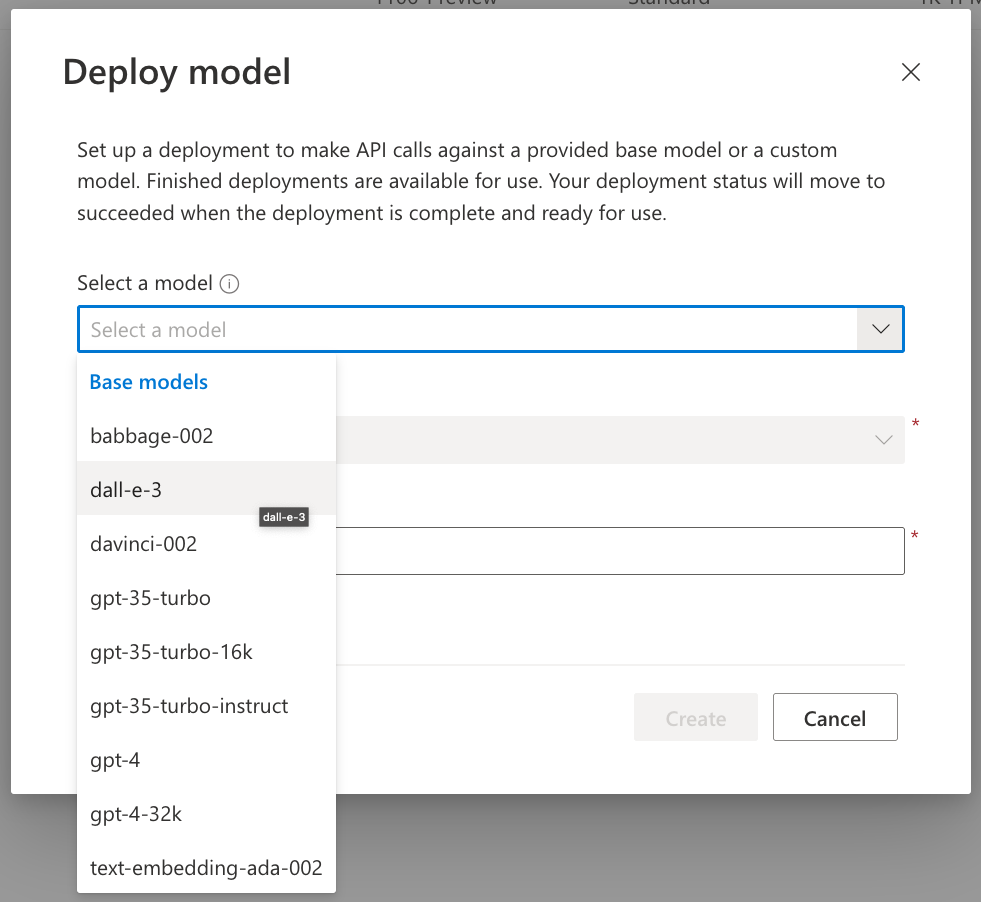

You can now provision the actual DALL-E 3 model by clicking Create new deployment.

I generally suggest naming deployments after their base model names (here, dall-e-3). This makes it easier to associate them later in your apps and code.

Trying out the DALL-E 3 model

While in Azure OpenAI Studio, you can now try the model immediately. Keep in mind that it isn't free. The listed cost per 100 images generated is 1.822 euros, which means each image costs you about 1.8 cents.

Click on DALL-E (Preview) in the Azure OpenAI Studio navigation, then type your prompt to start generating images. Here are a few fun ones I prompted last night.

Prompt: a consultant sitting in a meeting room with laptops, a rack full of servers and network cables in LEGO

It's pretty good for a first try. The rack servers look a bit off, though.

Let's try another one.

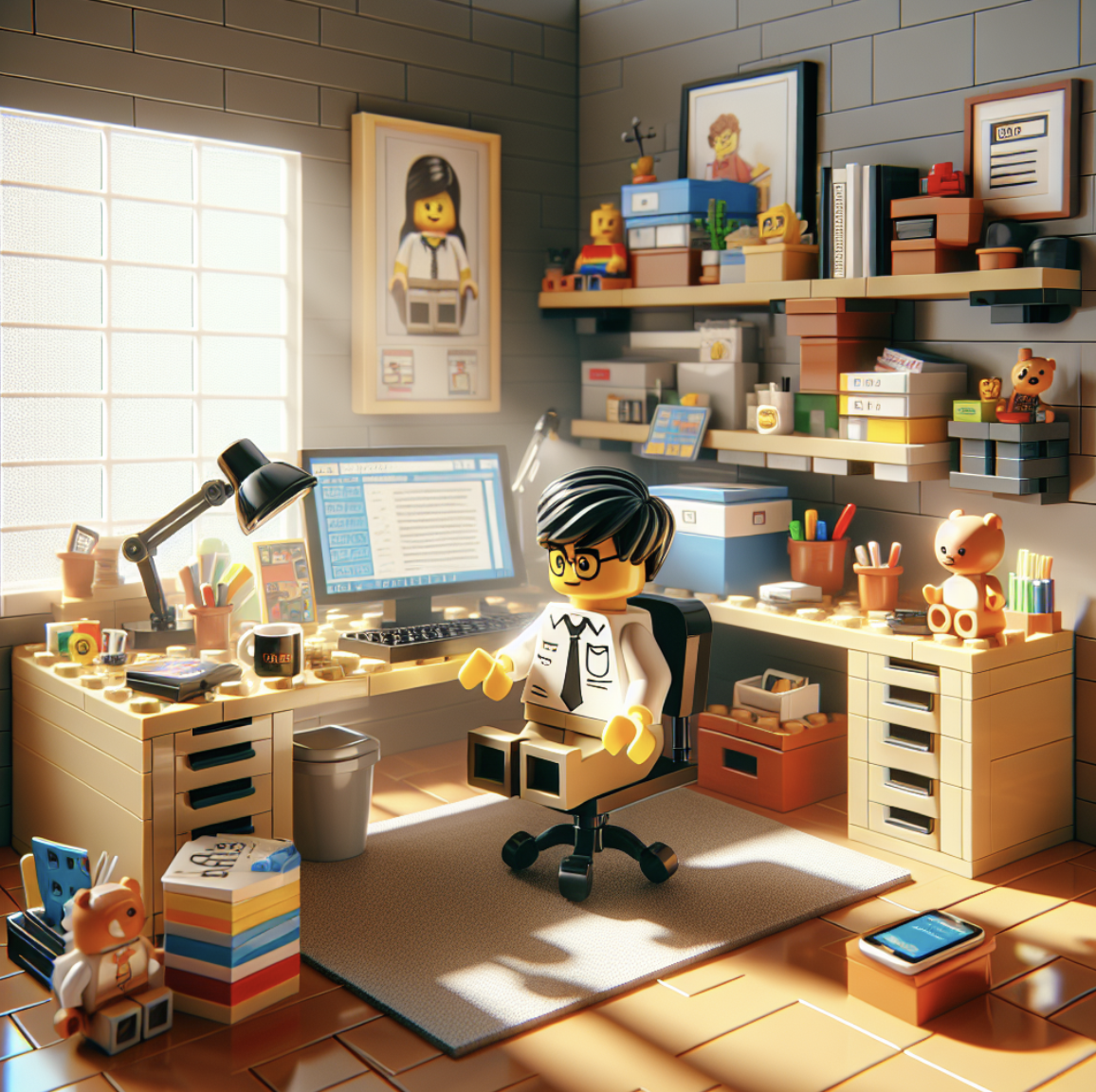

Prompt: a consultant home office in LEGO

Again, it's pretty great! The coffee mug is an excellent little detail, as are the teddy bears. There are no apparent issues in this picture.

One more!

Prompt: a sloth playing a Spiderman game on a PlayStation

Sweet! The PlayStation is a bit off, but it's pretty close still.

It takes about 15-20 seconds to generate an image at 1024x1024 resolution. If you fiddle with the (limited) settings in the playground, you can get a bit more detail, which ups the generation time closer to 40 seconds per image. Here's an example:

Prompt: a sleepy man getting his first cup of coffee in the morning coffee shop, laptop

Wow. DALL-E 3 generates images I'm mostly pleased with on the first try. Compared to many other text-to-image generators, I need to prompt less here. The overall quality of images is far superior.

Let me try one more with the settings cranked to high (1024x1792).

Prompt: a woman building a complex machine in a factory while listening to music

Well, it could be better, but I'm nitpicking. The audio wire from the headphones looks off. The fingers, however, are spot on. You can immediately tell it's generated with AI, though. And that's one of the shortcomings of DALL-E 3 - the prompt is your only lever for tweaking the image. The other controls you would usually have with text-to-image generators are not available.

A bit more about the pricing

I'm still a bit confused about the pricing, though. The Azure Pricing page lists the price I wrote above - about 1.8 euros per 100 images. But why can I choose between small, medium, and large images? And I can crank up the detail, which also seems to double the processing time. Wouldn't this affect the cost?

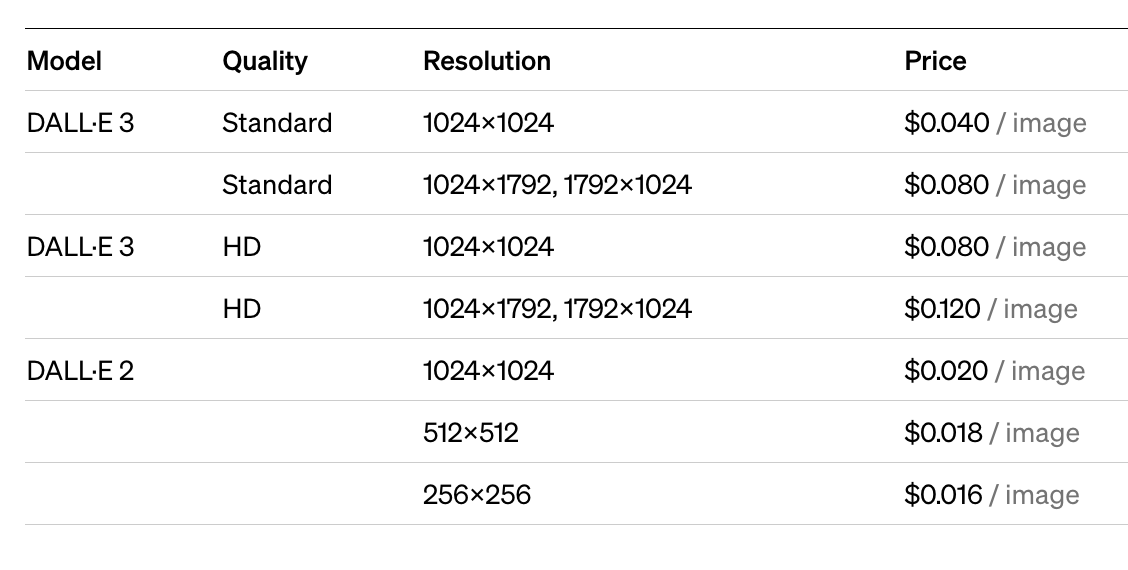

Let's compare to OpenAI pricing for DALL-E 3:

Well, it's a different story. Standard images are about 4 cents each, while HD images are up to 12 cents each. At the HD end, that's about six times more than I had in my "calculations"!

Microsoft announced DALL-E 3 availability in November during Ignite 2023. I vaguely recall seeing a note somewhere that the pricing follows OpenAI pricing; thus, I suspect the Azure Pricing page still reflects the old DALL-E 2 prices (as that would align with the OpenAI pricing page now). It is probably safe to assume that generating images with DALL-E 3 will set you back between 4 and 12 cents per image.

Yesterday, I had a cup of cappuccino in a café here in Helsinki. A regular cup was 4.4 euros. It was either that or about 100 images generated with DALL-E 3 at the standard price. But it was a good cup of cappuccino.
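To make the back-of-the-envelope numbers above explicit, here is a small Python sketch. It only uses figures quoted in this post: 1.822 cents per image from the Azure Pricing page, 4 and 12 cents from the OpenAI price list, and the 4.40 euro cappuccino. The function name `images_for_budget` is made up for this illustration, and the sketch ignores the difference between euros and dollars, so treat the results as rough.

```python
# Per-image prices in cents, as quoted in this post (currency differences ignored).
AZURE_LISTED_CENTS = 1.822      # Azure Pricing page: 1.822 euros per 100 images
OPENAI_STANDARD_CENTS = 4.0     # OpenAI: standard quality
OPENAI_HD_MAX_CENTS = 12.0      # OpenAI: HD quality, upper end


def images_for_budget(budget_cents: float, price_cents: float) -> int:
    """Return how many whole images fit into the budget at the given price."""
    return int(budget_cents // price_cents)


def price_ratio(price_cents: float, baseline_cents: float = AZURE_LISTED_CENTS) -> float:
    """Return how many times more expensive a price is than the baseline."""
    return price_cents / baseline_cents


if __name__ == "__main__":
    cappuccino_cents = 440  # the 4.40 euro cappuccino
    print(images_for_budget(cappuccino_cents, OPENAI_STANDARD_CENTS))  # 110
    print(images_for_budget(cappuccino_cents, OPENAI_HD_MAX_CENTS))    # 36
    print(round(price_ratio(OPENAI_STANDARD_CENTS), 1))                # 2.2
    print(round(price_ratio(OPENAI_HD_MAX_CENTS), 1))                  # 6.6
```

So one cappuccino buys roughly 110 standard images, but only about 36 images at the top HD price.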

What about content safety?

I did hit the obvious content safety blockers almost immediately in my tests. I was drawing a blank and asked my 6-year-old to give me a fun prompt. His initial prompt went along the lines of "and a Fortnite character trying to play Spiderman on Playstation and there's Venom and they are shooting webs and swinging around!!!" – and I guess Fortnite was key here. Content safety slaps you on the wrist and tells you to rephrase your prompt.

A friend of mine asked me to generate a logo with the following prompt: "A fox strangling someone". He had a clever idea here, but I figured that content safety would set boundaries, so I tweaked the prompt to "a logo of a fox that is playfully strangling someone". And that worked:

I rarely see someone smiling or laughing when being strangled!

You can create your own content filters, but they seem to be layered on top of the built-in content safety filters rather than replacing them. So, forget trying to generate those Fortnite LEGO models in DALL-E 3!
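If you call the model from code rather than the playground, a blocked prompt shows up as an HTTP error instead of a friendly message. Below is a minimal Python sketch of turning such an error into something readable. It rests on assumptions that are not from the tests above: that the service answers with a JSON body shaped like `{"error": {"code": ..., "message": ...}}`, and that the sample code `content_policy_violation` is what a rejection looks like. The helper name `explain_rejection` is hypothetical, and the sketch falls back gracefully if the body looks different.

```python
import io
import json
import urllib.error


def explain_rejection(err: urllib.error.HTTPError) -> str:
    """Turn an HTTP error from the image endpoint into a readable message.

    Assumes (not confirmed here) a JSON body like
    {"error": {"code": "...", "message": "..."}}.
    """
    try:
        body = json.loads(err.read().decode("utf-8"))
    except (ValueError, UnicodeDecodeError):
        return f"HTTP {err.code}: (no JSON error body)"

    error = body.get("error") if isinstance(body, dict) else None
    if not isinstance(error, dict):
        error = {}
    code = error.get("code", "unknown")
    message = error.get("message", "")
    return f"HTTP {err.code} [{code}]: {message}"


if __name__ == "__main__":
    # Simulated rejection; the body below is an assumed example, not a captured response.
    fake_body = b'{"error": {"code": "content_policy_violation", "message": "Please rephrase."}}'
    fake = urllib.error.HTTPError(
        "https://example.invalid", 400, "Bad Request", {}, io.BytesIO(fake_body)
    )
    print(explain_rejection(fake))
```

In a real app you would call this from an `except urllib.error.HTTPError` block and show the message to the user so they can rephrase the prompt.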

Using DALL-E 3 in your apps

The model works precisely like other generative AI models in Azure OpenAI. You can call your models through a common REST API.

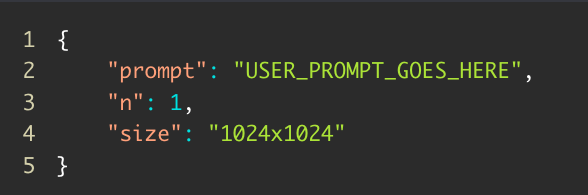

The payload is pretty simple:

And the REST API endpoint is https://{your-instance}.openai.azure.com/openai/deployments/dall-e-3/images/generations?api-version=2023-12-01-preview

Once you call this, you can expect an image back, so it's up to your app to handle the rest of the logic.
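As a rough illustration of the call described above, here is a minimal Python sketch using only the standard library. The endpoint and API version come from the URL in this post. Everything else is an assumption based on my understanding of the Azure OpenAI image generation API: the payload fields `prompt`, `n`, `size` and `quality`, the `api-key` header, and a JSON response with the image URL at `data[0].url`. The function names and the environment variable names are made up for this example.

```python
import json
import os
import urllib.request

API_VERSION = "2023-12-01-preview"  # taken from the endpoint URL in this post


def build_request(instance: str, api_key: str, prompt: str,
                  deployment: str = "dall-e-3",
                  size: str = "1024x1024",
                  quality: str = "standard") -> urllib.request.Request:
    """Build (but do not send) the POST request for one image."""
    url = (f"https://{instance}.openai.azure.com/openai/deployments/"
           f"{deployment}/images/generations?api-version={API_VERSION}")
    # Assumed payload shape; adjust if your API version expects other fields.
    payload = {"prompt": prompt, "n": 1, "size": size, "quality": quality}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def generate_image_url(instance: str, api_key: str, prompt: str) -> str:
    """Send the request and return the URL of the generated image."""
    request = build_request(instance, api_key, prompt)
    # Generation can take 15-40 seconds, so allow a generous timeout.
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["data"][0]["url"]  # assumed response shape


if __name__ == "__main__":
    # Hypothetical environment variable names for this sketch.
    instance = os.environ.get("AOAI_INSTANCE")
    key = os.environ.get("AOAI_KEY")
    if instance and key:
        print(generate_image_url(instance, key, "a consultant home office in LEGO"))
    else:
        print("Set AOAI_INSTANCE and AOAI_KEY to try a real call.")
```

Splitting the request construction from the network call keeps the payload easy to inspect and test without spending money on image generation.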

A few more images

You can tweak the image output a bit with the prompt. Here are a few examples.

Prompt: an IT nerd in the morning traffic commuting to a customer site. Watercolor painting style.

And another:

Prompt: fortnite in LEGO

I'm surprised "Fortnite" worked this time. It's more LEGO than Fortnite, though.

One last one:

Prompt: playing Nintendo at home, pixel art

Ah, it brings back memories from the early 1990s with the 8-bit Nintendo! :)