Building and using a Machine Learning model for Power Platform using Lobe

About a year ago, I wrote about a fantastic new app called Lobe. You install it locally and use it to create Machine Learning models that you can then use elsewhere. It’s pretty great, as it takes away all the hassle of working with Machine Learning. The limitation I ran into in my previous post was that exporting the model and embedding it elsewhere was somewhat challenging: you’d have to dive deep into TensorFlow or build a custom web app from scratch to use your model. I trusted this challenge would resolve itself, and in a way, it did with the latest update to Lobe.

What has changed?

Lobe has seen a few significant updates in the past year. One of those, announced in November 2021, is support for exporting your Lobe-based models directly to Power Platform. You can create your Lobe models and, with the click of a button, publish them directly to your Power Platform tenant. More technically, you publish a Machine Learning model for use with AI Builder, a service within Power Platform. You can then use your Lobe-based model in Power Automate and Power Apps, for example.

Let’s do this! First, create a model.

To try this out, download and install Lobe, then run it on your local machine.

You’re then going to need images for your model, so decide what you want to train it for. Previously, I used Lobe to build a model that detects different gym weights (plates) based on their color. The more images you have, the better your model will be. If you want to get a model up and running quickly, I recommend using Microsoft’s sample images for detecting different fruit. Download the .ZIP package here: https://aka.ms/fruit-objects

The images include bananas, oranges, and apples. Some photos show a combination of these, which is trickier with Lobe, as it cannot detect multiple objects in a single image (at least during the current beta). For a more detailed model that handles multiple objects, you should use Microsoft’s Custom Vision service instead.

Once Lobe is running, drag and drop all the images from your local disk into Lobe under Label:

Then click through the images and label each one. I suggest using clear labels, such as orange, apple, and banana. This makes it easier to tell the sets apart later if you add more images.

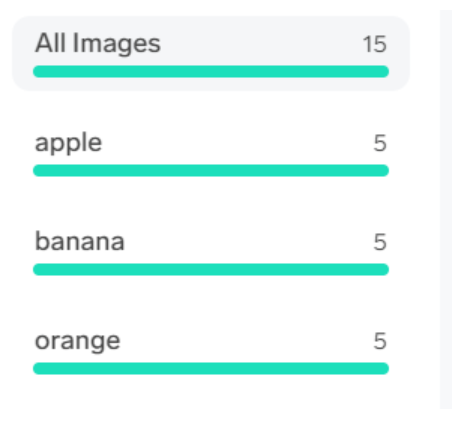

I discarded all images with multiple objects to make this easier to follow. I ended up with 15 images from the Microsoft sample image set:

This is still suboptimal, as I need a few more images of oranges to complete the model. When you click Train, Lobe will kindly nudge you about missing images. I found that adding just one more picture of an orange provided enough data for this simple model. For actual production use, you’d probably want dozens or hundreds of images to make the model reliable.
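If you’re collecting your own images, a quick count per label helps you spot an underrepresented label (like orange here) before Lobe flags it. The Python sketch below assumes you track your labeled images as (filename, label) pairs; that format, the function name, and the minimum of 5 images per label are assumptions for illustration, not values taken from Lobe.

```python
from collections import Counter

# Assumed minimum per label, for illustration only; Lobe tells you
# in the app when a label needs more images.
MIN_IMAGES_PER_LABEL = 5


def underrepresented_labels(labeled_images, minimum=MIN_IMAGES_PER_LABEL):
    """Return {label: count} for each label with fewer than `minimum` images.

    `labeled_images` is an iterable of (filename, label) pairs, a hypothetical
    stand-in for however you keep track of your labeled dataset.
    """
    counts = Counter(label for _filename, label in labeled_images)
    return {label: n for label, n in counts.items() if n < minimum}


# Hypothetical 15-image fruit dataset with too few oranges.
dataset = (
    [(f"apple_{i}.jpg", "apple") for i in range(6)]
    + [(f"banana_{i}.jpg", "banana") for i in range(5)]
    + [(f"orange_{i}.jpg", "orange") for i in range(4)]
)

print(underrepresented_labels(dataset))  # {'orange': 4}
```

Note that a label with no images at all won’t show up here, since it’s never counted; check for that separately if your label list is fixed in advance.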

Training occurs automatically each time you add and label new images. This takes only a second on my workstation, but with larger datasets, you’ll need to wait a bit longer. You can then test your model by clicking Use and providing an image that isn’t in your dataset. You can also add each image you experiment with to your dataset.
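If you test with several images from outside your dataset, a simple tally gives you a rough feel for how well the model generalizes. Lobe shows each prediction in the app, so this Python sketch assumes you’ve noted the expected and predicted labels yourself; the record format and the function name are hypothetical.

```python
def holdout_accuracy(results):
    """Share of out-of-dataset test images the model labeled correctly.

    `results` is a list of (expected_label, predicted_label) pairs that you
    note down while testing in Lobe's Use view (a hypothetical record format).
    """
    if not results:
        raise ValueError("record at least one test result first")
    correct = sum(1 for expected, predicted in results if expected == predicted)
    return correct / len(results)


# Hypothetical results from four test images.
results = [
    ("apple", "apple"),
    ("banana", "banana"),
    ("orange", "apple"),
    ("orange", "orange"),
]
print(f"{holdout_accuracy(results):.0%}")  # 75%
```

Tally the results before approving the images into your dataset: once an image is part of the training data, it no longer tells you much about unseen images.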

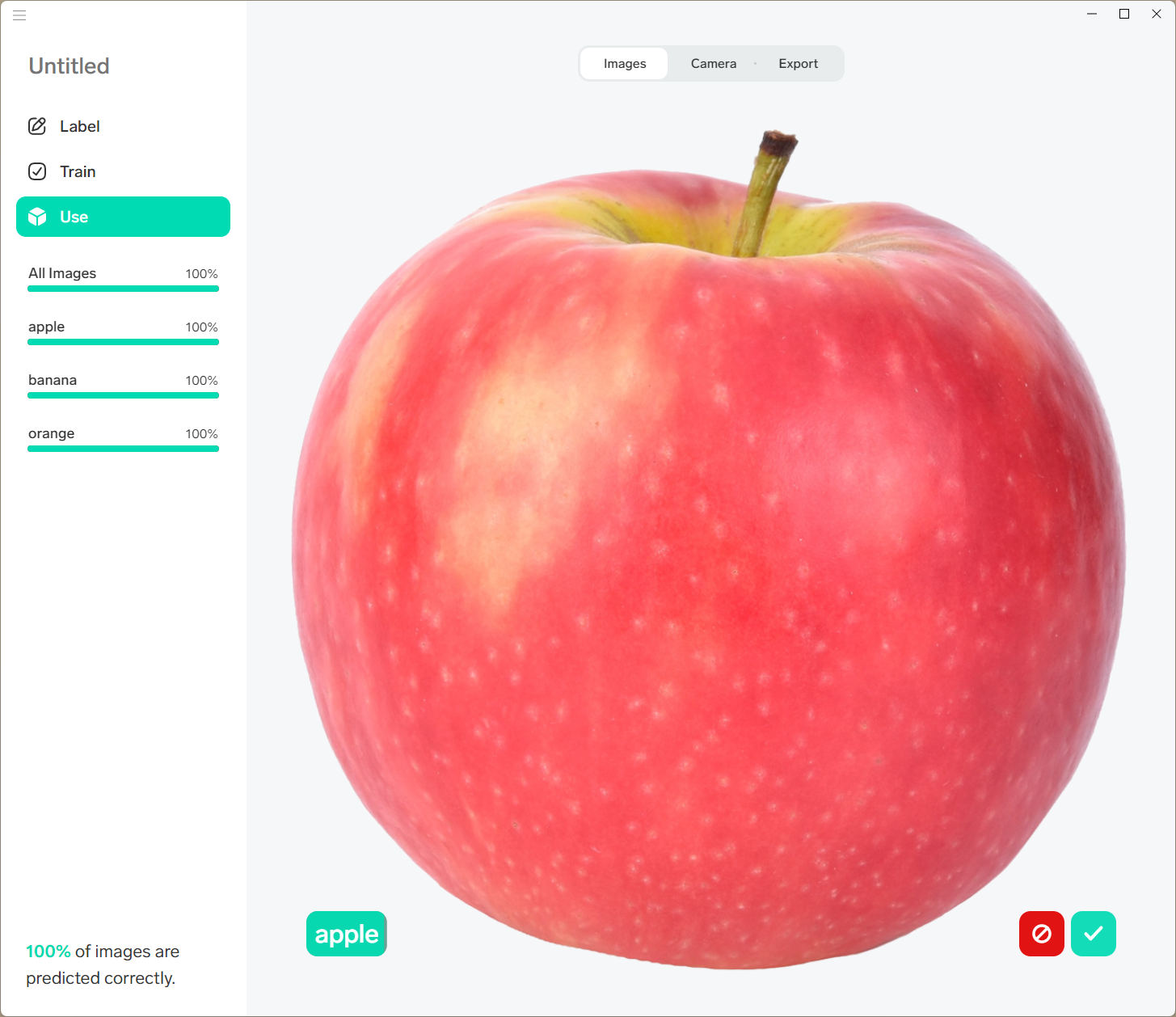

I tested my model with this sample image of an apple:

Lobe seems to detect it correctly:

I can now use the little green checkmark to confirm this prediction and add the image to my dataset. In a real project, you’d use your own images in the dataset.

Exporting the model to Power Platform

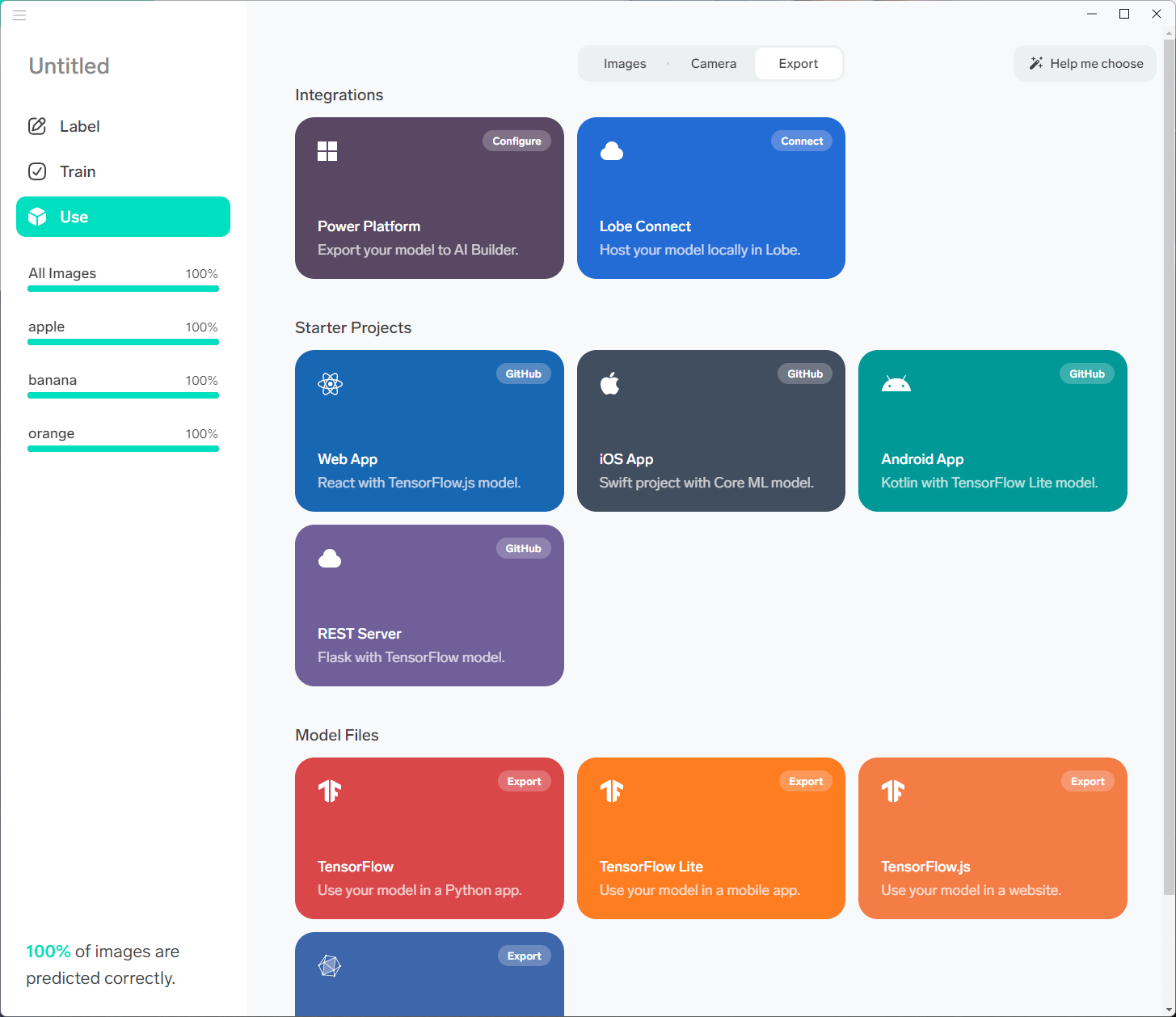

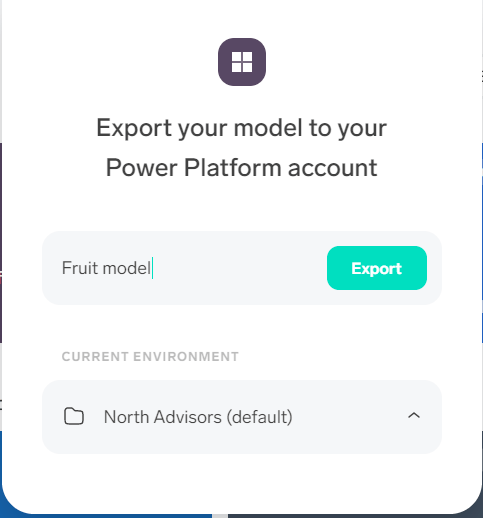

Now that our rudimentary model is done, it’s time to export it to Power Platform. Within Lobe, click Use > Export, and select Power Platform:

You’ll be asked to log in with your Power Platform account. Once you’ve logged in, you can choose which environment within your Power Platform tenant you’d like to use.

You can then export (or optimize & export, which is recommended) the model directly to that environment. This takes about a minute with the simple model.

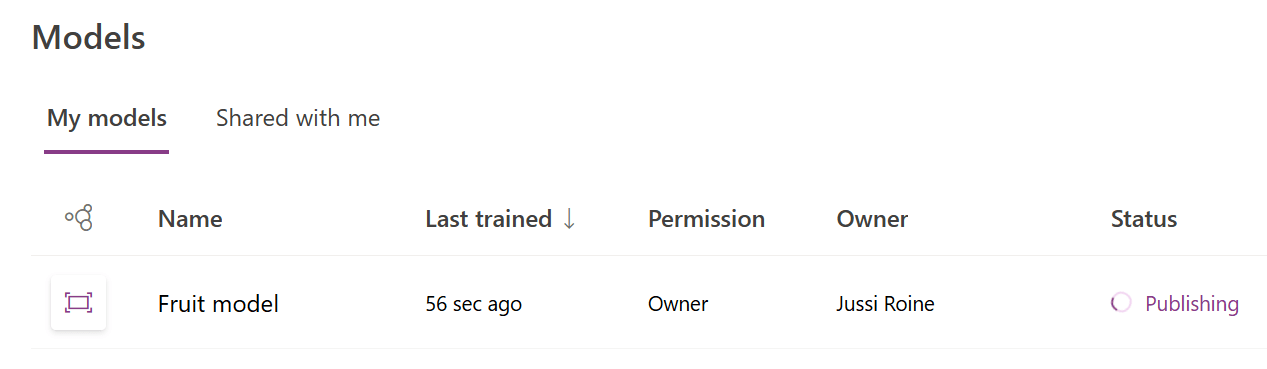

Next, open https://make.powerapps.com/, log in, select the correct environment, and then go to AI Builder > Models to verify your model is there.

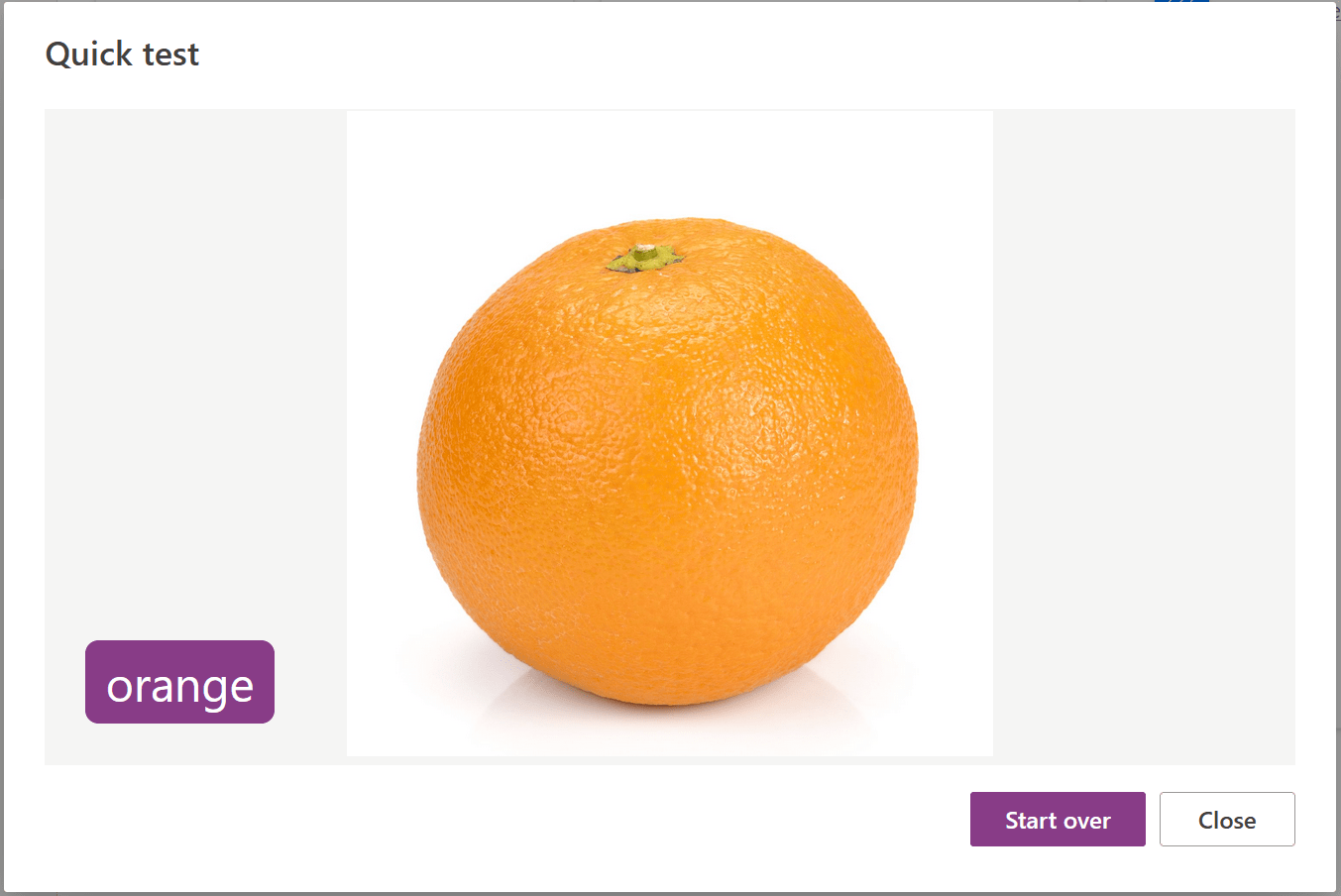

Publishing the model continues in the background and takes a few more minutes to complete. Once it’s done, you can try out the quick test within the model: upload an image to check that everything works. I used another sample image (not part of the model’s dataset) to verify my model is still working:

Yep, it sure seems to work with the quick test within Power Platform! The last thing to do is to build something that utilizes this model.

Building a Power Automate flow to use the Lobe-based model

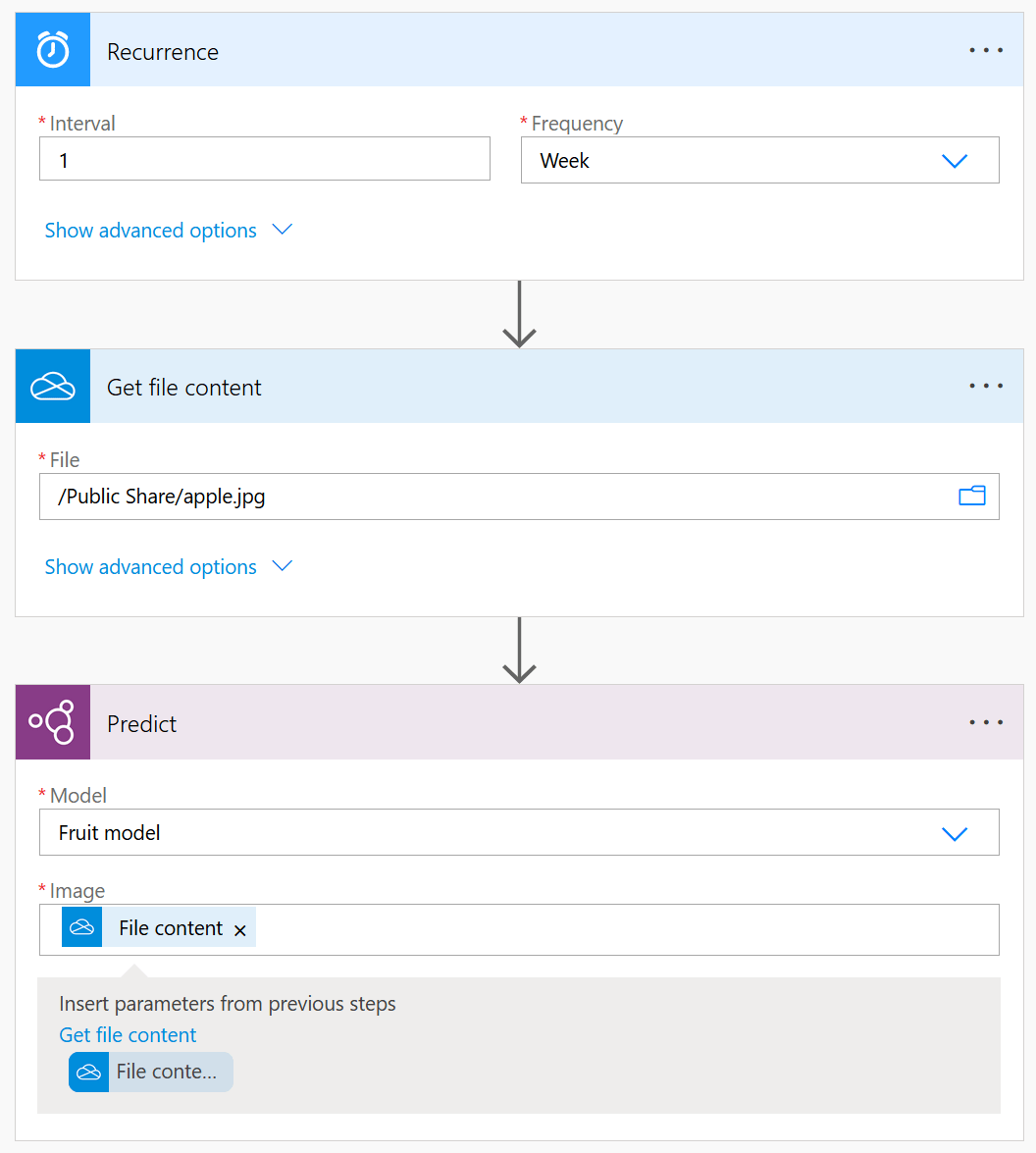

First, I prepared yet another test image and saved it to my OneDrive for Business, as it’s so easy to pick up files in the cloud from there.

The flow can be of any type; a scheduled flow is perhaps the easiest. The end-to-end solution looks like this:

When the flow runs, it picks up a sample image from OneDrive for Business. You could replace this with anything else, perhaps picking up files from Azure Storage or a database. The key is then to use the AI Builder > Predict action. This lets you select your custom AI Builder model, which in turn requires the image file (which you can now pass from OneDrive for Business).
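Conceptually, the flow is a two-step pipeline: fetch the image bytes from some source, then pass them to the model. The Python sketch below mirrors that shape outside Power Automate to show why the image source is interchangeable. `read_image`, `predict`, and `Prediction` are hypothetical stand-ins, not AI Builder APIs; in the real flow, the OneDrive for Business action plays the role of `read_image`, and AI Builder > Predict plays the role of `predict`.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Prediction:
    prediction: str    # mirrors the "Prediction" output of the flow run
    confidence: float  # mirrors the "Confidence" output, from 0 to 1


def run_flow(read_image: Callable[[], bytes],
             predict: Callable[[bytes], Prediction]) -> Prediction:
    """Fetch image bytes from any source, then hand them to the model."""
    image = read_image()
    if not image:
        raise ValueError("the image source returned no data")
    return predict(image)


# Hypothetical stand-ins: a fixed byte string instead of OneDrive for Business,
# and a fake model instead of AI Builder > Predict.
def fake_source() -> bytes:
    return b"\xff\xd8\xff"  # first bytes of a JPEG file


def fake_model(image: bytes) -> Prediction:
    return Prediction(prediction="apple", confidence=0.99)


print(run_flow(fake_source, fake_model))
# Prediction(prediction='apple', confidence=0.99)
```

Swapping OneDrive for Azure Storage or a database only changes the `read_image` side; the prediction step stays the same.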

That’s it. When you run this in a test, you see the predictions. My test image was of an apple, and the results are:

- Prediction: apple

- Confidence: 0.9999663829803467

You could now easily pick up the prediction, check that the confidence meets your comfort level (such as > 0.90), and act accordingly.
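In Power Automate, you’d typically express this with a Condition action that compares the Confidence output to your threshold. The same branching logic is sketched in Python below. The 0.90 threshold comes from the example above, while the function name and the "accept"/"review" outcomes are hypothetical placeholders for whatever your flow actually does next.

```python
CONFIDENCE_THRESHOLD = 0.90  # the example comfort level from the text


def decide(prediction: str, confidence: float,
           threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Return an action for one prediction: accept it, or route it for review."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"confidence must be between 0 and 1, got {confidence}")
    if confidence > threshold:
        return f"accept: {prediction}"
    return "review manually"


print(decide("apple", 0.9999663829803467))  # accept: apple
print(decide("orange", 0.62))               # review manually
```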

In closing

Lobe is insanely good, and with the ability to export models directly to Power Platform, it now fills the gap I described earlier. Keep in mind that using AI Builder and the Predict action requires an additional Power Platform license.