Replicating files between two Azure Virtual Machines: Considerations and options

Recently, I had a request from a customer. They have a design where two virtual machines are needed in Azure. One is intended for a classic “DMZ-style” approach, where select endpoints are exposed to select external users (just plain old HTTPS traffic). The other VM is an internal one that holds certain key data the first VM needs to access. The primary reason for this setup is that the two servers will run some proprietary non-cloud-native software. Server A is the internal one, and server B is the external-facing one.

The need is to copy – frequently – files from \\A\d$\temp to \\B\d$\temp. The files are randomly named but follow a fairly logical naming convention. Server A will generate these files, and server B will then serve data based on these files to external users (not directly, but through a web app).

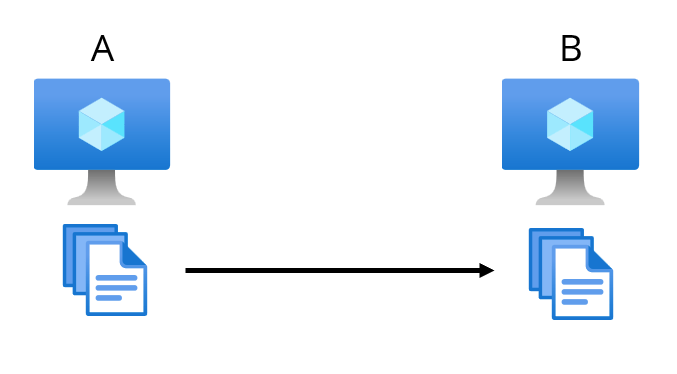

Here’s a logical diagram of the requested solution:

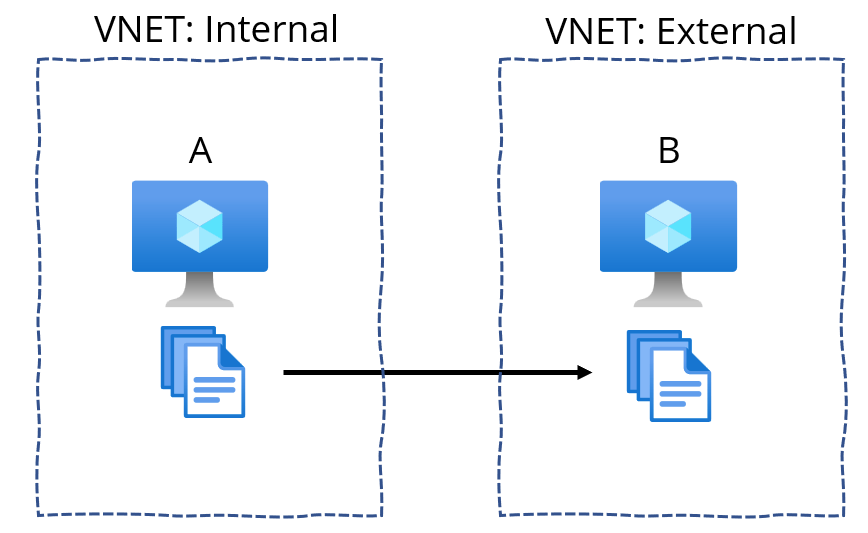

Both servers (A and B) run Windows Server 2016, and they are configured as B2ms-class VMs (2 virtual CPUs, 8 GB of RAM, and very affordable to run). At this point, it was unclear how the two servers were connected. Later I found out that server A is in a different Virtual Network from server B.

Thinking about the options

First things first – I don’t want server B to fetch anything from server A. This would require me to open traffic at the VNET level towards the internal services. That internal VNET communicates quite actively with other internal services in Azure and on-premises via a dedicated IPsec tunnel.

Ideally, server A pushes whatever it generates to server B. I quickly listed my options. While doing this, I also realized that this is a temporary solution that won’t run forever, so there is no point in building something that takes days to configure.

Thus, the options:

- Schedule a simple XCOPY-style script on A, that copies files to B (and allow traffic from the source IP of A towards B)

- Create a Logic App that pings A, picks up any files, and copies them to B

- Create a WebJob that does the same, perhaps compiled as an .EXE

- Use Azure Automation to do the same

Option #1 is the classic IT Pro solution. It “just works,” but I’d still like to avoid direct traffic from A to B. Options #2, #3, and #4 are virtually the same as each other, each using a different engine to move the files and thus bypassing the need to fiddle with VNET restrictions.
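To make option #1 concrete, here is a minimal sketch of a one-way push in Python rather than XCOPY. The function name `push_new_files` and the "same size and not older" rule for skipping files are my own assumptions for illustration; the actual script could just as well be a batch file or PowerShell.

```python
import shutil
from pathlib import Path
from typing import List


def push_new_files(source: Path, target: Path) -> List[str]:
    """Copy files from source to target when they are missing or changed."""
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in sorted(source.iterdir()):
        if not src.is_file():
            continue  # this sketch ignores subfolders
        dst = target / src.name
        if dst.exists():
            s, d = src.stat(), dst.stat()
            # Assumption: same size and a target that is not older
            # (compared at whole-second precision) means "already copied".
            if s.st_size == d.st_size and int(d.st_mtime) >= int(s.st_mtime):
                continue
        shutil.copy2(src, dst)  # copy2 also preserves the modification time
        copied.append(src.name)
    return copied
```

On server A this could be run on a schedule (Task Scheduler, for example) as `push_new_files(Path(r"D:\temp"), Path(r"\\B\d$\temp"))`, using the paths from the requirement above. It only ever writes towards B, which matches the push direction I wanted.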

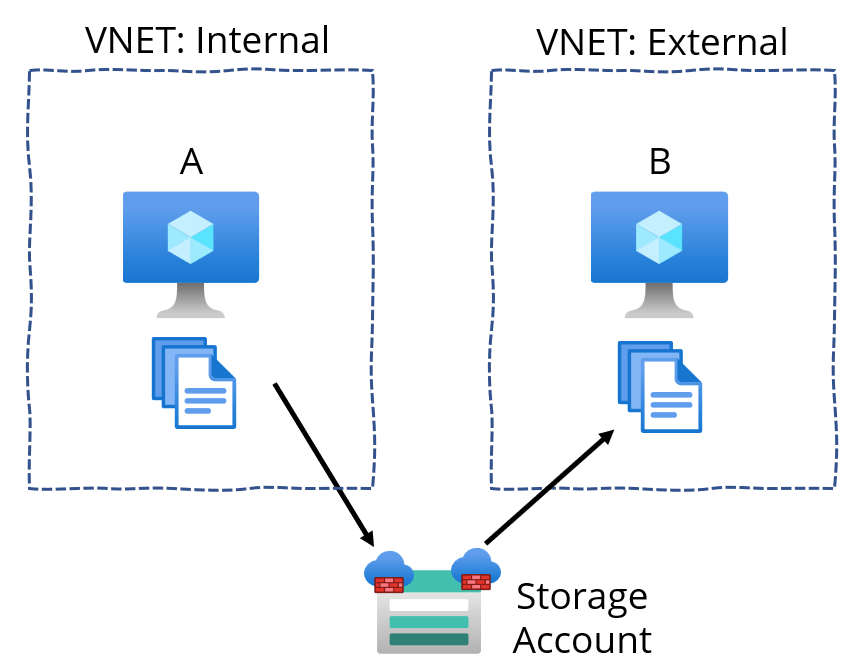

I was about to start building an ad-hoc Logic App to try this out, but then I realized there is another option (and obviously, plenty of others I’m not listing here). What if I add a dedicated Storage Account and use the firewall option to only allow traffic from the two VNETs? This way, server A could copy the files as Blobs, and I could also more easily monitor the process through metrics and alerts.

Redefining my earlier diagram with this option:

Testing the Storage Account-based option

I started to like this approach. The Storage Account provides more flexibility, and the built-in firewall seemed like an easy way to allow traffic only from the two VNETs, while not allowing direct VNET-to-VNET traffic.

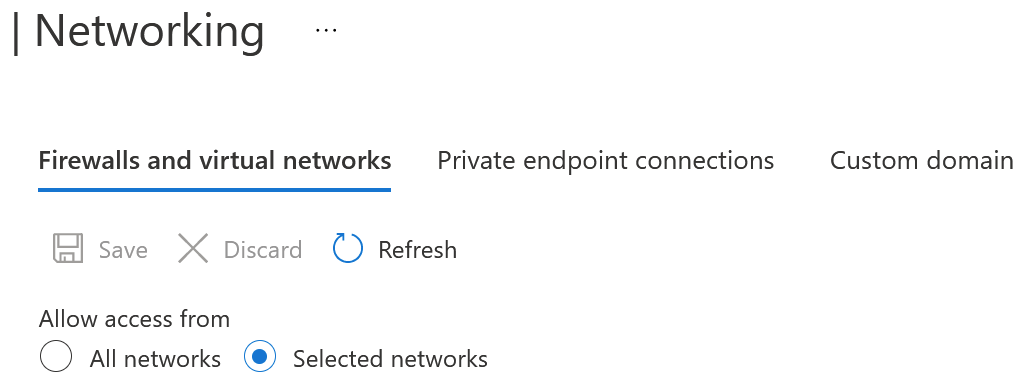

Testing this was embarrassingly easy. I provisioned a new Storage Account and, under Networking, chose to allow access from the two VNETs only.

I then listed the two VNETs. Once I created a file share on the Storage Account, I could mount (map) the Storage Account as a network drive. Azure Portal even generates a pretty decent PowerShell script for this, which makes the drive persist through reboots.
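Before mapping the drive, it can be worth confirming that the VM can reach the share at all. Azure file shares are mounted over SMB (TCP port 445) at `\\<account>.file.core.windows.net\<share>`. The sketch below builds that path and tests the port; the helper names and the account and share names in the usage note are hypothetical, not taken from the customer environment.

```python
import socket


def azure_files_unc(account: str, share: str) -> str:
    r"""Build the UNC path \\<account>.file.core.windows.net\<share>."""
    return rf"\\{account}.file.core.windows.net\{share}"


def smb_reachable(host: str, port: int = 445, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the SMB port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or name not resolved: treat all as unreachable.
        return False
```

For example, `azure_files_unc("examplestorage", "replica")` gives `\\examplestorage.file.core.windows.net\replica`, and `smb_reachable("examplestorage.file.core.windows.net")` should return `True` from a VM that is allowed through. Note that this only checks that the port answers; the storage firewall and the account key still decide whether the mount itself succeeds.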

Closing thoughts

Someone reading this might be going, “but Jussi, why didn’t you utilize a {fancy yet modern and expensive solution} here?” Perhaps that would have been fun, but the intention here was to build a solution that’s easy to maintain and even easier to remove once it’s no longer needed. It’s also very cost-effective, as the files are tiny.