Getting started with Azure Database for PostgreSQL

For as long as I can remember, the default datastore I’ve chosen has always been SQL Server, or Azure SQL depending where I need to deploy my solution. In recent years, I’ve started to expand my understanding of other platforms – including Mongo DB (see my adventures with containers and Mongo DB here), Azure Table Storage (a nice story here on how that ended up) but I’ve yet to try out PostgreSQL. I think I’ve installed it perhaps once or twice for on-premises in 2008.

What is PostgreSQL?

It’s a free relational database platform, that has an elephant as their icon.

![The World's Most Advanced Open Source Relational Database[1]](https://upload.wikimedia.org/wikipedia/commons/thumb/2/29/Postgresql_elephant.svg/220px-Postgresql_elephant.svg.png)

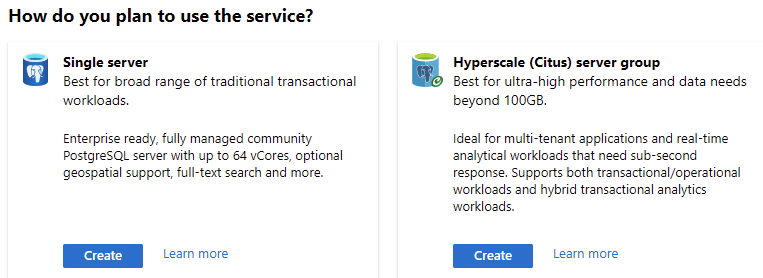

It also has a long history, starting from 1996 and it’s still being developed and updated. Based on Stack Overflow’s yearly developer survey, in 2019 PostgreSQL was the second most popular database solution:

MySQL was still number one, and PostgreSQL squeezed in just in front of Microsoft SQL Server.

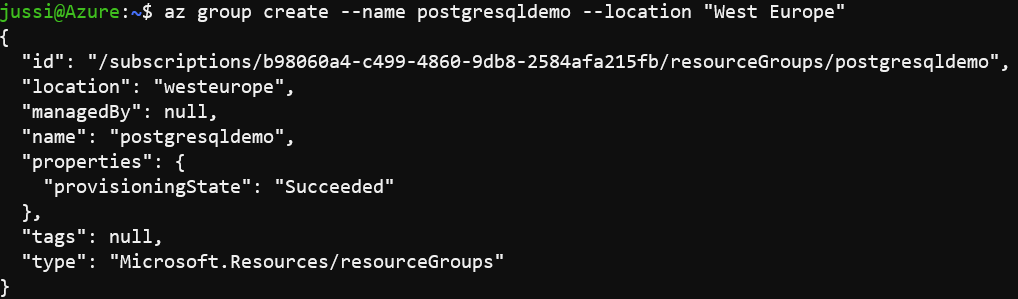

PostgreSQL is available for Azure, since May 2017. It comes in two editions:

A single server is, well, a single server for the usual needs. Hyperscale (Citus) is a group of servers with high availability and high throughput. For Hyperscale (Citus), database size can grow beyond 10 GB, and query latency of 100 ms or less is possible.

You can find all documentation for Azure Database for PostgreSQL here. To download them all as one big, searchable and portable PDF, click here.

Provisioning Azure Database for PostgreSQL in single server setup

The best way to learn is often doing. So I opened up Windows Terminal and then Azure Cloud Shell with Azure CLI to see how I can provision Azure Database for PostgreSQL through the command line.

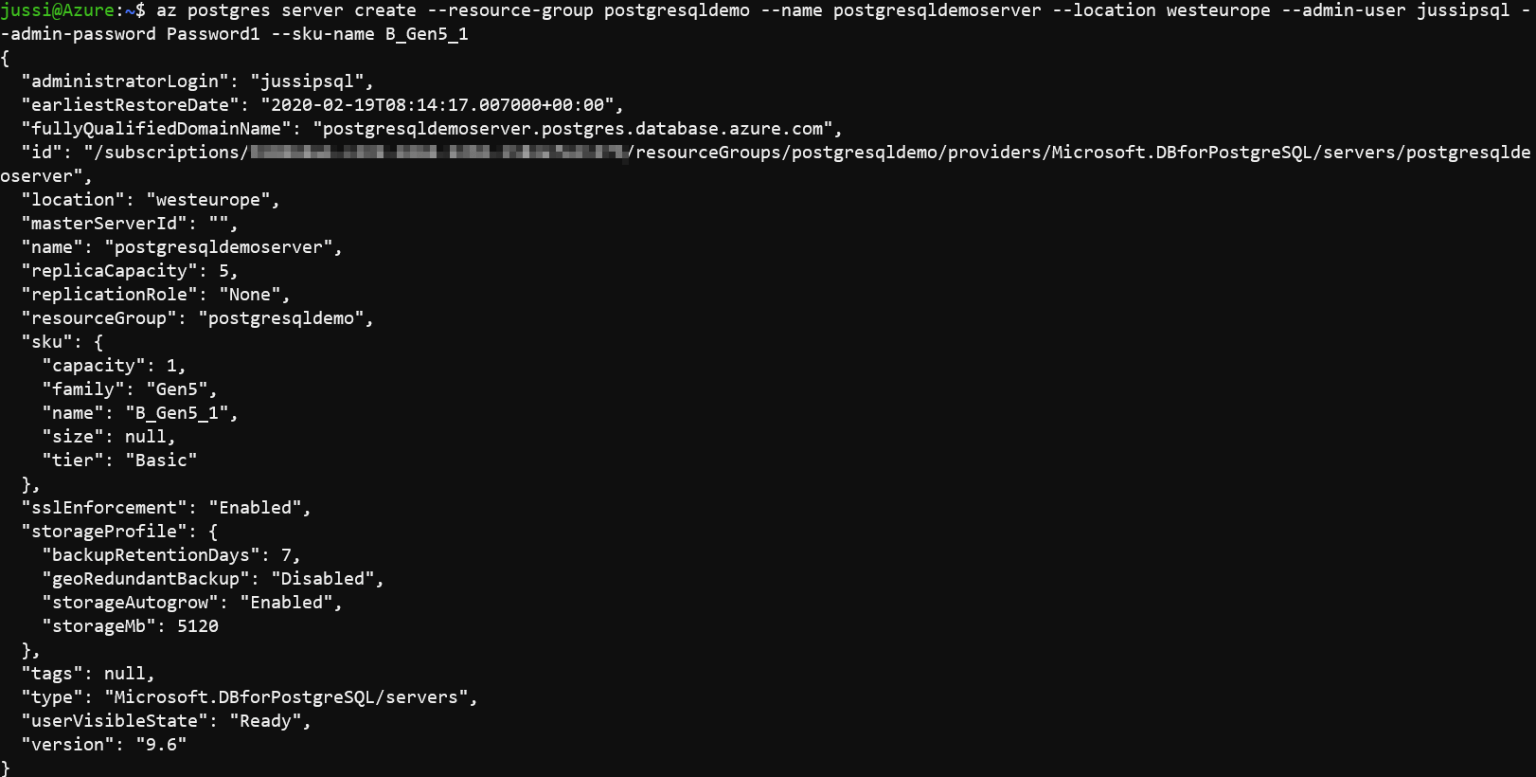

To begin, I’ll create a new resource group to hold all my assets:

az group create --name postgresqldemo --location "West Europe"

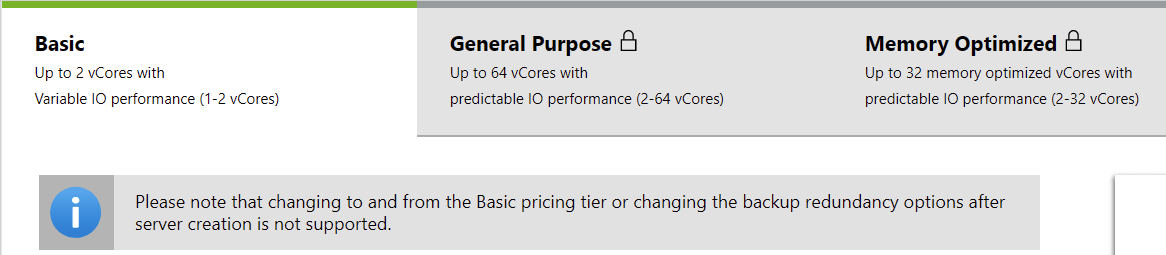

And then it’s as easy as creating the instance for PostgreSQL. I do need to specify the compute generation, which defines the performance for my instance. Available options include Basic, General Purpose and Memory Optimized families, and all of these have Gen 4 and 5, expect Memory Optimized which only has Gen 5. To understand which compute generation to choose, I’ll need to check the pricing also. The bulk of the cost is formed from the compute, and additional costs are incurred with storage and backup. Check the up-to-date pricing here. At the time of writing, the rough price points per month are:

- Basic, Gen 5: 24-49 € ($25-$52)

- General Purpose, Gen 5: starting from 86 € going all the way to 2783 € ($92 to $3000)

- Memory Optimized, Gen 5: starting from 115 € going all the way to 1855 € ($124 to $2004)

I’ll start with the cheapest option, as I don’t know any better what sort of performance I’ll need – thus, I’ll select Basic, Gen 5.

To provision a new PostgreSQL instance using the Basic, Gen 5 (1 vCore option), run the following command:

az postgres server create --resource-group postgresqldemo --name postgresqldemoserver --location westeurope --admin-user jussipsql --admin-password Password1 --sku-name B_Gen5_1

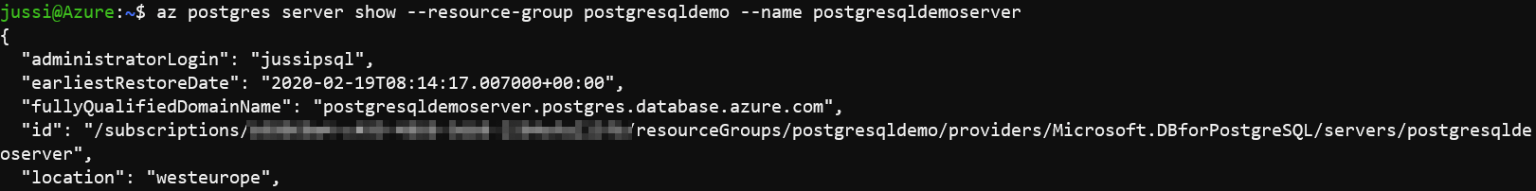

This took about two minutes to complete. I can now verify the instance has been provisioned:

az postgres server show --resource-group postgresqldemo --name postgresqldemoserver

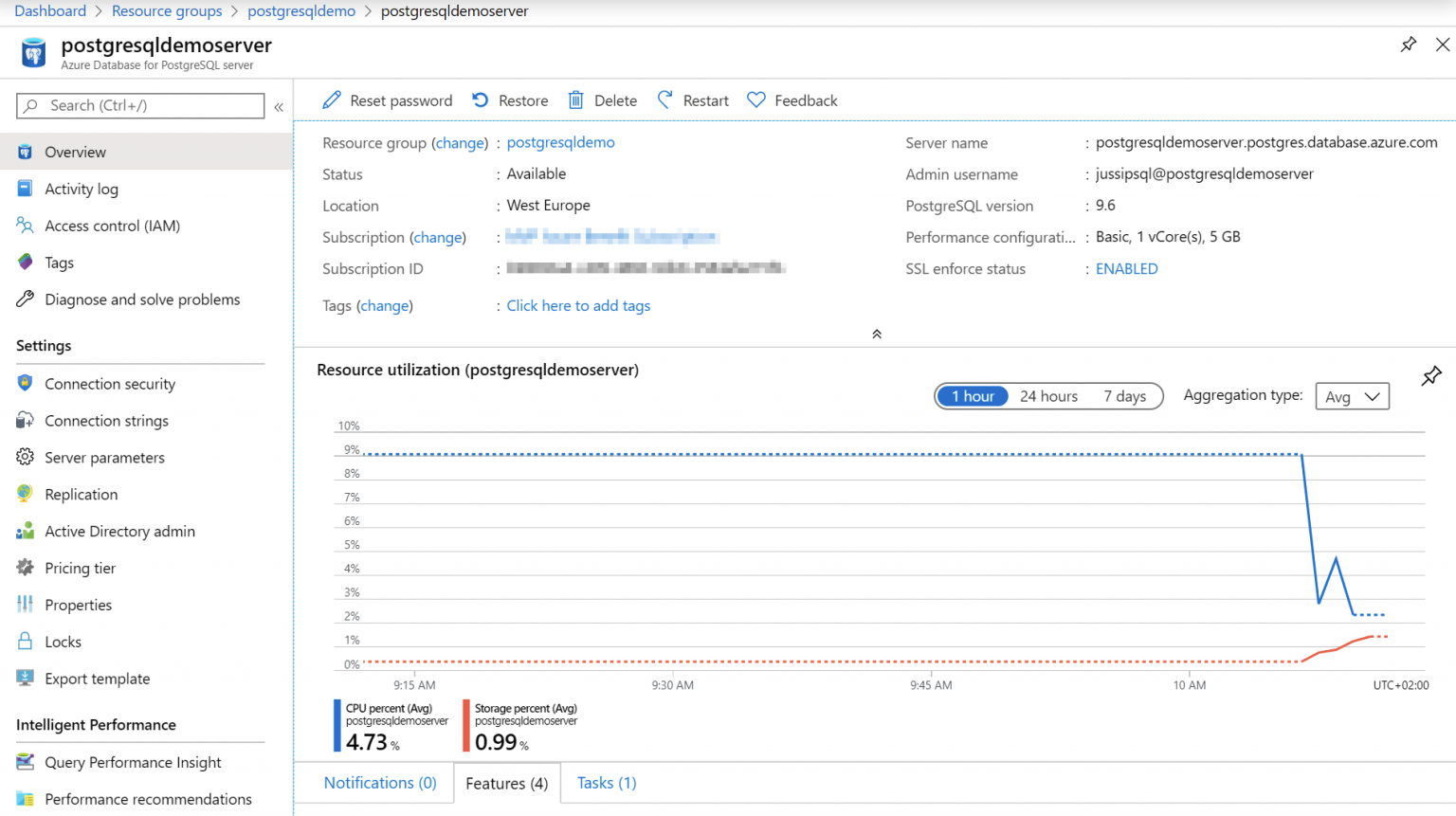

To view the instance through Azure Portal I get a better understanding of the available options and configuration settings.

It’s worth noting here that scaling up from Basic to General Purpose or Memory Optimized is not supported. You can scale between General Purpose and Memory Optimized.

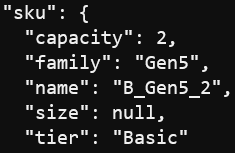

To change any of the settings through Azure Cloud Shell (and Azure CLI), use az postgres server update. As an example, if you want to change from Basic, Gen 5, 1 vCore to Basic, Gen 5, 2vCore, you’d run:

az postgres server update --resource-group postgresqldemo --name postgresqldemoserver --sku-name B_Gen5_2The –sku-name is formed using the following shorthand: {pricing tier}_{compute generation}_{vCores} – thus, Basic, Gen 5, 2 vCores becomes B_Gen5_2. Once scaling is complete, the resulting JSON will tell you the new pricing tier:

Managing Azure Database for PostgreSQL using Azure Portal

Now that we have our PostgreSQL instance running, it’s time to take a quick lap around the tools we have at our disposal for managing PostgreSQL. Open Azure Portal, and navigate to your PostgreSQL instance first.

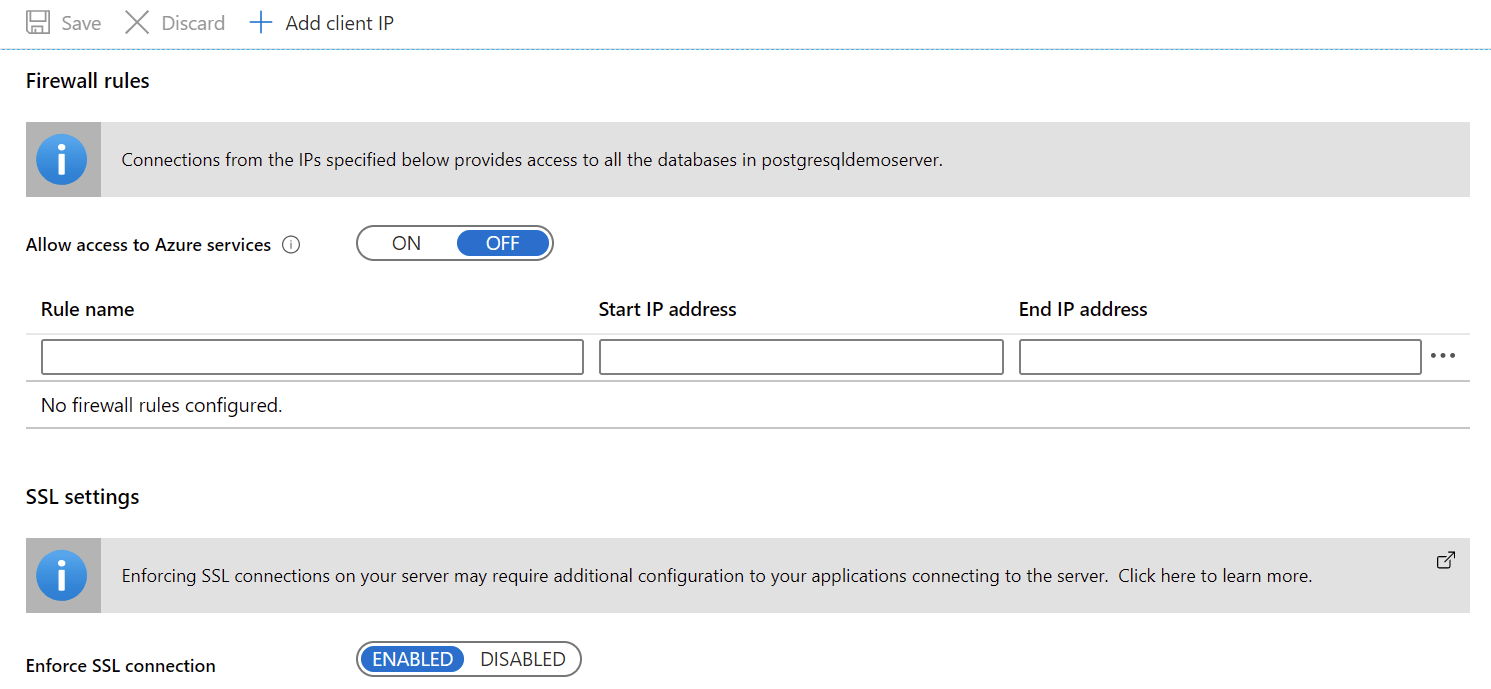

Firewall rules are enabled by default for PostgreSQL, so it’s often a good idea to add your IP address as an allowed IP, should you want to connect with the instance remotely. You can do this under Connection security, and then clicking on Add client IP in the top navigation. This is also required if you plan on using psql or similar command-line tools to manipulate your databases.

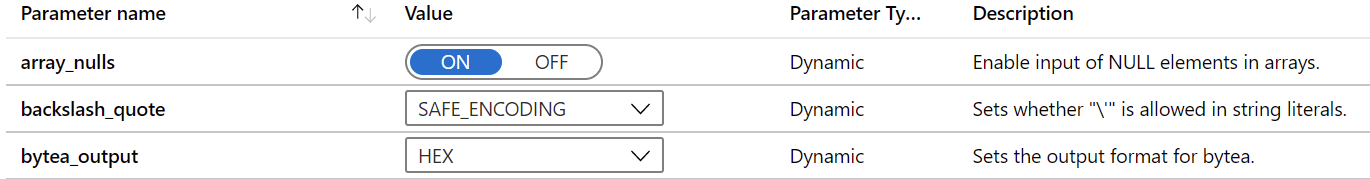

Under Server parameters, you can manipulate all the dozens of server parameters PostgreSQL exposes for you. This is obviously also possible through Azure CLI and the command line with az postgres server configuration set.

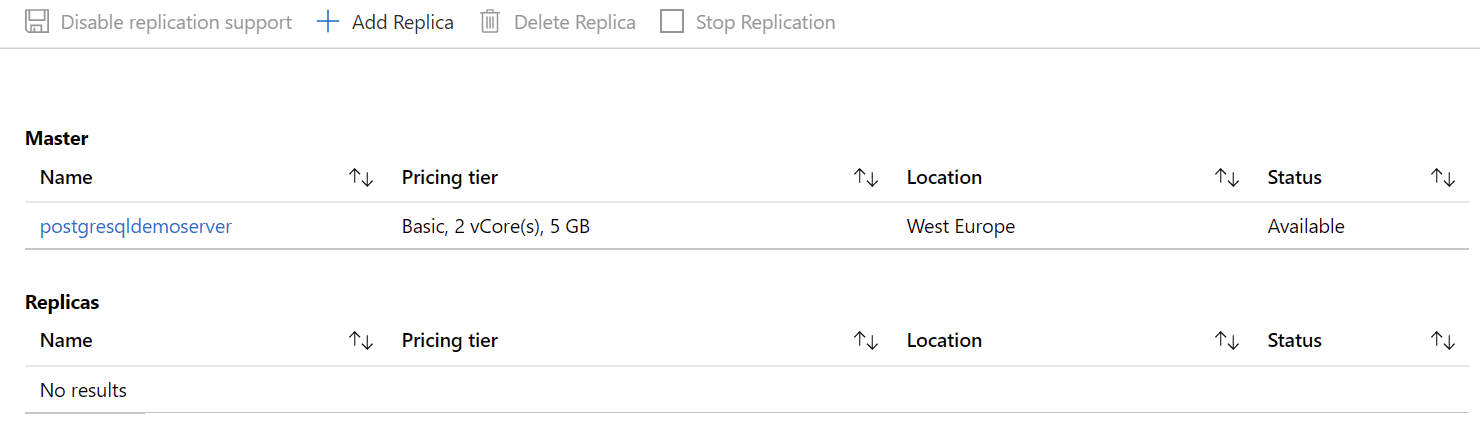

Under Replication, you can add replicas to your PostgreSQL instance. This obviously requires you’ve provisioned another instance somewhere – preferably in different Azure geo.

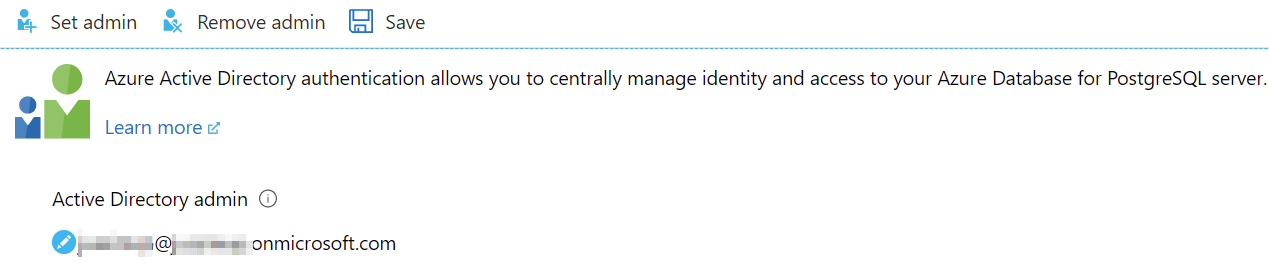

Under Active Directory admin, you can define to use Azure Active Directory identities, as opposed to ‘just’ using PostgreSQL local accounts.

Using psql to connect to Azure Database for PostgreSQL

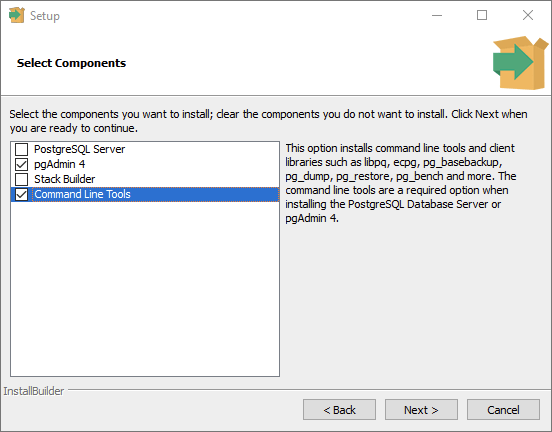

I wanted to create a new database within my fresh PostgreSQL instance. I wanted to try out the native PostgreSQL command-line tools, which are part of the install package (download). As I have no need to install PostgreSQL locally on my workstation, I just chose to install the command line tools, and pgAdmin, which is a graphical management tool.

I’ve never used psql, but as with all command-line tools, it’s a trial and error process.

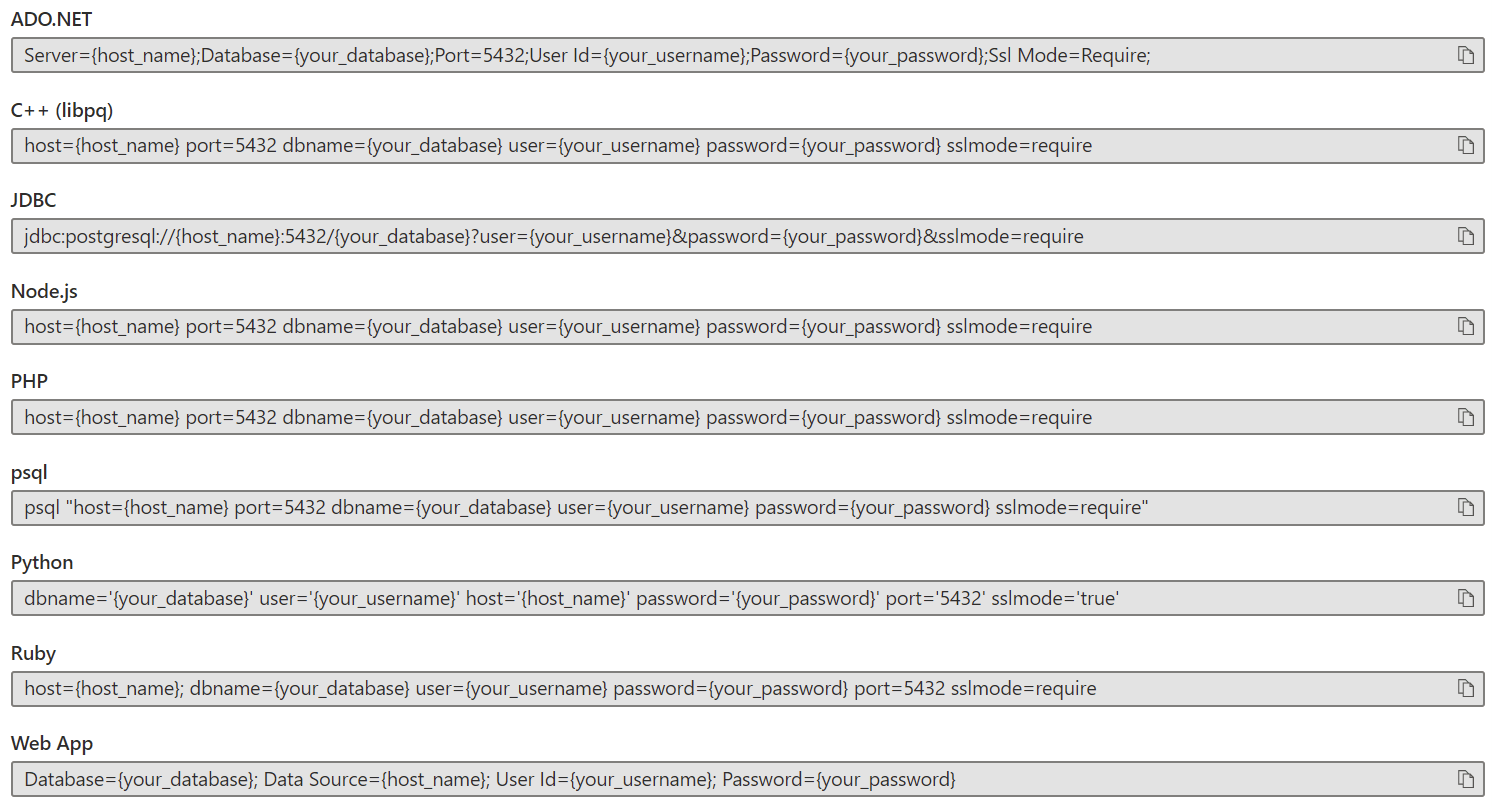

After installation is complete, open Windows Terminal (or just plain old cmd.exe) and navigate to C:\Program Files\PostgreSQL\12\bin. This is where psql.exe resides in, and it’s not in the PATH environment variable by default. To connect with our instance we’ll need the connection string – which is easily visible through Connection strings in Azure Portal.

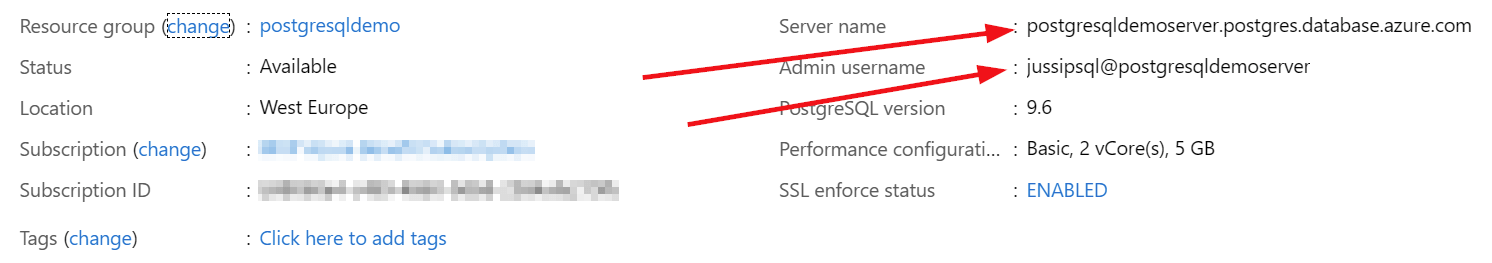

It even has a string crafted for psql! Too bad it’s just a template so I still need to replace the few variables here, which I can fetch from my earlier scripts, or by checking the Overview blade for my PostgreSQL instance.

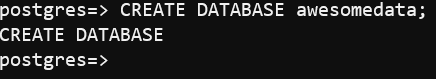

To connect with psql, I’ll thus need to run the following command:

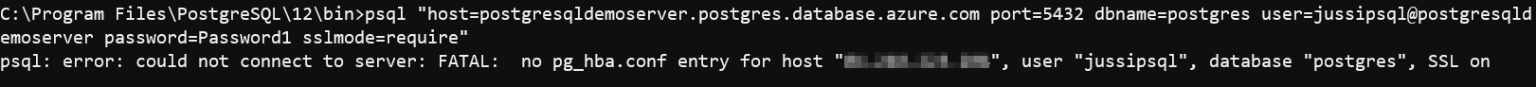

psql "host=postgresqldemoserver.postgres.database.azure.com port=5432 dbname=postgres user=jussipsql@postgresqldemoserver password=Password1 sslmode=require"This fails on the first try, as I didn’t configure the firewall rule for my local IP address.

After fixing this, I can connect with the same command:

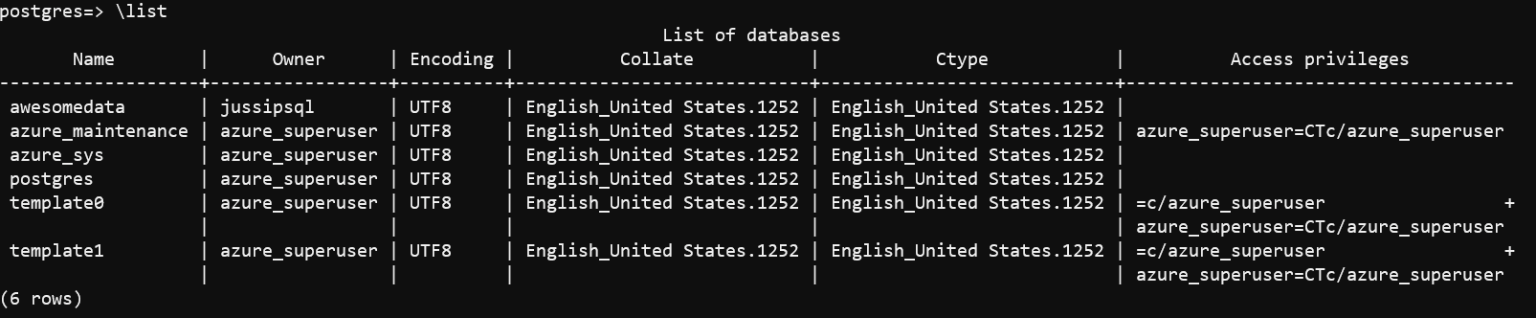

I’ve connected with the default database called postgres. I’ll now need to create my own database, which I shall name awesomedata. To do this, run the following command:

CREATE DATABASE awesomedata;

To list all databases, I’ll use \list

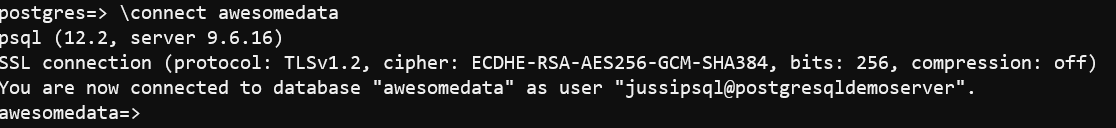

And now I can connect to my new database with \connect awesomedata

The database is obviously empty, so I’ll create a new table, add a new column and add a new row based on this simple schema.

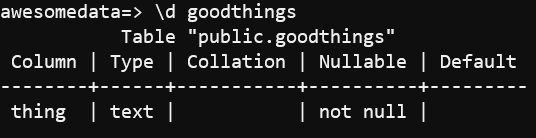

To create the table and a column in it, I’ll use the following CREATE TABLE statement:

CREATE TABLE goodthings(

thing TEXT NOT NULL);

To display my table, I can use the shorthand command \d goodthings:

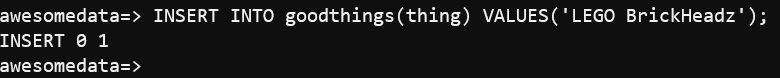

Let’s insert a row in the newly created table:

INSERT INTO goodthings(thing) VALUES('LEGO BrickHeadz');

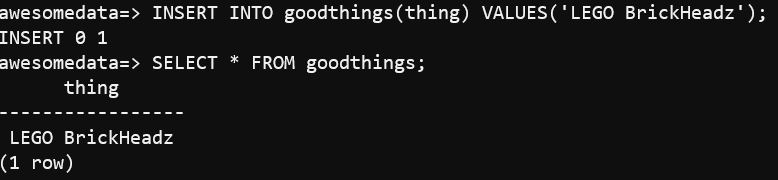

And finally, we’ll verify the data actually committed to the database by querying for it:

SELECT * FROM goodthings;

Monitoring Azure Database for PostgreSQL

Now that we’ve generated some content to our database, and utilized the service a bit it’s time to see how to monitor the service effectively. Thankfully, Azure is pretty great when it comes to monitoring and Azure Database for PostgreSQL leverages these investments automatically.

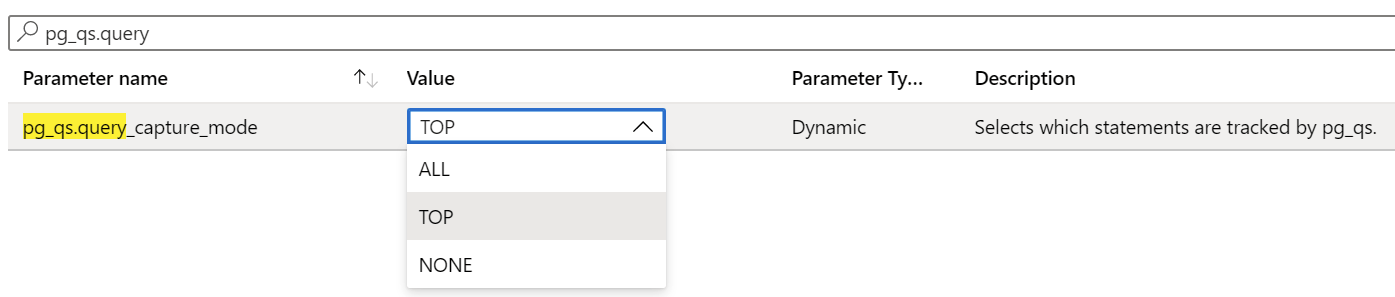

Data collection is not enabled by default, so we’ll enable that first. You can do it easily through Azure Portal, and as it is a one-time setting, it probably makes most sense. You could also use az postgres server configuration set. Under Server parameters, enable pg_qs.query_capture_mode to TOP first. This parameter initiates the collecting of query performance data.

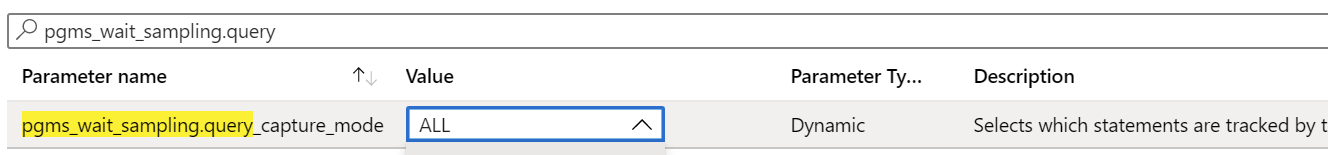

And also set pgms_wait_sampling.query_capture_mode to ALL to start collecting wait statistics:

Remember to click Save in the top navbar!

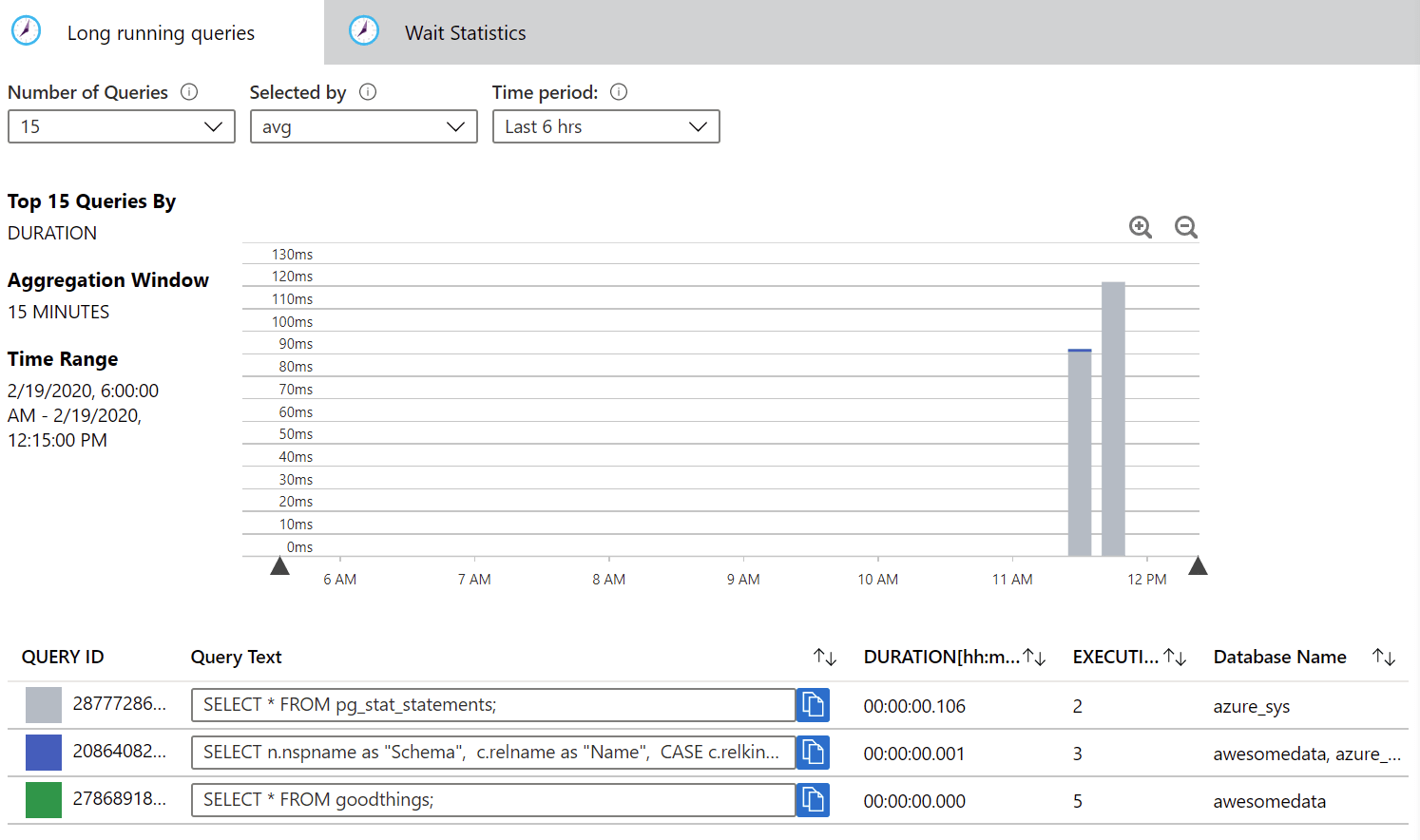

Next, click on Query Performance Insight to view both your long-running queries and wait statistics. These allow you to view the queries to understand more about its performance.

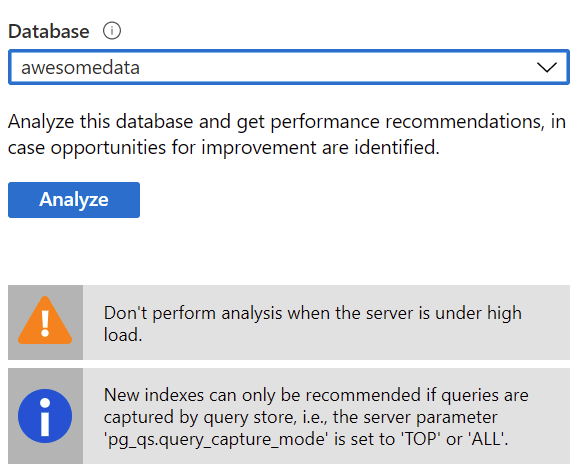

Under Performance recommendations, you can analyze a database to receive recommendations on its performance.

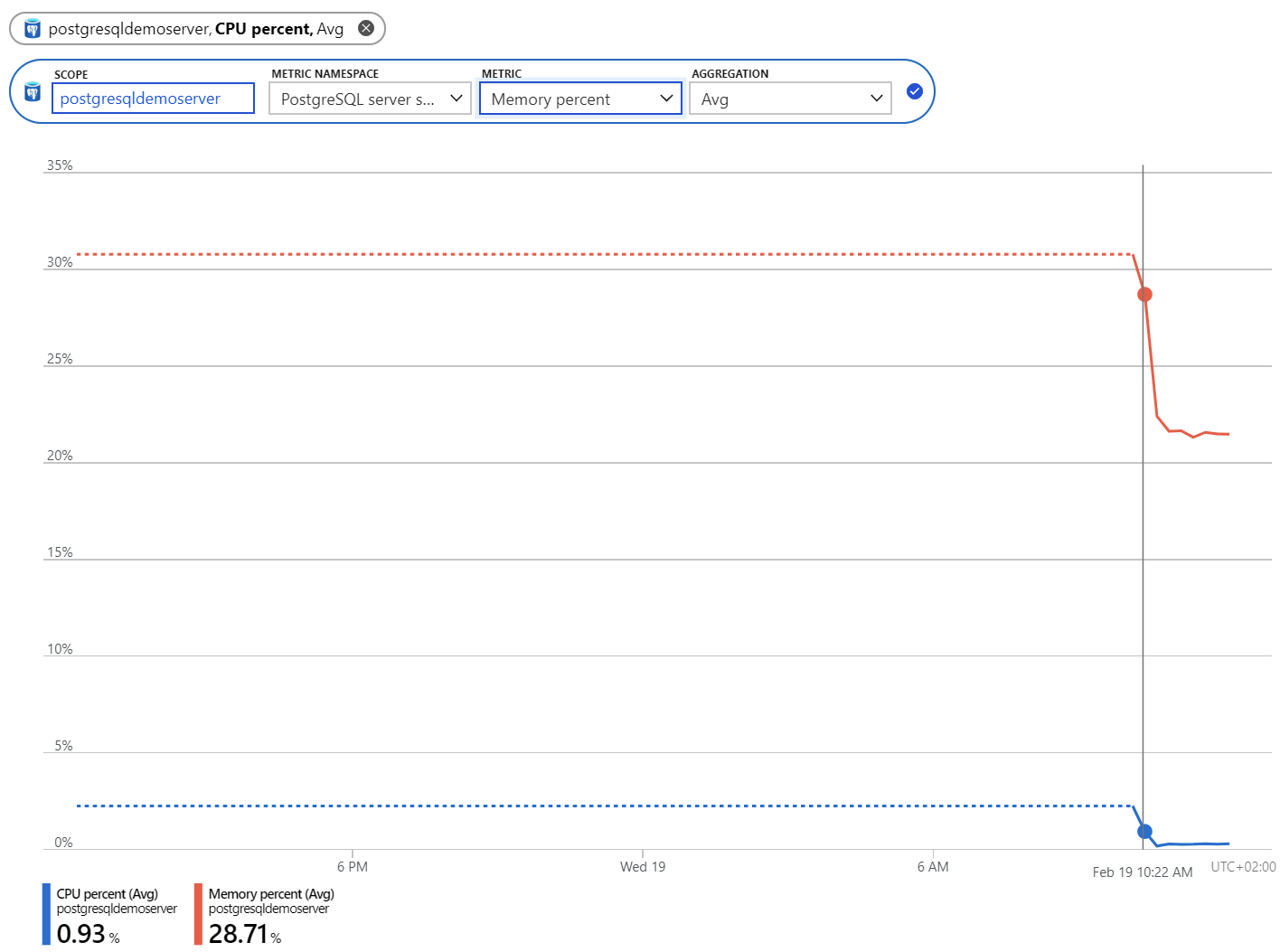

Based on your PostgreSQL instance’s activity you can also view metrics under Metrics. This view allows you check performance data in realtime, and also to set alerts based on a pre-defined criteria – such as CPU load, memory consumption and storage limit.

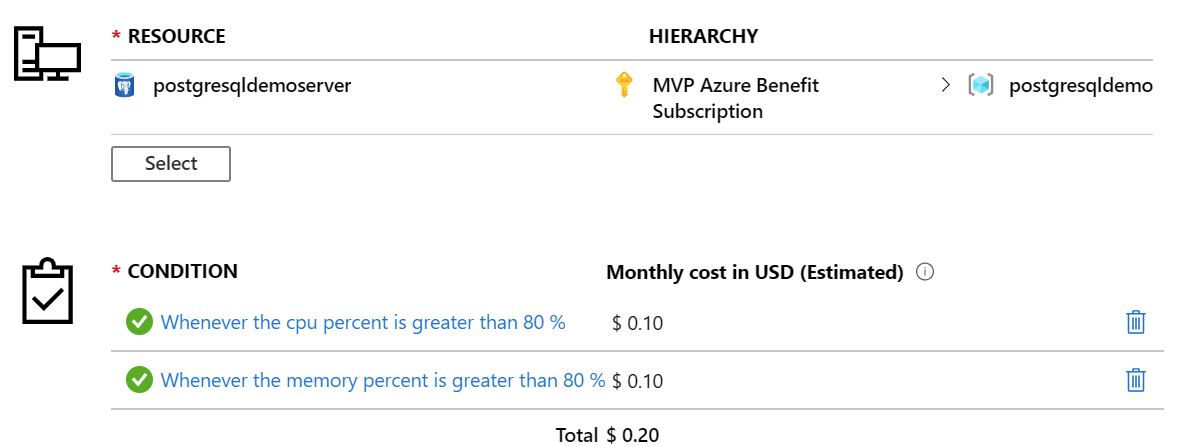

To set an alert, click on New alert rule. The values for the alert are prepopulated based on your previous choices. I’ve added two rules to alert me – when memory or CPU usage is over 80% (over a 5 minutes average), it will trigger an alert.

You can then define an action group, that triggers an action – such as sending an email, filing a ticket or executing a Logic App-based orchestration.

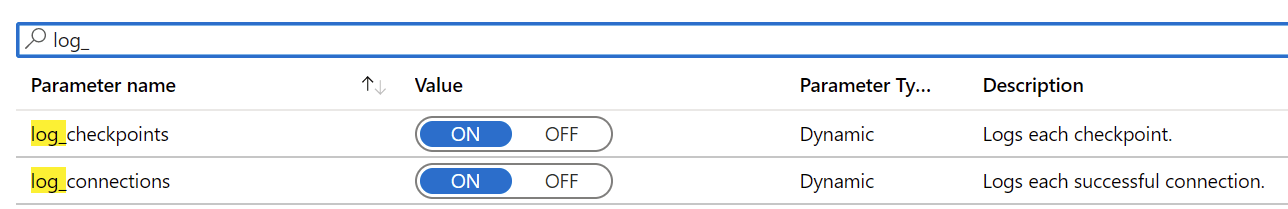

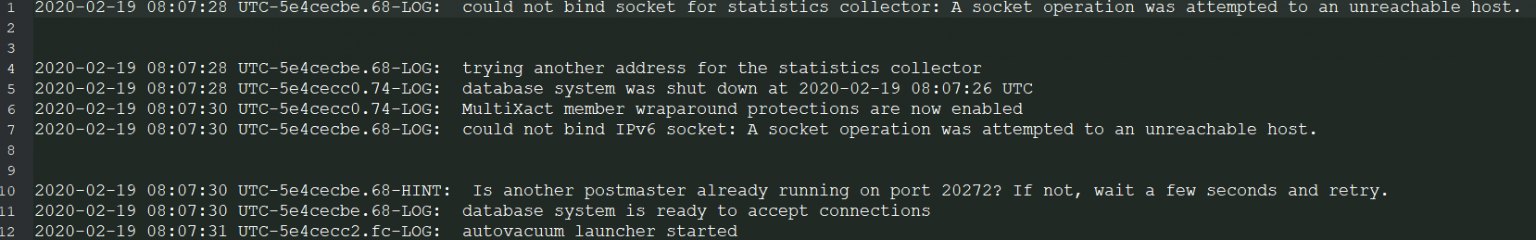

Finally, let’s enable logging so that we can later trace any activity. This is also done through Server parameters, by enabling log_checkpoints and log_connections. By default, these two values should be set to ON.

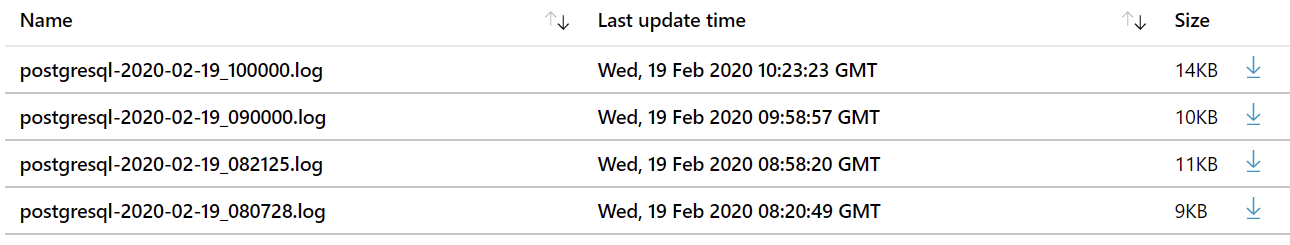

You can set the log retention from it’s default (1 days) to a maximum of 7 days with log_retention_days. To access the logs, click Server logs under Monitoring.

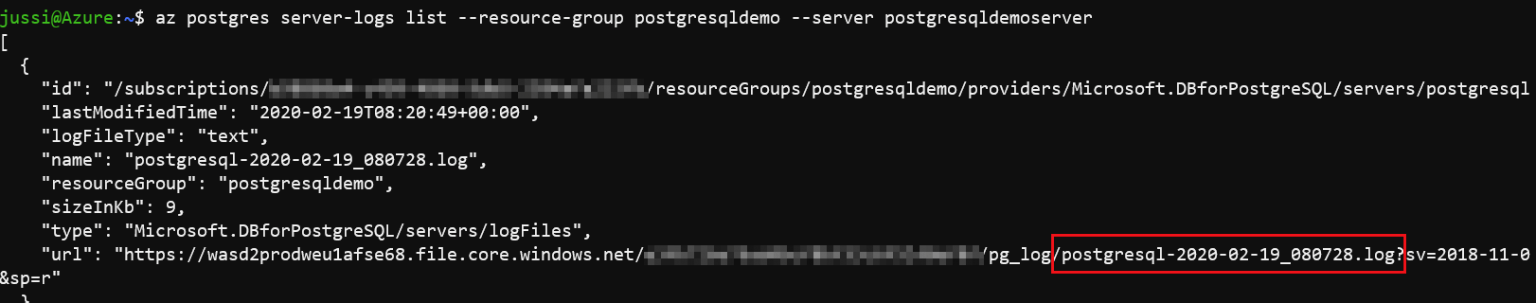

To list logs from the command-line, use the following syntax in Azure CLI:

az postgres server-logs list --resource-group postgresqldemo --server-name postgresqldemoserver

To download this log locally, use the following Azure CLI syntax:

az postgres server-logs download --name postgresql-2020-02-19_080728.log --resource-group postgresqldemo --server-name postgresqldemoserver

In summary

It was refreshing to see how Azure Database for PostgreSQL works and figuring out how to get started with this great database service. I quickly grew to like PostgreSQL’s simplicity and straightforwardness, so I’ll be sure to employ this service in a future project!