Building a monitoring solution for Power Platform events using PowerShell, C#, Azure Log Analytics, and Azure Sentinel

I’m writing this from the beautiful and sunny city of Prague, where I’m attending the European SharePoint, Office 365 and Azure Conference. It’s a four-day event with hundreds of sessions and some very insightful keynotes.

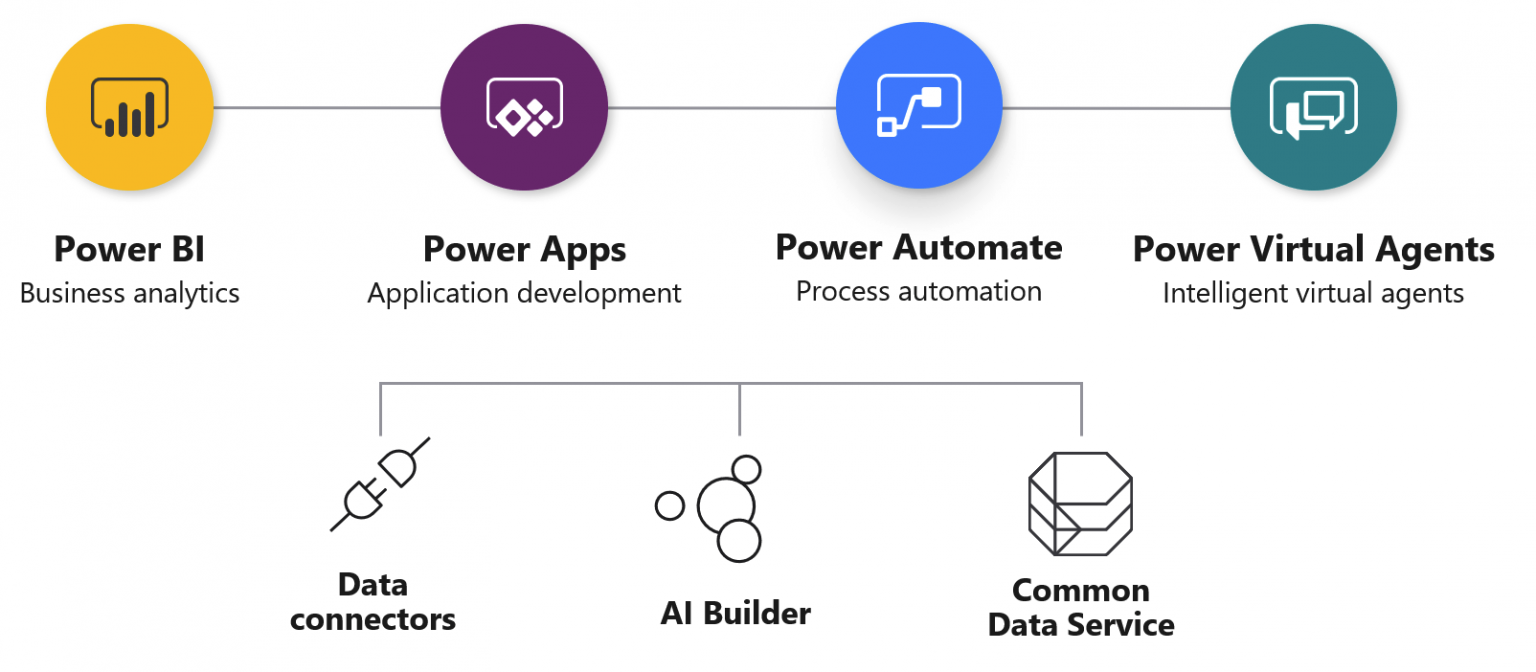

I’m delivering two sessions here, one of which was on monitoring and managing Power Apps and Power Automate. As you probably know, Power Automate used to be called Microsoft Flow. Both are part of the Power Platform, which consists of Power BI, Power Apps, Power Automate and Power Virtual Agents.

This image from Microsoft visualizes the platform neatly:

As part of the presentation I delivered with Thomas Vochten, I built a great demo that I wanted to write more about, as I spent quite a bit of time getting it to work.

The problem

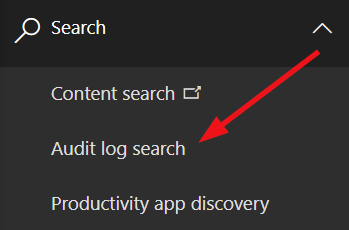

Building monitoring and governance around the Power Platform typically includes auditing events in Office 365. You can access the audit logs through the Office 365 Security & Compliance Center at https://protection.office.com, under Search > Audit log search.

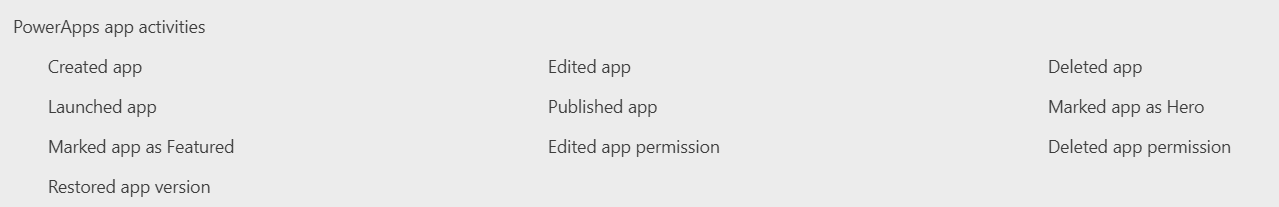

In audit log search, from Activities select all corresponding categories for Power Platform, such as Power Apps and Power Automate (still showing as ‘Flow’) and click Search:

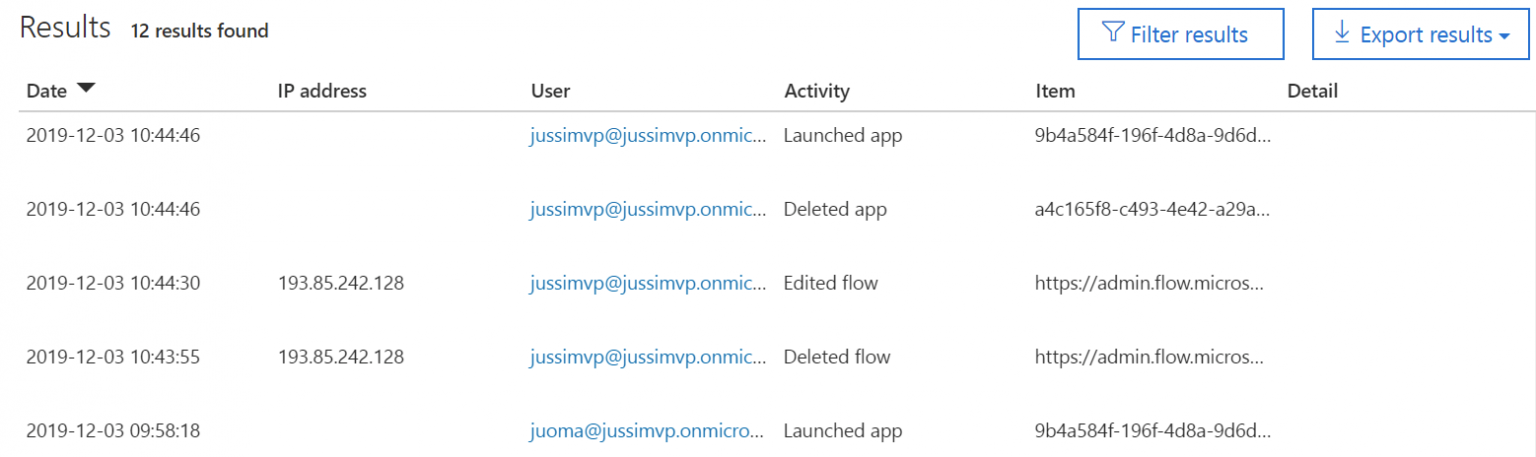

And this results in a list of entries in the audit log:

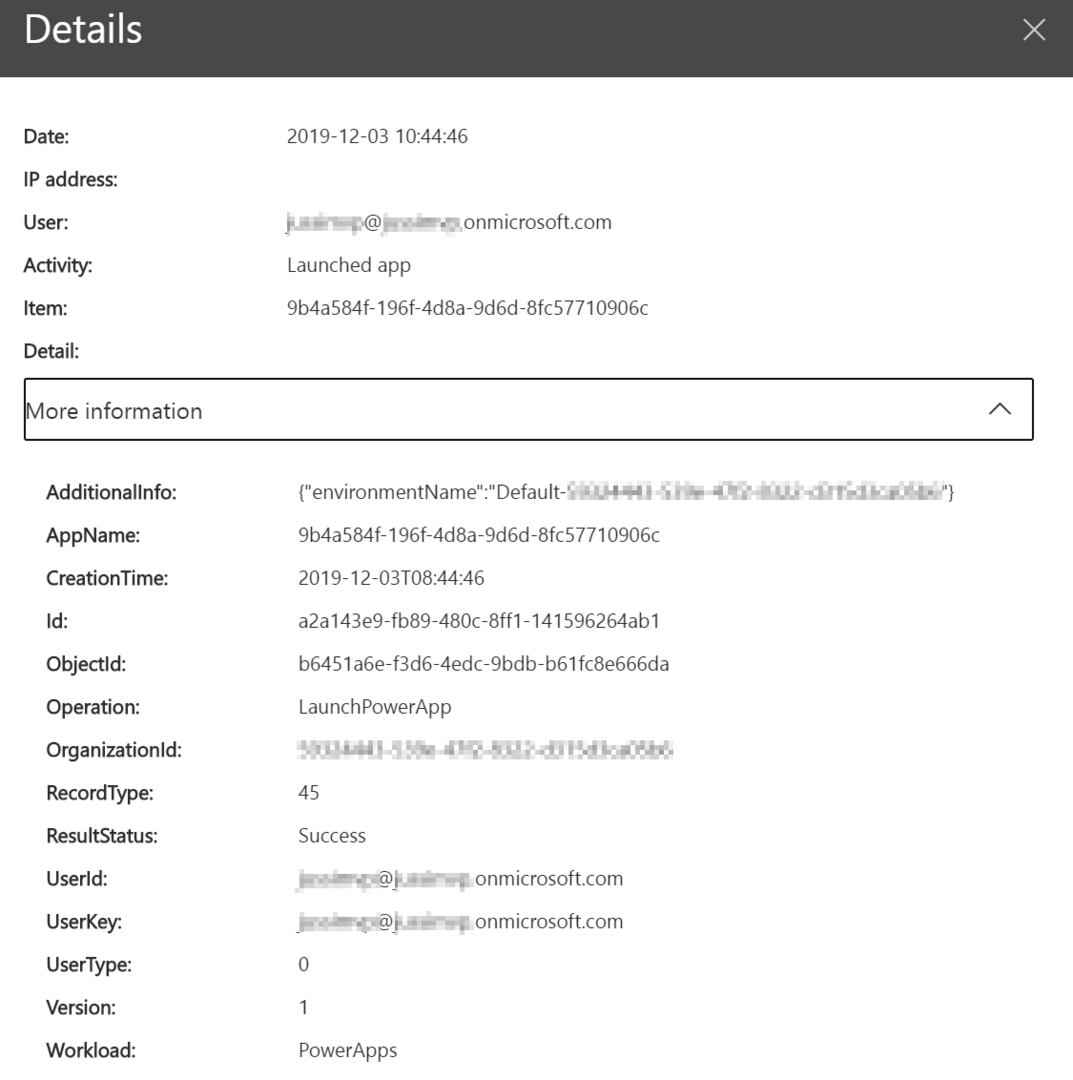

Each item contains plenty of additional details once you click it:

Note: If you are not seeing anything in the logs, despite having events generated, make sure you’ve turned on the auditing feature. See guidance here.

So, what’s the problem here? We can clearly get audited events from the logs and can have longer lunch breaks now.

First, the problem is that one has to dig through these logs manually. They are also not stored indefinitely: retention is typically up to 90 days with an Office 365 E3 license. With an Office 365 E5 license, you can retain the logs for up to one year.

Second, it would be nice to do something with the logs. There are additional solutions one could use, such as Microsoft Cloud App Security, which I think is great, but it also requires additional licensing and some ramp-up time to integrate properly.

And here comes the solution!

Building the solution using PowerShell

I wanted to retrieve all audit logs for the Power Platform automatically, and I started by building the solution with PowerShell. To retrieve the logs programmatically, I need to connect to the Office 365 Management Activity API.

The fine people at SysKit already blogged about this, so I used their PowerShell script mostly as-is, and it just works. There are a couple of important bits here, although the script itself is relatively simple. I fancy the approach where I use something quickly to validate my proposition, and then build out from there. It takes a bit longer to achieve, but I’m also learning a great deal from these exercises.

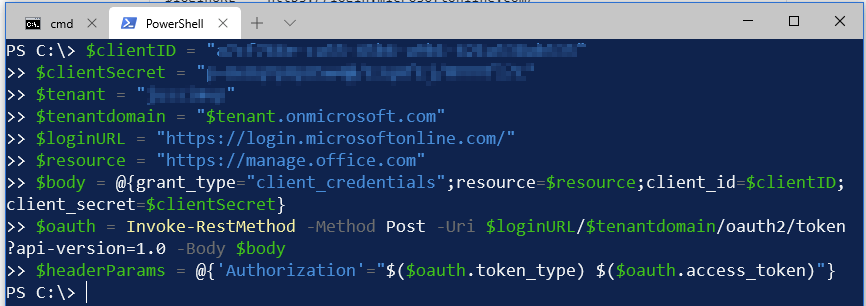

The PowerShell script first needs a few variables. These are taken from the SysKit script directly:

$clientID = "REPLACE_ME"

$clientSecret = "REPLACE_ME_ALSO"

$tenant = "TENANT-NAME"

$tenantdomain = "$tenant.onmicrosoft.com"

$loginURL = "https://login.microsoftonline.com/"

$resource = "https://manage.office.com"

$body = @{grant_type="client_credentials";resource=$resource;client_id=$clientID;client_secret=$clientSecret}

$oauth = Invoke-RestMethod -Method Post -Uri $loginURL/$tenantdomain/oauth2/token?api-version=1.0 -Body $body

$headerParams = @{'Authorization'="$($oauth.token_type) $($oauth.access_token)"}

You need to substitute your own values in the first three lines: Client ID, Client Secret, and Tenant name. So where do we get these? And why do we need a Client ID and a Client Secret in the first place?

To control who has access to the logs, we need to grant permission explicitly. This is done by creating a new Azure AD-integrated app, which acts as our vehicle for accessing the logs. So we’re essentially requesting OAuth2 tokens and specifying which sort of access our solution requires.

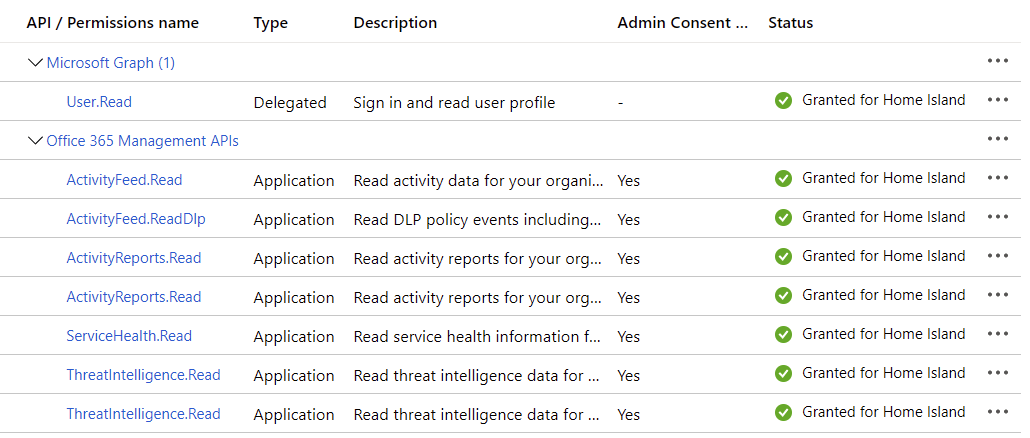

Specific guidance on how to provision the app can be found here. The permissions you’ll need to specify for your Azure AD-defined app are:

This is a bit more than what’s strictly needed, but just to future-proof it, I added Threat Intelligence and Service Health permissions also.

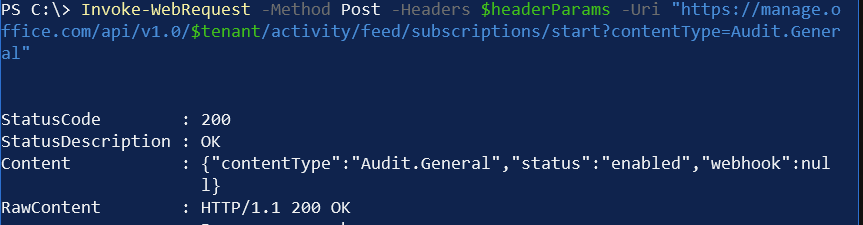

Next, we need the actual logic within the PowerShell. First, a one-liner to call the Office 365 Management Activity API to let it know we’re interested in Audit.General content-type:

Invoke-WebRequest -Method Post -Headers $headerParams -Uri "https://manage.office.com/api/v1.0/$tenant/activity/feed/subscriptions/start?contentType=Audit.General"

How do we know it’s Audit.General? Well, the content types are listed here, and there are five in total:

- Audit.AzureActiveDirectory

- Audit.Exchange

- Audit.SharePoint

- Audit.General

- DLP.All

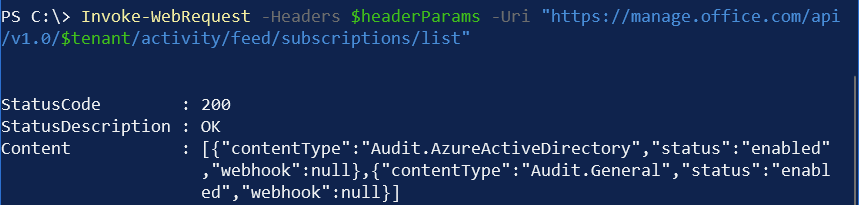

Audit.General holds all general items, including Power Platform audit data. Next, we can verify which content types we’re subscribed to:

Invoke-WebRequest -Headers $headerParams -Uri "https://manage.office.com/api/v1.0/$tenant/activity/feed/subscriptions/list"

And now we can finally get to retrieving our logs! We need to specify the time window to look for logs in. The window can span a maximum of 24 hours, and it must start within the last 7 days.

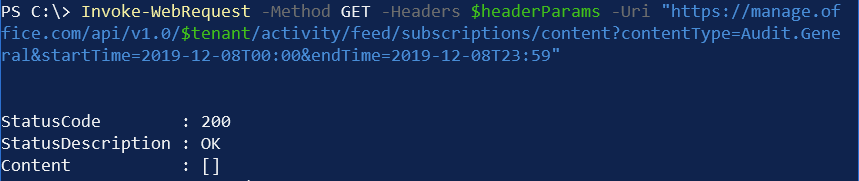

Invoke-WebRequest -Method GET -Headers $headerParams -Uri "https://manage.office.com/api/v1.0/$tenant/activity/feed/subscriptions/content?contentType=Audit.General&startTime=2019-12-01T00:00&endTime=2019-12-01T23:59"

As you can see, the Content property doesn’t contain anything. Should we have any data, the property would contain a JSON payload. It often takes a few minutes for entries to be written to the audit logs, so don’t panic if you don’t see anything even after generating a few entries in the log.

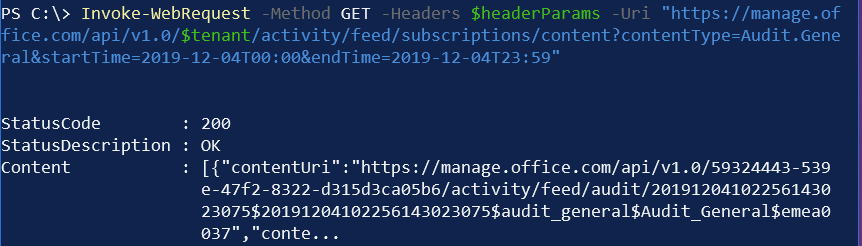

You’ll need to inspect the JSON content to figure out what log you’d like to request.
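The same inspection can also be done in code. Below is a minimal sketch in C# (the language the rest of this solution ends up in), assuming the listing is a JSON array where each item carries a contentUri property pointing at one blob. The sample payload is trimmed and illustrative, built from the blob address used in this post; ContentListing and GetContentUris are names I made up for the example, and it is written as a top-level program (C# 9 or later).

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Illustrative, trimmed listing: one item that points at one blob.
const string listingJson = @"[
  {
    ""contentType"": ""Audit.General"",
    ""contentId"": ""20191201111711914008706$20191201112756564089808$audit_general$Audit_General$emea0037"",
    ""contentUri"": ""https://manage.office.com/api/v1.0/59324443-539e-47f2-8322-d315d3ca05b6/activity/feed/audit/20191201111711914008706$20191201112756564089808$audit_general$Audit_General$emea0037""
  }
]";

foreach (string contentUri in ContentListing.GetContentUris(listingJson))
{
    Console.WriteLine(contentUri);
}

// Hypothetical helper, not part of any SDK.
public static class ContentListing
{
    // Returns the contentUri of every item in a content listing payload.
    public static List<string> GetContentUris(string json)
    {
        var uris = new List<string>();

        // An empty Content property simply means there is nothing to fetch yet.
        if (string.IsNullOrWhiteSpace(json)) return uris;

        using JsonDocument doc = JsonDocument.Parse(json);
        if (doc.RootElement.ValueKind != JsonValueKind.Array) return uris;

        foreach (JsonElement item in doc.RootElement.EnumerateArray())
        {
            if (item.TryGetProperty("contentUri", out JsonElement uri) &&
                uri.ValueKind == JsonValueKind.String)
            {
                var value = uri.GetString();
                if (value != null) uris.Add(value);
            }
        }
        return uris;
    }
}
```

Each returned URI can then be requested with the same Authorization header as the listing call.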

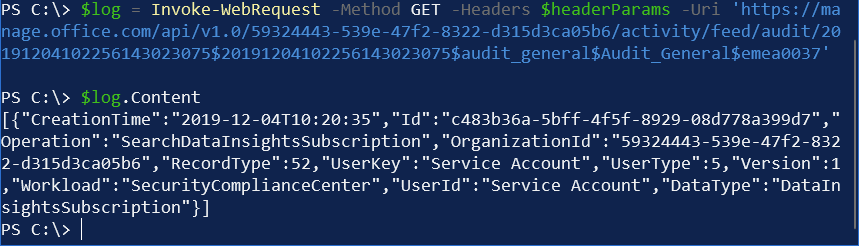

To request a specific log, use the following PowerShell:

Invoke-WebRequest -Method GET -Headers $headerParams -Uri 'https://manage.office.com/api/v1.0/59324443-539e-47f2-8322-d315d3ca05b6/activity/feed/audit/$blob'

And replace $blob with the exact blob address from the previous payload. This is typically in the form of ‘20191201111711914008706$20191201112756564089808$audit_general$Audit_General$emea0037’.

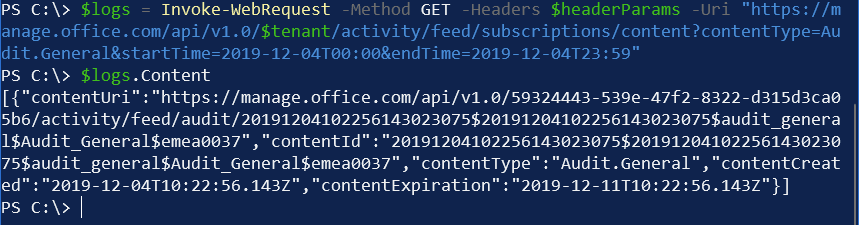

And again, the Content property holds the actual content. In this example, I’m getting data from December 4 (midnight to 23:59), and finding one entry from this period:

[{

"CreationTime": "2019-12-04T10:20:35",

"Id": "<guid>",

"Operation": "SearchDataInsightsSubscription",

"OrganizationId": "$tenant-id",

"RecordType": 52,

"UserKey": "Service Account",

"UserType": 5,

"Version": 1,

"Workload": "SecurityComplianceCenter",

"UserId": "Service Account",

"DataType": "DataInsightsSubscription"

}

]

There we go!

But it’s quite awkward to parse these logs in an interactive PowerShell session. So what I did next was build a small automation that extracts the logs every day and pushes them to Azure Log Analytics.

Building the solution using C# and Azure Log Analytics

I figured I could have a small piece of code running somewhere in the cloud that extracts my audit logs from Office 365 and pushes them to Azure. For storing the data, the obvious choice is Azure Log Analytics.

If you’re not familiar with Azure Log Analytics, you should read this short primer first. In essence, Azure Log Analytics allows us to create a place where any sort of logs can be pushed, from Azure services as well as from other services. These logs can then be queried using the built-in Kusto Query Language (KQL). And when we add Azure Sentinel on top of a Log Analytics Workspace, we can have better security monitoring and trigger events based on the findings. I wrote about Azure Sentinel earlier this year, and you can find a comprehensive guide here.

Azure Log Analytics exposes a neat REST API, allowing us to push custom events to a workspace. My fellow co-host from the Ctrl+Alt+Azure podcast, Tobias Zimmergren, has thankfully done the heavy lifting for us already. He built a wrapper for these calls to Azure Log Analytics using C#, and it’s documented here. The source code is available on GitHub for his wrapper.
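To give an idea of what a wrapper like this has to take care of, here is a rough sketch of the request signing used by the Log Analytics HTTP Data Collector API. This is not lifted from Tobias’ source; I’m assuming it does something along these lines. The class and method names are mine, the workspace ID and key are placeholders, and the sketch only computes the headers without sending anything. It is written as a top-level program (C# 9 or later).

```csharp
using System;
using System.Globalization;
using System.Security.Cryptography;
using System.Text;

// Placeholder values only; real ones come from the workspace's agent settings.
string workspaceId = "00000000-0000-0000-0000-000000000000";
string sharedKey = Convert.ToBase64String(Encoding.UTF8.GetBytes("not-a-real-key"));
string json = "[{\"Operation\":\"Example\"}]";

// The API expects the request date in RFC 1123 format, in UTC.
string date = DateTime.UtcNow.ToString("r", CultureInfo.InvariantCulture);
int contentLength = Encoding.UTF8.GetByteCount(json);

// These headers would accompany a POST of the JSON body to
// https://<workspaceId>.ods.opinsights.azure.com/api/logs?api-version=2016-04-01
Console.WriteLine("Log-Type: PowerPlatformLog");
Console.WriteLine("x-ms-date: " + date);
Console.WriteLine("Authorization: " +
    DataCollectorAuth.BuildAuthorizationHeader(workspaceId, sharedKey, contentLength, date));

// Hypothetical helper illustrating the signing scheme.
public static class DataCollectorAuth
{
    // HMAC-SHA256 over a fixed-format string, keyed with the Base64-decoded shared key.
    public static string BuildSignature(string sharedKey, int contentLength, string rfc1123Date)
    {
        string stringToSign = "POST\n" + contentLength + "\napplication/json\n" +
                              "x-ms-date:" + rfc1123Date + "\n/api/logs";

        using var hmac = new HMACSHA256(Convert.FromBase64String(sharedKey));
        byte[] hash = hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign));
        return Convert.ToBase64String(hash);
    }

    public static string BuildAuthorizationHeader(
        string workspaceId, string sharedKey, int contentLength, string rfc1123Date)
    {
        return "SharedKey " + workspaceId + ":" +
               BuildSignature(sharedKey, contentLength, rfc1123Date);
    }
}
```

The Log-Type header is what decides the name of the custom log in the workspace, which is why the log name is passed along with every entry.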

I had a look at Tobias’ code, and it’s great; the wrapper is easy to use. In essence, you only need to call the SendLogEntry() method:

logger.SendLogEntry(new ActivityEntity

{

Operation = eventDetail.Operation,

SourceSystem = eventDetail.Workload,

AppName = eventDetail.AppName,

User = eventDetail.UserKey,

Status = eventDetail.ResultStatus,

Environment = eventDetail.AdditionalInfo,

CreationDate = eventDetail.CreationTime

}, "PowerPlatformLog");

What I ended up doing was replicating the previous PowerShell script logic in C#. I didn’t have much time to build this, so the code is far from great, but it works, and I guess that’s the point here.

First, I’m defining the log data structure in a class:

public class ActivityEntity

{

public string Operation { get; set; }

public string SourceSystem { get; set; }

public string AppName { get; set; }

public string Environment { get; set; }

public string User { get; set; }

public string Status { get; set; }

public DateTime CreationDate { get; set; }

}

I could gather more data, but it’s more than sufficient for my needs for now. Next, I’ll need to specify my OAuth2 details for gaining permissions to read the Office 365 Management Activity API:

var tenantId = "TENANT_ID_GOES_HERE";

var clientId = "CLIENT_ID_GOES_HERE";

var secret = "CLIENT_SECRET_GOES_HERE";

I need to specify my Azure Log Analytics workspace ID and key also:

LogAnalyticsWrapper logger = new LogAnalyticsWrapper(

workspaceId: "WORKSPACE_ID_GOES_HERE",

sharedKey: "SHARED_KEY_GOES_HERE");

I’m planning to run my code once a day, so I’ll pick all entries for the current day:

CultureInfo invC = CultureInfo.InvariantCulture;

string today = DateTime.Today.ToString("yyyy-MM-ddTHH:mm", invC);

string midnight = DateTime.Today.AddDays(1).AddSeconds(-1).ToString("yyyy-MM-ddTHH:mm", invC);

Wire-up for the message handler, this is as-is from Tobias’ code:

var messageHandler = new OAuthMessageHandler(tenantId, clientId, secret, new HttpClientHandler());

Next, I’m initiating the first HTTP GET call to Office 365 Management Activity API:

using (HttpClient httpClient = new HttpClient(messageHandler))

{

httpClient.BaseAddress = new Uri("https://manage.office.com");

httpClient.Timeout = new TimeSpan(0, 2, 0); //2 minutes

string endpoint = $"/api/v1.0/{tenantId}/activity/feed/subscriptions/content?contentType=Audit.General&startTime={today}&endTime={midnight}";

HttpRequestMessage message = new HttpRequestMessage(new HttpMethod("GET"), endpoint);

var resp = httpClient.SendAsync(message, HttpCompletionOption.ResponseHeadersRead).Result;

var response = resp.EnsureSuccessStatusCode();

dynamic items = JsonConvert.DeserializeObject(response.Content.ReadAsStringAsync().Result);

And as I’m expecting to get a JSON payload back, I’m deserializing the response. I’m then looping through each item, as each item might hold interesting data (links to blobs):

foreach (dynamic item in items)

{

var itemContent = item.contentUri;

endpoint = itemContent;

message = new HttpRequestMessage(new HttpMethod("GET"), endpoint);

resp = httpClient.SendAsync(message, HttpCompletionOption.ResponseHeadersRead).Result;

response = resp.EnsureSuccessStatusCode();

dynamic eventDetails = JsonConvert.DeserializeObject(response.Content.ReadAsStringAsync().Result);

And once again I’m deserializing that payload. And now it’s trivial to pick up the content we’re interested in – the workload is called PowerApps or MicrosoftFlow:

try

{

foreach (dynamic eventDetail in eventDetails)

{

if (eventDetail.Workload == "PowerApps" || eventDetail.Workload == "MicrosoftFlow")

{

Console.Write("Logging: {0}\t", eventDetail.Workload);

logger.SendLogEntry(new ActivityEntity

{

Operation = eventDetail.Operation,

SourceSystem = eventDetail.Workload,

AppName = eventDetail.AppName,

User = eventDetail.UserKey,

Status = eventDetail.ResultStatus,

Environment = eventDetail.AdditionalInfo,

CreationDate = eventDetail.CreationTime

}, "PowerPlatformLog");

Console.WriteLine("Done");

}

else

{

Console.WriteLine("Skipped: " + eventDetail.Workload);

}

}

}

catch (Exception ex)

{

Console.WriteLine("Error: " + ex.ToString());

}

And that’s it! I used a very rudimentary way to troubleshoot my initial version, as I wasn’t sure what sort of data I was getting.
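Once the shape of the data is known, the dynamic access could be swapped for a small typed model. The sketch below is one way to do that, assuming System.Text.Json instead of the Json.NET calls used above, and assuming the property names match the audit records shown earlier. AuditEvent and AuditFilter are names I made up; the first sample record is the entry from this post (trimmed), and the second is an invented Power Apps entry purely for illustration. It is written as a top-level program (C# 9 or later).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Sample blob content: one real-looking record from this post, one made-up Power Apps record.
const string blobJson = @"[
  { ""CreationTime"": ""2019-12-04T10:20:35"", ""Operation"": ""SearchDataInsightsSubscription"",
    ""Workload"": ""SecurityComplianceCenter"", ""UserKey"": ""Service Account"" },
  { ""CreationTime"": ""2019-12-04T11:00:00"", ""Operation"": ""LaunchPowerApp"",
    ""Workload"": ""PowerApps"", ""UserKey"": ""someone@example.com"" }
]";

foreach (AuditEvent e in AuditFilter.PowerPlatformOnly(blobJson))
{
    Console.WriteLine($"{e.CreationTime:s} {e.Workload} {e.Operation} {e.UserKey}");
}

// Only the fields this solution cares about; unknown JSON properties are ignored.
public class AuditEvent
{
    public DateTime CreationTime { get; set; }
    public string Operation { get; set; }
    public string Workload { get; set; }
    public string UserKey { get; set; }
    public string ResultStatus { get; set; }
}

// Hypothetical helper, not part of any SDK.
public static class AuditFilter
{
    // Case-insensitive match is a design choice here; the original code compares exactly.
    private static readonly HashSet<string> Workloads =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase) { "PowerApps", "MicrosoftFlow" };

    // Deserializes a blob payload and keeps only Power Apps and Power Automate events.
    public static List<AuditEvent> PowerPlatformOnly(string json)
    {
        var events = JsonSerializer.Deserialize<List<AuditEvent>>(json) ?? new List<AuditEvent>();
        return events
            .Where(e => e.Workload != null && Workloads.Contains(e.Workload))
            .ToList();
    }
}
```

A typed model means a misspelled property name fails at compile time rather than silently yielding null at run time, which is the main trade-off against the quicker dynamic approach.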

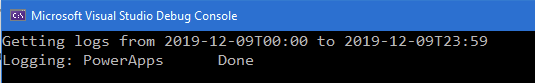

The first run gets one entry in the logs:

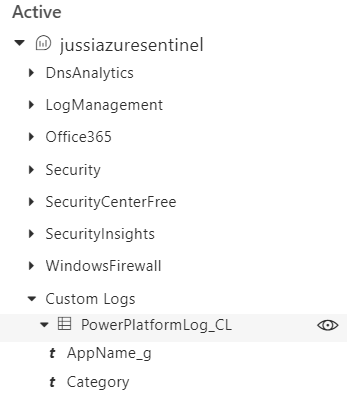

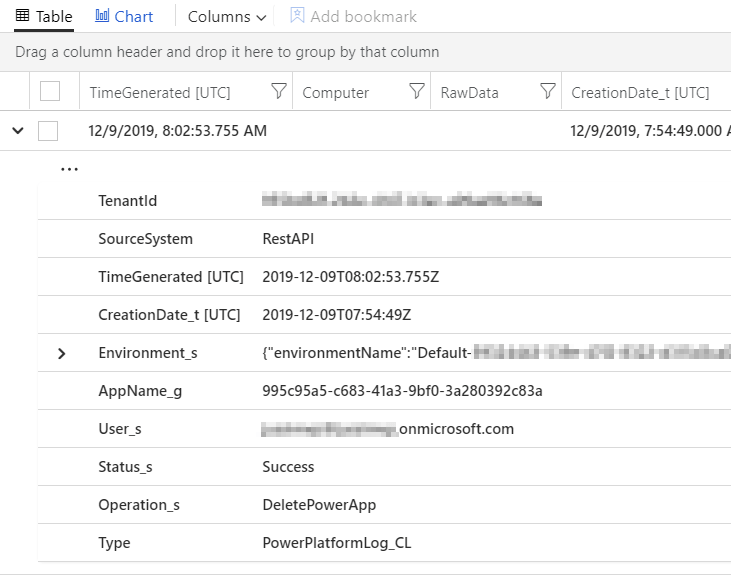

Switching to Azure Log Analytics, we should now see a custom log called PowerPlatformLog_CL (Log Analytics appends the _CL suffix to custom log names):

You don’t even need to learn how to query it. Just click on the small eye-shaped icon next to the category, and it auto-generates a sample query:

Run the query and presto! We’re getting our custom log events in Azure Log Analytics from Office 365 Management Activity API:

You could schedule this code in Azure Functions, Azure WebJobs, a custom container in Azure Container Instances, or even in a virtual machine.
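Whichever host runs the job, each run has to work out which time window to request. Here is a minimal sketch of that, assuming the API interprets startTime and endTime as UTC and enforces the limits mentioned earlier (at most 24 hours per request, starting no more than 7 days back). AuditWindow and its methods are names I made up, and the sketch is written as a top-level program (C# 9 or later).

```csharp
using System;
using System.Globalization;

DateTime now = DateTime.UtcNow;
var (start, end) = AuditWindow.ForDay(now);

if (AuditWindow.IsValid(start, end, now))
{
    Console.WriteLine("startTime=" + AuditWindow.Format(start) +
                      "&endTime=" + AuditWindow.Format(end));
}

// Hypothetical helper, not part of any SDK.
public static class AuditWindow
{
    // Returns 00:00 to 23:59 of the given day. Pass a UTC value to get a UTC window.
    public static (DateTime Start, DateTime End) ForDay(DateTime day)
    {
        DateTime start = day.Date;
        DateTime end = start.AddDays(1).AddMinutes(-1);
        return (start, end);
    }

    // Checks the window against the limits: max 24 hours, start within the last 7 days.
    public static bool IsValid(DateTime start, DateTime end, DateTime utcNow)
    {
        return start < end
            && end - start <= TimeSpan.FromHours(24)
            && start >= utcNow.AddDays(-7);
    }

    // Formats a timestamp the way the query string in this post does.
    public static string Format(DateTime value)
    {
        return value.ToString("yyyy-MM-dd'T'HH:mm", CultureInfo.InvariantCulture);
    }
}
```

If the job runs early in the day, requesting the previous day instead, by passing DateTime.UtcNow.AddDays(-1), may catch entries that were written to the log late.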

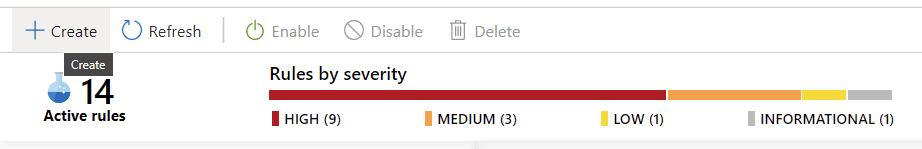

Finally, switch to Azure Sentinel and click Analytics > Create to create a new rule for our custom events. Select Scheduled rule.

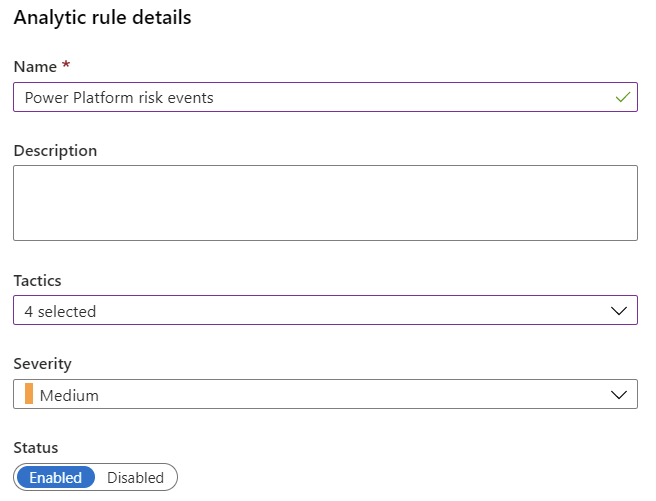

You can define the rule details in the first step:

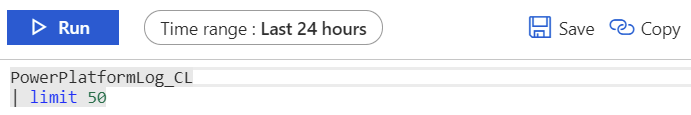

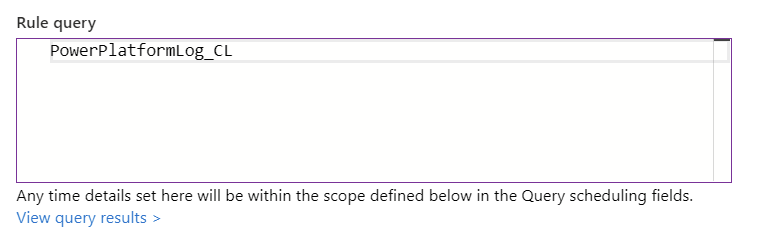

In the next screen, you’ll need to specify a query. If you’re not sure what to query, just query everything with PowerPlatformLog_CL:

You could also map your query results to entities. I chose not to do this for now, as I wanted to create a rule for everything generated from the Power Platform. With entities, one could easily query for certain aspects of logs, such as events from specific users, environments or services.

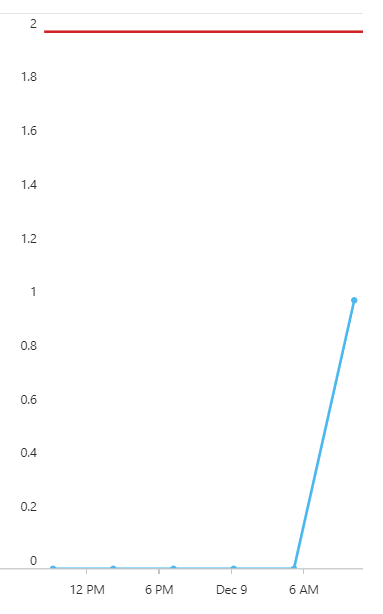

I set the alert threshold to “Is greater than 2”, so more than two events from the Power Platform would result in an alert:

Finally, the alert will call a playbook. Playbooks are Azure Logic Apps-based orchestrations that essentially allow you to perform any sort of activity. You can create one first, and it gives you a few templates to choose from:

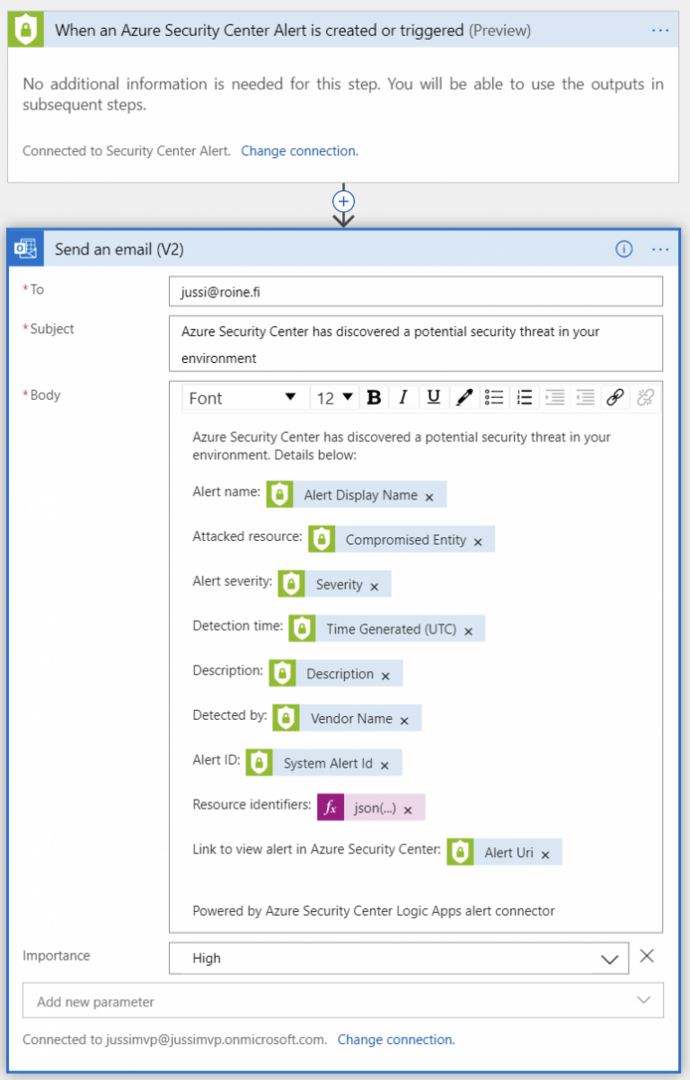

These are simple email notifications, but at least there’s a template! I next-next-clicked through the notification template, and it’s very simple:

And that’s it!

In summary

Building this solution was a lot of fun. It was also educational, even though all the individual pieces of the solution were things I was already familiar with.

The Office 365 Management Activity API is interesting, and I find it highly useful. The flexibility of Azure Log Analytics was a surprise, even though I knew one could push custom events to it. And finally, adding Azure Sentinel to the solution made it that much more interesting.