Modernizing file transfer integrations in Microsoft Azure

File transfers, where we needed to move data back and forth between two environments, were the bread and butter early in my IT career in the 1990s. The usual candidates and culprits were Xcopy, Robocopy, SCP, FTP, FTPS, and SFTP. I'm leaving out physical media on purpose.

Building integrations primarily for moving files is less common today, but is still often needed. In this blog post, I'll briefly examine the options for modernizing and building file transfer-based integrations utilizing Microsoft Azure.

The need for moving files between environments

Despite modern technology's capabilities, one thing seems to have remained constant throughout the decades: moving files from one server or platform to another. These files are often CSV, XML, or JSON, and the data in them is typically ingested and processed automatically in batches.

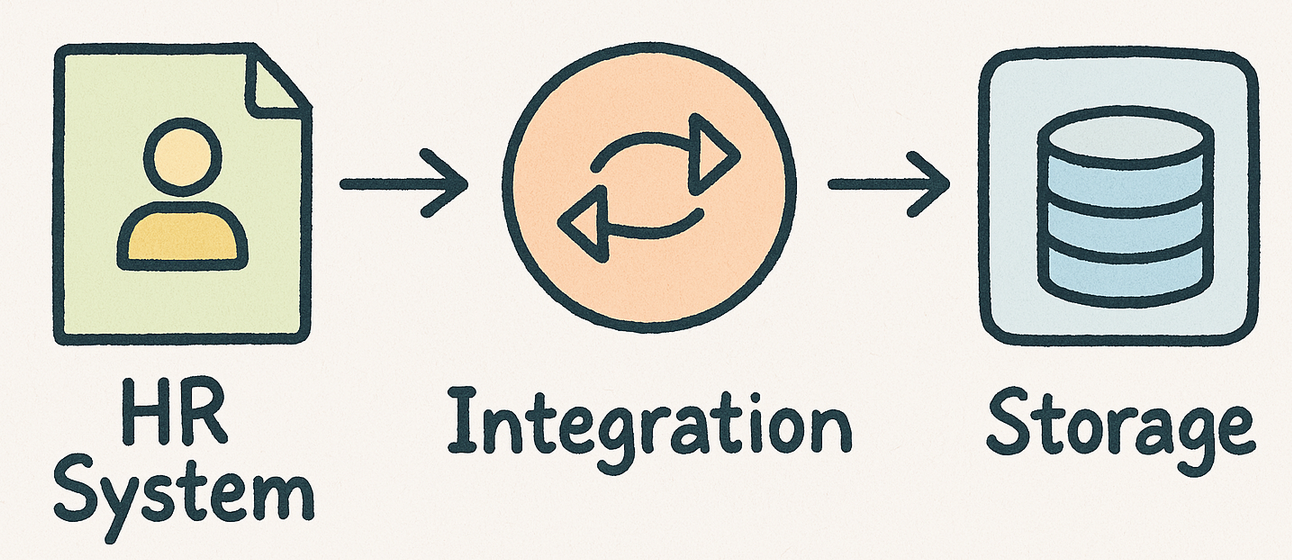

In a typical scenario, files are generated in a source system, such as an HR platform acting as the source of truth for identity and access management. Each day, it generates files that are distributed to dependent systems, which pick them up as part of their scheduled processes. This is especially prevalent in semi-disconnected, often cloud-based setups, where a strong dependency on legacy systems in on-premises networks is still common and expected. Thus, the usual approach is to move data as files on a schedule.
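To make the scheduled batch idea concrete, here is a minimal sketch of what a receiving system's job might do on each run. The function name `ingest_batch` and the `inbox` and `processed` folders are my own assumptions for illustration, not part of any specific product, and XML is left out to keep it short.

```python
import csv
import json
import shutil
from pathlib import Path


def ingest_batch(inbox: Path, processed: Path) -> dict[str, int]:
    """Parse every CSV and JSON file in `inbox`, then move it to `processed`.

    Returns a mapping of file name to the number of records read.
    """
    processed.mkdir(parents=True, exist_ok=True)
    counts: dict[str, int] = {}

    # Sort for a deterministic processing order across runs.
    for path in sorted(inbox.iterdir()):
        if not path.is_file():
            continue

        suffix = path.suffix.lower()
        if suffix == ".csv":
            with path.open(newline="", encoding="utf-8") as handle:
                records = list(csv.DictReader(handle))
        elif suffix == ".json":
            with path.open(encoding="utf-8") as handle:
                data = json.load(handle)
            # Treat a top-level list as many records, anything else as one.
            records = data if isinstance(data, list) else [data]
        else:
            continue  # Leave unknown file types untouched.

        counts[path.name] = len(records)
        # Moving the file out of the inbox prevents double processing.
        shutil.move(str(path), str(processed / path.name))

    return counts
```

A scheduler (cron, Task Scheduler, or a cloud equivalent) would call something like this once per cycle, after the files have landed.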

FTP and SFTP are (still) here

I find it amusing that even the most non-technical person can often say, "We should do FTP here," without fully understanding what that would entail.

In 2025, FTP is still very much alive, both the unencrypted classic FTP and more secure alternatives like SFTP and FTPS. These are still superior ways to transfer almost any number of files, regardless of their size, between two systems. Often, a dedicated integration component is involved as well.

In enterprise environments, the integration piece I usually see is a convoluted state machine that allows you to define batch jobs, such as FTP-based file copy. Defining a new batch job takes time and effort, and people affectionately call the system by a nickname.

SFTP feels like the peak of modern file transfers, yet we've already had it for a decade or three. It is well understood, practically secure, trustworthy, and reliable. With SFTP, larger companies usually require a public-private key pair (an SSH key pair) so that authentication and related security can be managed centrally.

What about FTP, then? If SFTP is encrypted, hasn't it superseded older FTP implementations? I thought so, too, but these file transfers are still alive. One reason is that many source systems only support FTP for pushing files out, or they expose an FTP endpoint that makes little sense to modernize unless the whole system is replaced outright.
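When a legacy system only exposes an FTP endpoint, the pulling side can stay quite small. Below is a rough sketch using Python's standard `ftplib`; the helper name `download_all`, the host, and the folder names are hypothetical, and it assumes the remote folder contains only plain files.

```python
from ftplib import FTP
from pathlib import Path


def download_all(ftp: FTP, remote_dir: str, local_dir: Path) -> list[str]:
    """Download every file in `remote_dir` over an already logged-in session."""
    local_dir.mkdir(parents=True, exist_ok=True)
    ftp.cwd(remote_dir)

    downloaded = []
    # NLST returns names only; this sketch assumes they are all files.
    for name in ftp.nlst():
        target = local_dir / name
        with target.open("wb") as handle:
            # RETR streams the file in binary chunks to the callback.
            ftp.retrbinary(f"RETR {name}", handle.write)
        downloaded.append(name)
    return downloaded


# Usage (hypothetical host and credentials):
# with FTP("ftp.example.internal") as ftp:
#     ftp.login("user", "password")
#     download_all(ftp, "/outbound", Path("incoming"))
```

If the server supports FTPS, `ftplib.FTP_TLS` could be swapped in, calling `prot_p()` after login to protect the data channel as well.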

SFTP solution on Azure

Microsoft Azure's native solution for exposing an SFTP endpoint is part of Azure Storage. It's cloud-native, so you don't need to provision virtual machines or handle identities in Active Directory.

(I've previously written about this solution using a more technical approach here.)

SFTP on Azure Storage allows you to utilize SSH keys, and it's easy to configure. In addition, due to the nature of storage in Azure, securing connectivity and managing logging are well understood.
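As a small illustration of how a client would reach such an endpoint with an SSH key, here is a sketch that only assembles the command line for the standard OpenSSH `sftp` client. It assumes, as far as I know the service, that the login name is the storage account name and the local user name joined by a dot, and that the host is the account's blob endpoint; verify both against your own deployment. The account, user, and key path are made up.

```python
def build_sftp_command(storage_account: str, local_user: str,
                       private_key_path: str) -> list[str]:
    """Assemble an OpenSSH `sftp` command for an Azure Storage SFTP endpoint.

    Assumption: login name is `<account>.<local user>` and the host is
    `<account>.blob.core.windows.net`. Check this for your environment.
    """
    host = f"{storage_account}.blob.core.windows.net"
    username = f"{storage_account}.{local_user}"
    # -i points the client at the private half of the SSH key pair.
    return ["sftp", "-i", private_key_path, f"{username}@{host}"]


# Hypothetical values, for illustration only.
command = build_sftp_command("contosofiles", "hrexport", "/home/me/.ssh/id_ed25519")
print(" ".join(command))
```

The resulting list could be passed to `subprocess.run` in a scheduled job, or the printed line pasted into a terminal for a first connectivity test.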

The only real downside of this solution is cost. Enabling SFTP on a storage account will add a hefty $219 per month, on top of the usual data operations and transfer fees. Theoretically, you'd only need to deploy this once and then separate the needs of each source system within that one account. This is rarely the case in practice for many reasons: regulations, data isolation, logging, etc.
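To see how quickly that adds up when each source system ends up with its own storage account, here is a back-of-the-envelope calculation. It uses only the $219 per month figure quoted above and deliberately ignores data operations and transfer fees; the function name and the account counts are just examples.

```python
SFTP_MONTHLY_FEE_USD = 219  # Per-account SFTP fee quoted in the text.


def sftp_endpoint_cost(accounts: int, months: int = 12) -> int:
    """Fixed SFTP fee for a number of storage accounts over a period."""
    return accounts * SFTP_MONTHLY_FEE_USD * months


print(sftp_endpoint_cost(1))  # One shared account for a year: 2628
print(sftp_endpoint_cost(4))  # Four isolated accounts for a year: 10512
```

Four isolated endpoints already cost more than $10,000 a year before a single file has moved, which is why the shared-account design is tempting even when it's hard to justify.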

A cheaper but more cumbersome option is to deploy an SFTP service in a virtual machine. You'd then only pay for the virtual machine (and relevant egress fees), which would cost less than $219 monthly. While this gives a bit more flexibility, it also adds the overhead of managing the virtual machine, the SFTP configuration, and monitoring security and access, among other things. Still, it's a solution I often see, as it closely resembles how solutions like these were built back in the day, before the public cloud.

What about Microsoft Fabric?

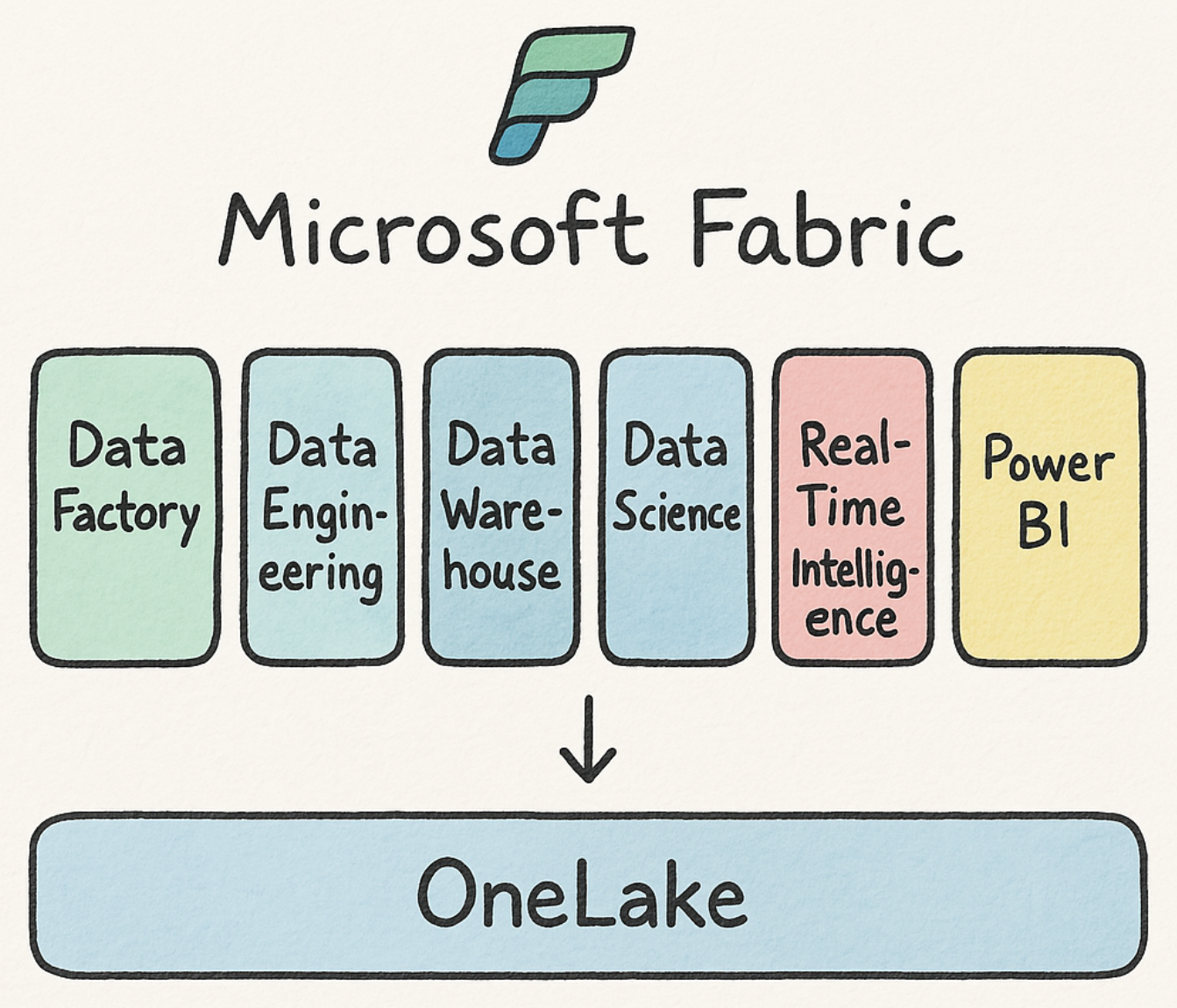

A few years ago, Microsoft announced Fabric. I didn't fully grasp its magnitude at the time, as it seemed like just another data warehouse solution. Since then, people with vastly more experience in Fabric have lectured me, for good reason, on why Fabric will bring all data and integration capabilities together in one suite.

Fabric can be utilized for file integrations, using the Data Factory capabilities. This, in turn, allows you to use FTP or SFTP in a more advanced way for complex integrations, automatic file copies, and transformations.

However, if your business case revolves more around moving the files and less around benefiting from Fabric's other capabilities, it might be overkill. While Fabric can be very affordable, starting at just $321 a month, it's still too costly for mostly simple file transfer integrations, especially as managing and administering the instance requires time and skills.

How about Logic Apps?

Another option, perhaps for more specific needs, is Azure Logic Apps. It's an integration and orchestration capability that allows you to build complex workflows.

The beauty of Logic Apps is that it's essentially a low-code approach to describing and executing workflows. If you choose, these workflows can then connect to different systems to transfer files. One of the connectors supports on-premises file shares, for example. Overall, Logic Apps is a beautiful way to implement rapid integration. In the long term, however, you'll need proper governance, an Infrastructure as Code approach, and a design to avoid dozens of workflows that must be individually governed.

Logic Apps can also connect with file transfer services, and can thus be used as the automation engine between your source and destination systems.

Workflows can be triggered in multiple ways, and business logic can easily be adjusted to changing requirements. Logic Apps is one of my go-to capabilities for rapid prototyping, before diving into custom code or more costly integration endeavours.

In closing

I remember reading that about 80% of business IT systems revolve around a single need: moving data from one place to another, typically from one database to another, using a graphical interface. That may well still hold in 2025, so it's imperative to understand the different core capabilities for building integrations that move and/or transform files and data between systems.

My advice is to analyze the long-term needs and start small, carefully. Microsoft Fabric seems to be quickly becoming the go-to tool for many professionals, and that's fine. Remember that sometimes simple is great; you can always grow from there.

Reach out if I can help

I work as a Senior Security Architect at a company I co-founded, Not Bad Security. Feel free to reach out if I can assist you with any Microsoft security-related deployments, assessments, or troubleshooting.