Governing Generative AI with Data Security Posture Management

Every week, we read about new innovations, advancements, and capabilities in generative AI. Many of them involve ChatGPT, Microsoft's Copilot workloads, Google's Gemini, and similar technologies, often in a mostly fun context, such as generating new images.

People are inevitably flocking to try out and test new and exciting capabilities. Therefore, organizations must determine how to best govern AI services, as these services might inadvertently put their data and businesses at risk.

In this article, I'll look at how and why Data Security Posture Management for AI is fast becoming a crucial tool for businesses of any size.

What is Data Security Posture Management?

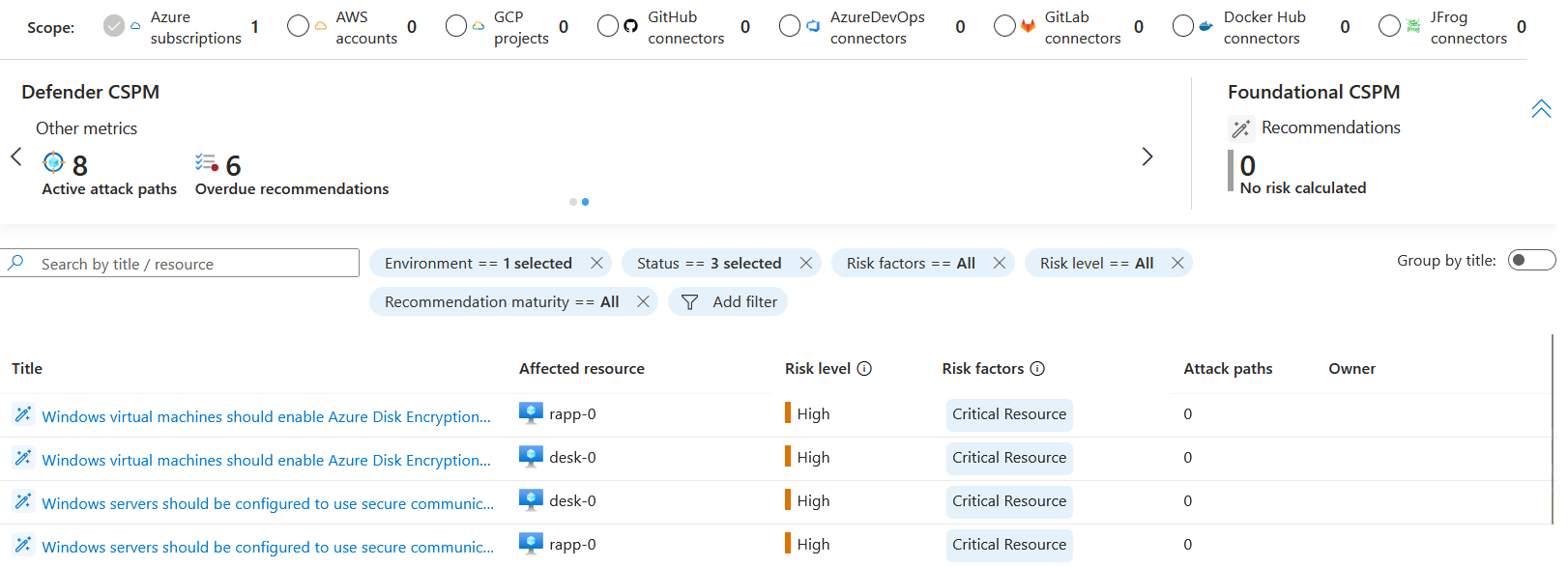

Security Posture Management comes in many shapes and forms. Cloud Security Posture Management (CSPM) is generally used to gather all security aspects of a cloud platform in one place: security controls, discovered vulnerabilities, asset tracking and inventory, and mitigations. A fine example is the CSPM capability in Microsoft Defender for Cloud.

The image above shows that a given Azure environment (with one subscription) has eight active (possible) attack paths. Thus, CSPM gives us a thorough view of what's deployed and what sort of security posture we're looking at.

CSPM requires onboarding environments and assets through agents, proxies, and scans to form a comprehensive platform picture.

Data Security Posture Management (DSPM) is the sibling of CSPM: what data do we have, how is it used, and what is our security posture across the board? More specifically, DSPM for AI drills further down into all generative AI-related workloads, data, services, and platforms.

Understanding DSPM for AI capabilities

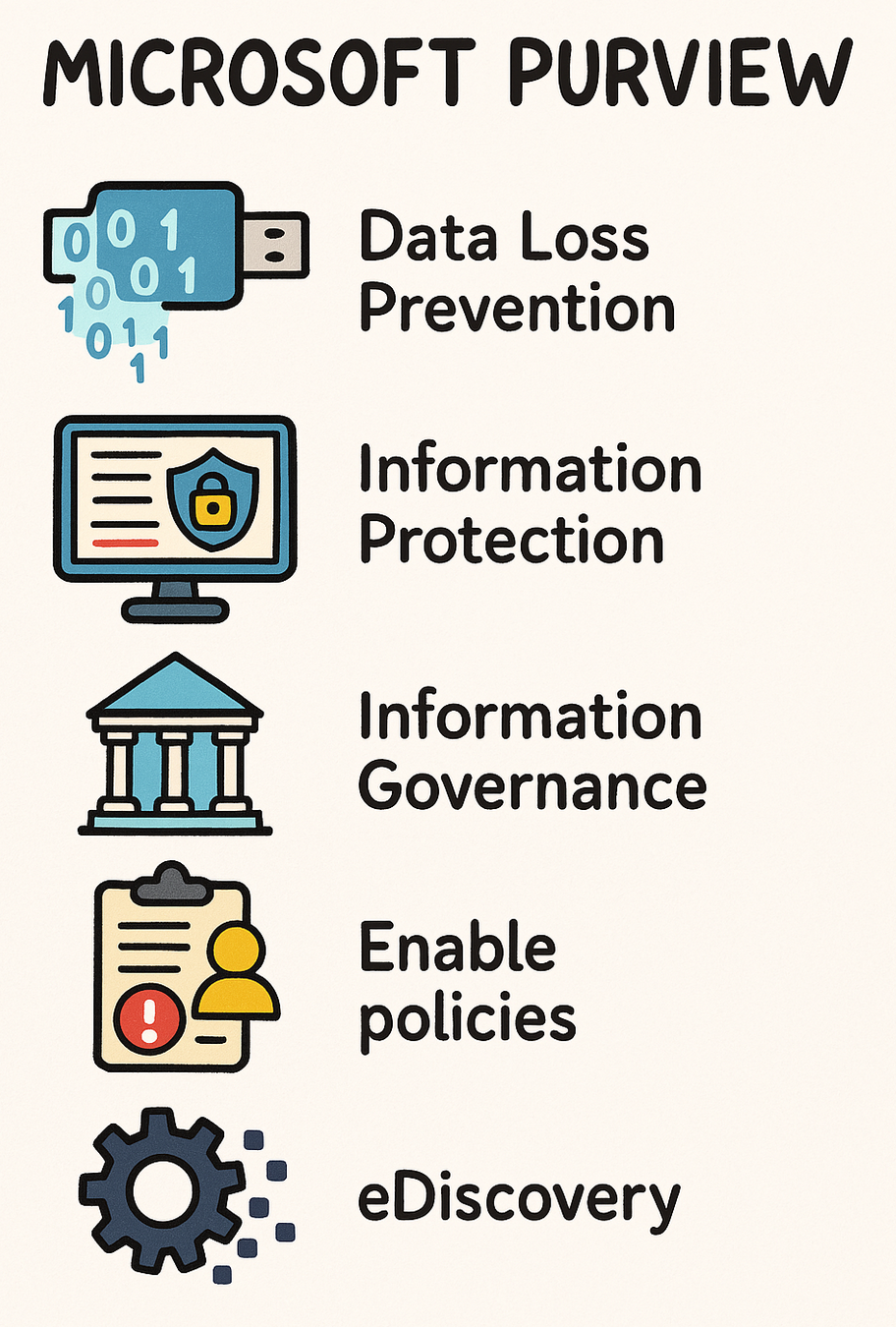

Microsoft offers a DSPM for AI capability as part of its Microsoft Purview services stack. Purview includes around 15 somewhat intertwined services covering all aspects of data security, governance, risk, and compliance. It's a beast, and its capabilities are rarely all deployed, at least not in one go.

To highlight a few, the services in Purview include Data Loss Prevention (DLP), Information Protection (MIP), and Information Barriers.

DSPM for AI focuses explicitly on all generative AI-related workloads and data: activity, policies, data assessments, and compliance controls. We're still in the relatively early days of commercial gen AI capabilities. Yet, it's essential to start governing these services, regardless of whether they reside within the company's platforms or as third-party offerings.

I usually see DSPM for AI being considered for managing the risk of employee usage of gen AI services. "What data is being sent to ChatGPT?" would be a topical question. Many companies have blocked access to these popular services flat out, while others turn a blind eye and hope nothing sensitive is passed on to external parties. That's where DSPM for AI would come into play: let's reveal the state and act accordingly.

What can we achieve with DSPM for AI?

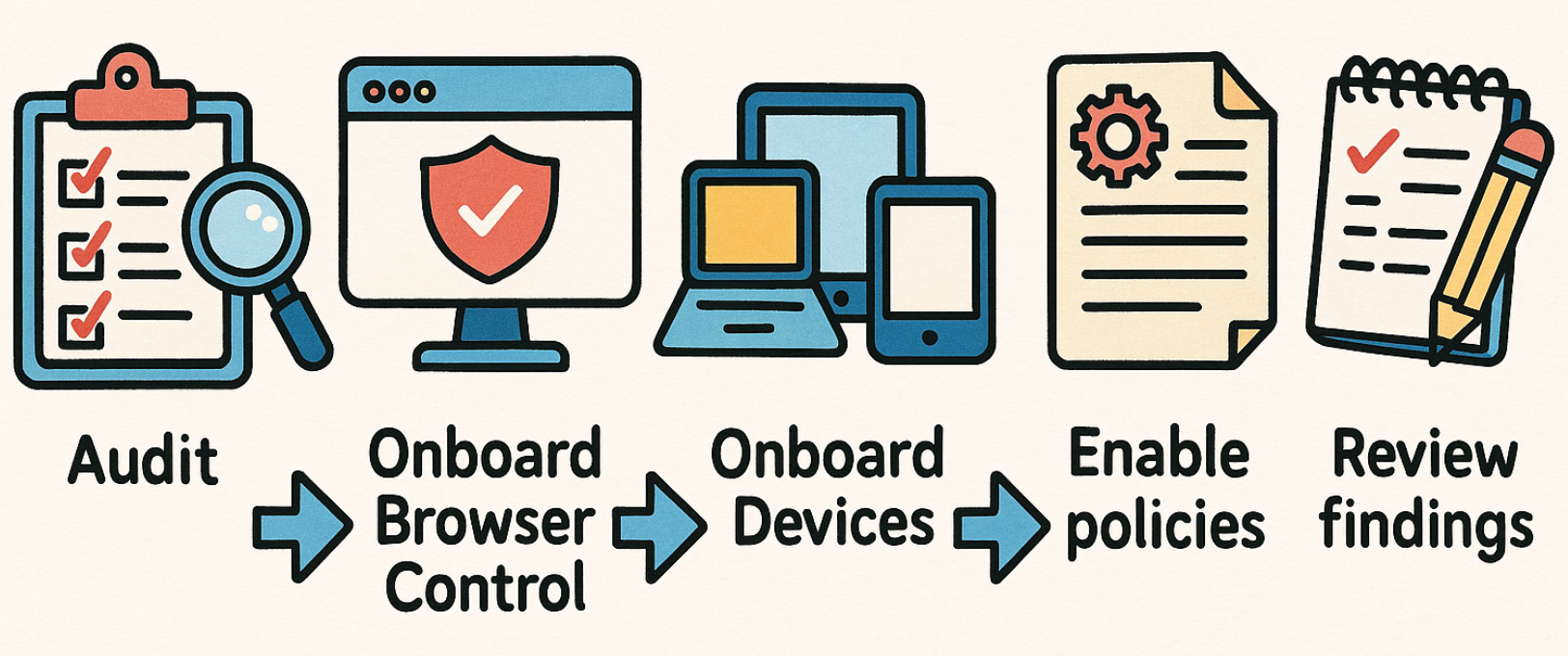

First, we must onboard user devices and browsers to monitor the traffic effectively. Microsoft had capabilities for this before DSPM for AI was available, so we'll piggyback on Defender for Endpoint and Intune. We'll also deploy browser extensions that feed us information from user browser sessions.

We can then define policies: predefined safeguards that allow us to detect unethical content, sensitive prompts, and otherwise risky AI usage. Once all of this is done, we can review the findings, and these reports will feed into our overall risk and compliance model and risk appetite.
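To make the idea of a policy that flags sensitive prompts more concrete, here is a minimal sketch in Python. It is not how Purview implements its classifiers, and it does not use any Purview API. The pattern names, the `Finding` type, and the `evaluate_prompt` function are all hypothetical and exist only for illustration.

```python
import re
from dataclasses import dataclass

# Hypothetical patterns for illustration only. Real policies rely on far
# more robust classifiers than these two regular expressions.
SENSITIVE_PATTERNS = {
    "credit_card": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


@dataclass
class Finding:
    """One flagged prompt: which app, which user, which data types matched."""
    app: str
    user: str
    matched_types: list


def evaluate_prompt(app, user, prompt):
    """Return a Finding if the prompt matches any pattern, otherwise None."""
    matched = sorted(
        name
        for name, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(prompt)
    )
    return Finding(app, user, matched) if matched else None


if __name__ == "__main__":
    risky = "Summarize the contract for jane.doe@example.com, card 4111 1111 1111 1111"
    print(evaluate_prompt("ChatGPT", "alice", risky))
    print(evaluate_prompt("ChatGPT", "alice", "Write a haiku about autumn"))
```

The sketch only shows the shape of the problem: a prompt goes in, and either nothing or a labeled finding comes out. Those findings are what end up in the reports.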

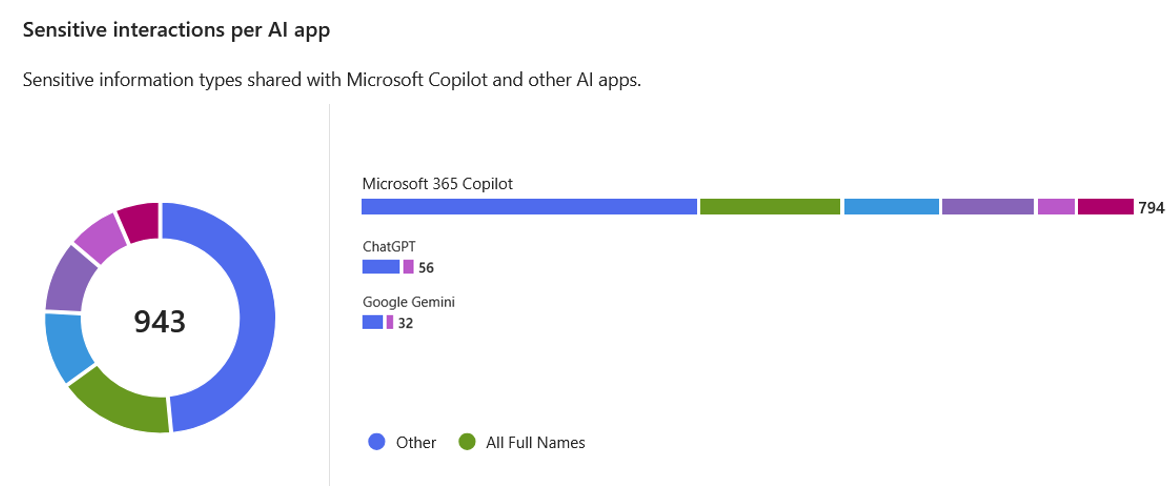

The report's snapshot above shows the usage of Microsoft 365 Copilot, ChatGPT, and Google Gemini. Drilling down would allow us to find evidence of what data was passed on and whether there is a risk exposure.

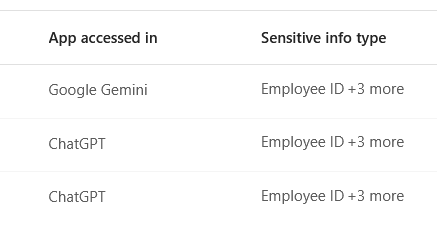

Detailed info includes detected data sent to external gen AI services, such as ChatGPT.

Risk and compliance management

While DSPM for AI provides a reasonably comprehensive view of all end-user devices and their respective usage of gen AI, the overall risk and compliance management, as well as the risk appetite, must be set elsewhere.

Companies typically set clear baselines on risk mitigation, exceptions, and acceptance, and these baselines have to be aligned with DSPM for AI (as well as CSPM and similar capabilities). DSPM for AI gives us somewhat processed data, but decisions and mitigating actions are made in a different context.
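As a rough illustration of aligning findings with a baseline, the sketch below sorts findings into "accepted" and "needs_review" based on which data types the organization tolerates per AI service. The baseline contents, the finding format, and the `triage` function are assumptions made up for this example. They do not reflect any export format or API in Purview.

```python
from collections import Counter

# Hypothetical baseline: data types the organization accepts in prompts,
# per AI service. Apps missing from the baseline accept nothing.
ALLOWED_BY_APP = {
    "Microsoft 365 Copilot": {"email_address"},
    "ChatGPT": set(),
}


def triage(findings, allowed_by_app):
    """Count findings per (app, outcome), where outcome is
    'accepted' or 'needs_review' according to the baseline."""
    decisions = Counter()
    for finding in findings:
        allowed = allowed_by_app.get(finding["app"], set())
        outcome = "accepted" if finding["data_type"] in allowed else "needs_review"
        decisions[(finding["app"], outcome)] += 1
    return decisions


if __name__ == "__main__":
    # Made-up findings, in an assumed format, purely for demonstration.
    sample_findings = [
        {"app": "Microsoft 365 Copilot", "data_type": "email_address"},
        {"app": "ChatGPT", "data_type": "email_address"},
        {"app": "Google Gemini", "data_type": "credit_card"},
    ]
    for (app, outcome), count in sorted(triage(sample_findings, ALLOWED_BY_APP).items()):
        print(f"{app}: {outcome} x{count}")
```

The point of the design is that the baseline lives outside the tool. DSPM for AI supplies the findings, and the organization's own risk decisions determine what happens to each of them.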

In closing

While DSPM for AI gives us visibility into how generative AI workloads are being used, it's just one capability in a longer process of governing and managing risk and compliance.

Start by assessing the current AI workloads and creating clear guidelines on how AI should be used within the organization. Then, enroll DSPM for AI as one key piece in understanding and assessing the current situation.

Additional information

- DSPM for AI (Microsoft)

Reach out if I can help

I work as a Senior Security Architect at a company I co-founded, Not Bad Security. Feel free to reach out if I can assist you with any Microsoft security-related deployments, assessments, or troubleshooting.