Deploying an Enterprise ChatGPT with Azure OpenAI

I've previously written about using Azure OpenAI, which is fascinating and immensely useful. One thing that companies often ask for is "ChatGPT for the enterprise." The reasoning comes down to data and privacy: companies would prefer that employees use "our" ChatGPT and not the "other" ChatGPT (i.e., the OpenAI ChatGPT service). There is nothing wrong with this, yet building a custom service to mimic a widely used and praised service requires a lot of effort.

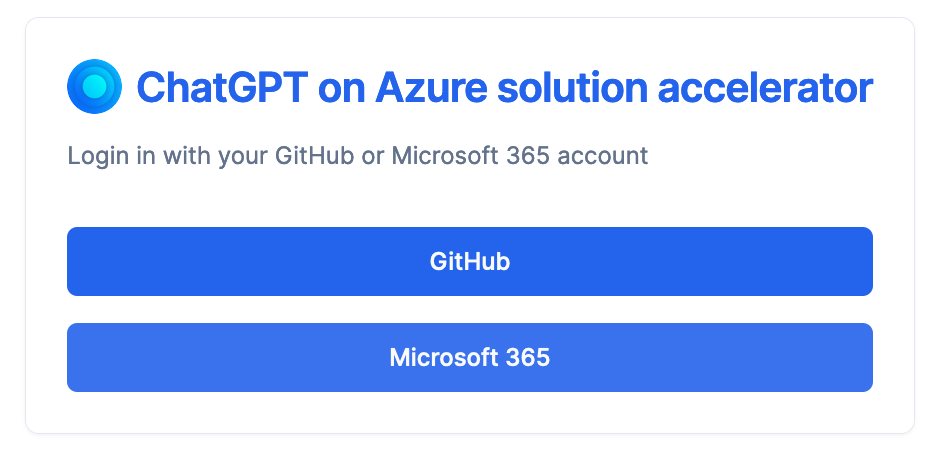

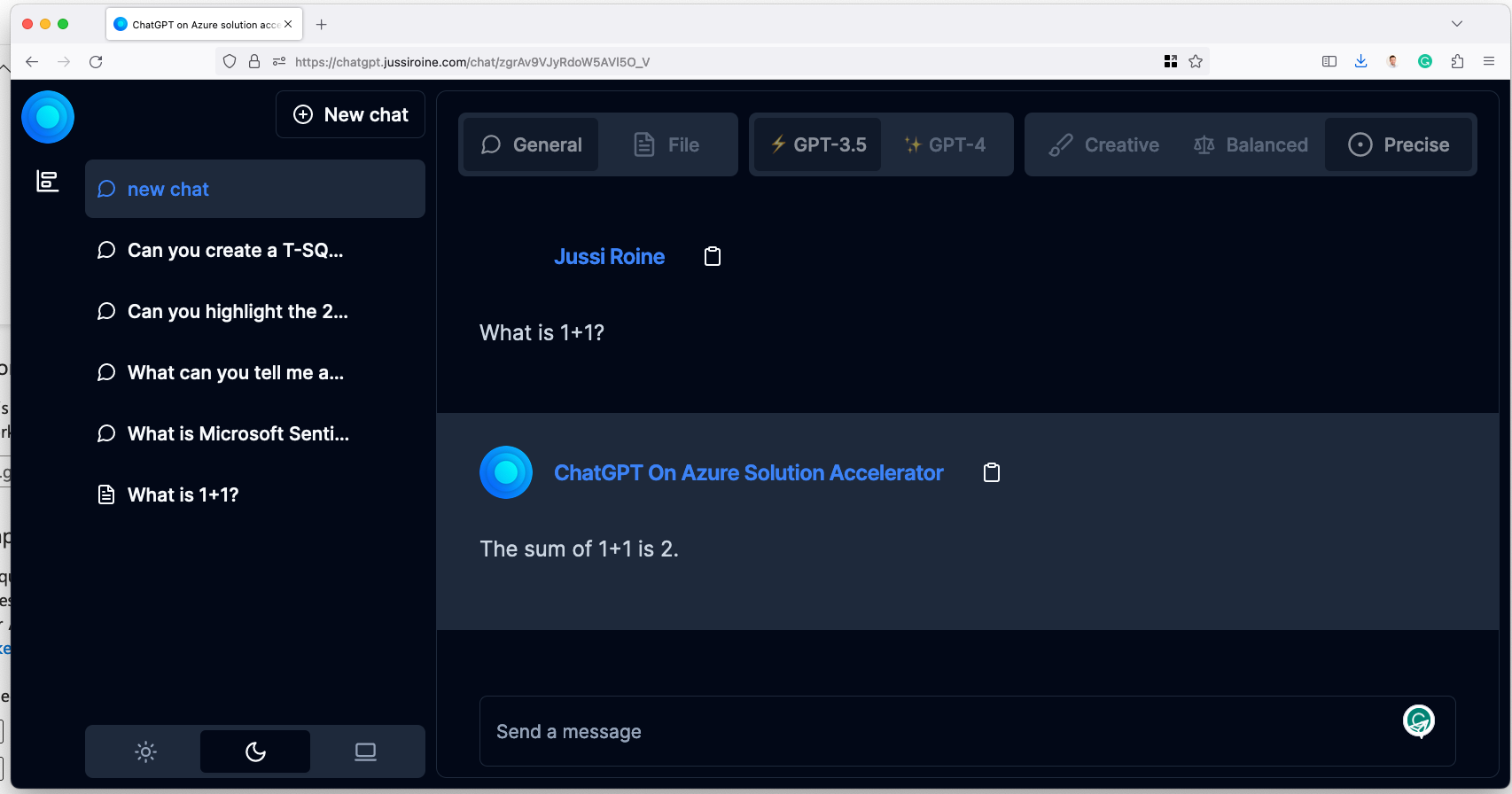

ChatGPT on Azure Solution Accelerator

I noticed last night that Microsoft had opened the repo for ChatGPT on Azure Solution Accelerator. It's a deployable solution that runs on your Azure subscription and provides an interface almost identical to ChatGPT's. But you control everything - access, authentication, authorization, data, etc. This also means you pay for all these resources, in both time and money.

The solution comprises a Web App, a Cosmos DB, and Azure OpenAI. Cosmos DB is used to store historical chats. Custom data can also be introduced, which entails deploying Azure Cognitive Search.

All data - prompts (input), results (output), embeddings (custom data), telemetry (usage), and so forth - remains within your control. Nothing travels back to Azure OpenAI base models or OpenAI models.

Setting it up

The repo has semi-clear instructions for deploying the solution. A few lessons learned from that experience:

First, fork the repo. This allows you the freedom to change or configure anything within the solution.

Next, you deploy the necessary resources - Azure Web App, App Plan, and Cosmos DB. There's a Bicep template for that, which "just works," as expected. You'll also need to provision the Azure OpenAI instance - and at the time of writing, you'll need to apply for access here, and then apply for access to the more advanced GPT-4 model here. Expect about a week of turnaround time for getting access.

You now deploy the solution from GitHub to your preferred Azure environment using GitHub Actions. This took about two minutes to complete.

Then, you'll need to configure Microsoft Entra ID authentication. It's a frictionless exercise with Azure CLI but typically takes 1-2 minutes to light up, so don't be too fast. I was.

If you're unsure what Azure OpenAI API version to use, it's 2023-03-15-preview.
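To show where that version string ends up: Azure OpenAI REST calls carry it as the `api-version` query parameter. Below is a minimal Python sketch of how a chat completions request URL and body are put together. The resource name `my-resource` and the deployment name `gpt-35-turbo` are placeholders I made up for illustration, and this is not the accelerator's own code.

```python
import json
from urllib.parse import urlencode

API_VERSION = "2023-03-15-preview"  # the version mentioned in this post


def build_chat_request(resource: str, deployment: str, user_message: str):
    """Return (url, body) for an Azure OpenAI chat completions call.

    `resource` and `deployment` are whatever you named them in your own
    subscription; the values used below are placeholders.
    """
    query = urlencode({"api-version": API_VERSION})
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?{query}"
    )
    # The request body is a JSON document with the chat messages.
    body = json.dumps({"messages": [{"role": "user", "content": user_message}]})
    return url, body


url, body = build_chat_request("my-resource", "gpt-35-turbo", "Hello!")
print(url)
```

The actual call would be a POST of `body` to `url`, authenticated with your key in the `api-key` header.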

How does it work?

Once everything is deployed, and you've restarted the Web App once - the equivalent of doing `iisreset /noforce` from back in the day - the website should be accessible.

First, you'll need to authenticate.

I didn't bother configuring GitHub, so I opted for Microsoft 365.

Old icons are still in use. It's fine.

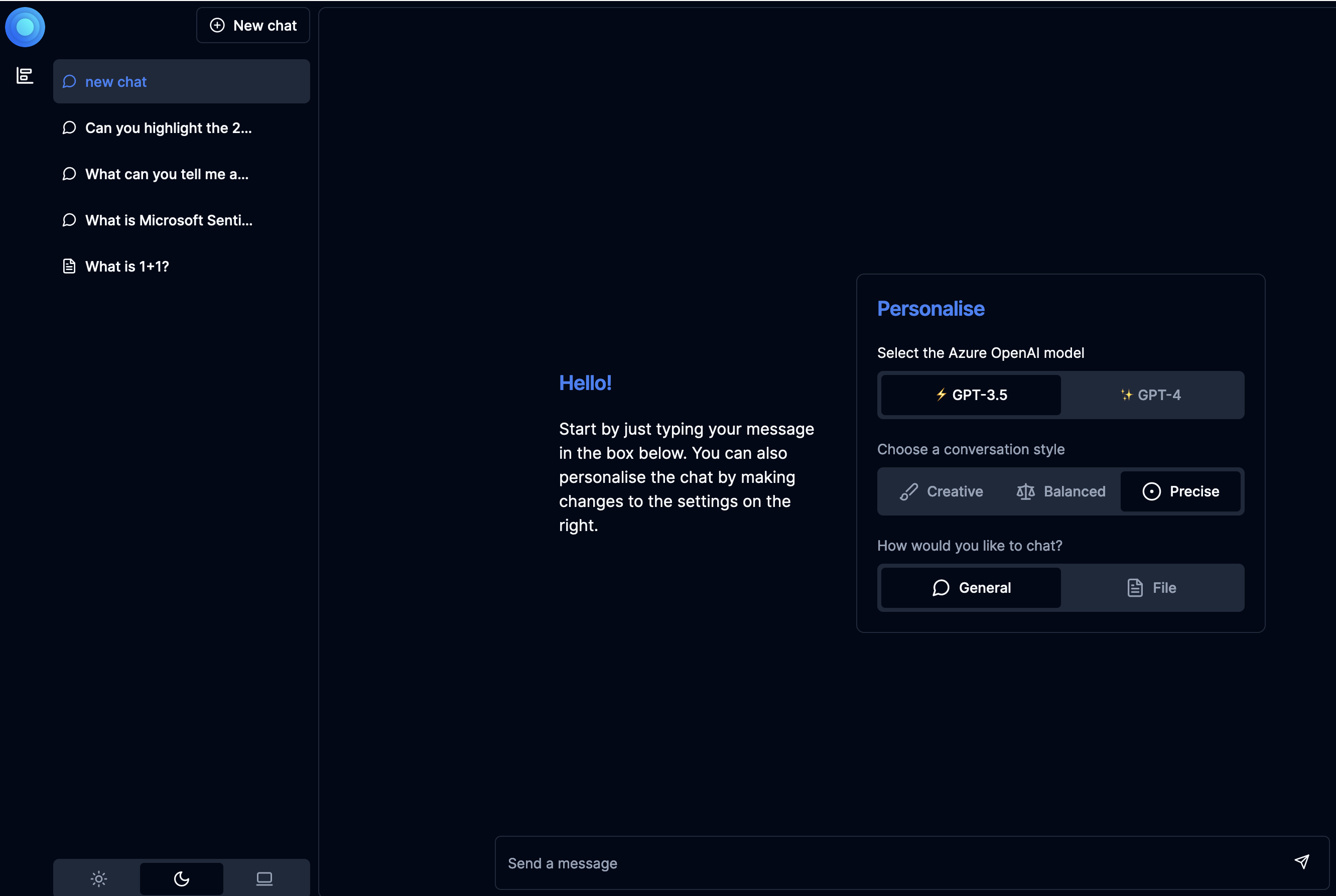

And we're in!

The look and feel is very close to OpenAI's ChatGPT. You can choose between GPT-3.5 and GPT-4 and adjust a few high-level parameters from Azure OpenAI. You cannot upload files since we haven't configured Azure Cognitive Search yet. It's a costly one, at around $250/month, so only deploy it if you plan on using it. The benefit of having it would be the ability to upload custom data as files (.PDF, etc.) and use those in the context of the chats.

The default SKU for the Web App Plan is Premium v3, so the app is very responsive and fast.

What does it cost?

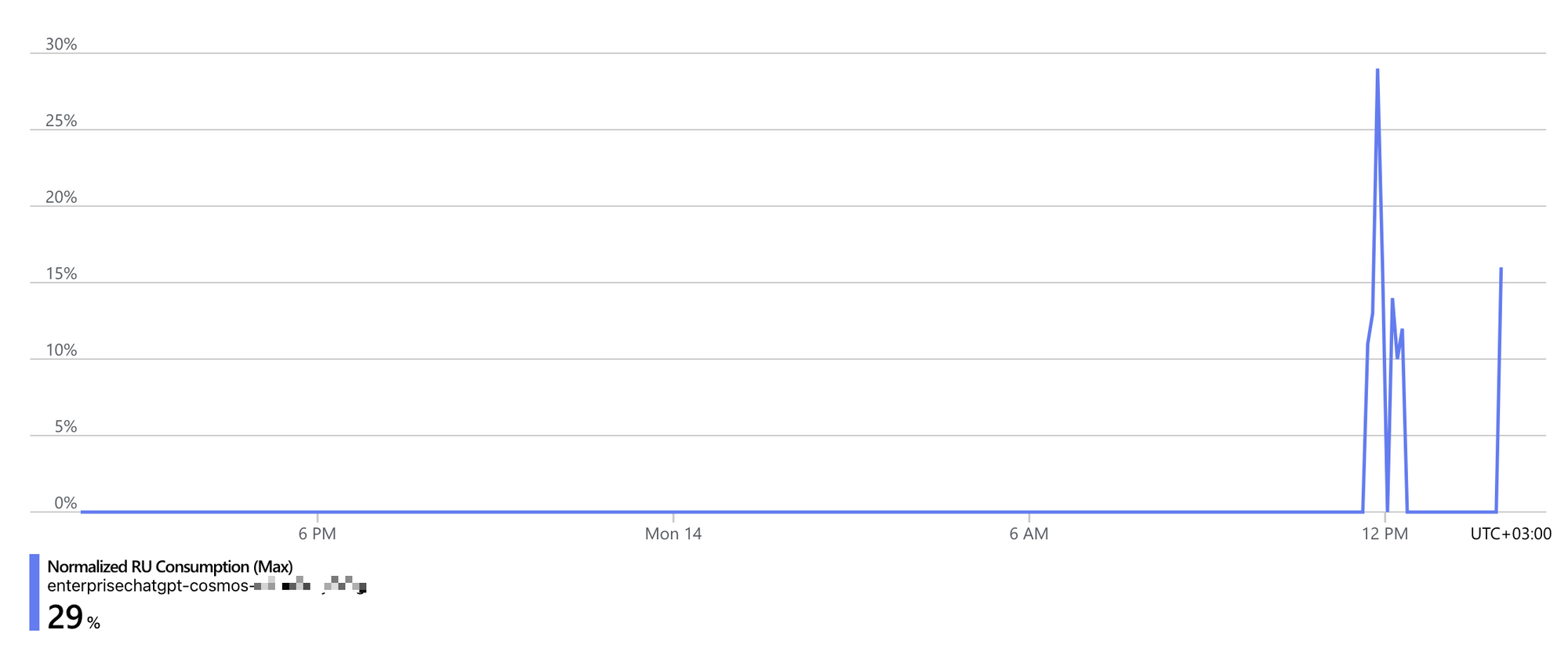

Good question! The Web App and Cosmos DB drive the total cost:

- App Plan (Premium v3 P0V3): 80 €/month

- Cosmos DB: with 400 RU/s, the estimate is about 35 €/month

- Azure OpenAI: based on usage, hard to estimate

I used the service for about 5 minutes, and Cosmos DB request units peaked at 30 %.
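The arithmetic behind those numbers, as a small Python sketch. The two fixed prices are the estimates listed above. The Azure OpenAI portion is left as a parameter you fill in yourself, since it depends entirely on usage.

```python
APP_PLAN_EUR = 80.0   # App Plan (Premium v3 P0V3), estimate from the list above
COSMOS_DB_EUR = 35.0  # Cosmos DB at 400 RU/s, estimate from the list above


def monthly_cost(openai_usage_eur: float = 0.0) -> float:
    """Fixed monthly cost plus whatever Azure OpenAI usage you expect."""
    return APP_PLAN_EUR + COSMOS_DB_EUR + openai_usage_eur


def peak_request_units(provisioned_ru: int, peak_percent: float) -> float:
    """Translate a peak utilization percentage into RU/s."""
    return provisioned_ru * peak_percent / 100


print(monthly_cost())               # 115.0 (fixed baseline in EUR/month)
print(peak_request_units(400, 30))  # 120.0 (RU/s at my 30 % peak)
```

So the baseline is about 115 €/month before any Azure OpenAI usage, and my five-minute session peaked at roughly 120 of the 400 provisioned RU/s.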

As an internal corporate service, it's very affordable. You'll have to set checks and monitoring for excess usage, of course.
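One simple form such a check could take is a projection of month-end spend from what has been spent so far. This is a hypothetical Python sketch, not something the accelerator ships with. The function names, the linear projection, and the 30-day month are all my assumptions, and in practice you would feed it figures from your own cost reporting.

```python
def projected_monthly_spend(
    spend_to_date_eur: float, day_of_month: int, days_in_month: int = 30
) -> float:
    """Linearly extrapolate spend so far to a full month (a naive assumption)."""
    if day_of_month < 1:
        raise ValueError("day_of_month must be at least 1")
    return spend_to_date_eur / day_of_month * days_in_month


def over_budget(
    spend_to_date_eur: float,
    day_of_month: int,
    budget_eur: float,
    days_in_month: int = 30,
) -> bool:
    """True when the projected month-end spend exceeds the budget."""
    projected = projected_monthly_spend(spend_to_date_eur, day_of_month, days_in_month)
    return projected > budget_eur


# 20 EUR spent by day 10 projects to 60 EUR for the month.
print(projected_monthly_spend(20.0, 10))  # 60.0
print(over_budget(20.0, 10, 50.0))        # True
```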

A custom domain

If you've been wondering whether this incarnation of ChatGPT can be published under a custom domain - I'm happy to share that it can.

I'm running my instance at `https://chatgpt.jussiroine.com/`. Since it's hosted on Azure App Service, you can request an SSL certificate for free. The only limitation is that you must register the domain via a CNAME record in your DNS - the typical A record + TXT will not work.